History of numerical weather prediction

The history of numerical weather prediction traces how the use of mathematical models of the atmosphere and oceans to predict the weather and future sea state (the process of numerical weather prediction) has changed over the years. Though first attempted in the 1920s, it was not until the advent of the computer and computer simulation that computation time was reduced to less than the forecast period itself. ENIAC was used to create the first forecasts via computer in 1950, and over the years more powerful computers have been used to increase the size of initial datasets as well as include more complicated versions of the equations of motion. The development of global forecasting models led to the first climate models. The development of limited area (regional) models facilitated advances in forecasting the tracks of tropical cyclones as well as air quality in the 1970s and 1980s.

Because the output of forecast models based on atmospheric dynamics requires corrections near ground level, model output statistics (MOS) were developed in the 1970s and 1980s for individual forecast points (locations). MOS applies statistical techniques to post-process the output of dynamical models with the most recent surface observations and the forecast point's climatology. This technique can correct for model resolution as well as model biases. Even with the increasing power of supercomputers, the forecast skill of numerical weather models only extends to about two weeks into the future, since the density and quality of observations, together with the chaotic nature of the partial differential equations used to calculate the forecast, introduce errors which double every five days. The use of model ensemble forecasts since the 1990s helps to define the forecast uncertainty and extend weather forecasting farther into the future than otherwise possible.
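To make the error-doubling figure concrete, the short sketch below computes how much an initial error is amplified at a given lead time. It assumes a steady doubling every five days, as quoted above; the function name and the idealized exponential form are illustrative assumptions, not part of any operational system.

```python
def error_growth_factor(days, doubling_time_days=5.0):
    """Factor by which an initial error grows after `days`.

    Assumes idealized exponential growth with a fixed doubling time;
    real forecast error growth is not this regular.
    """
    return 2.0 ** (days / doubling_time_days)


# Print the amplification at a few lead times.
for lead in (5, 10, 14):
    print(f"day {lead:2d}: initial error multiplied by {error_growth_factor(lead):.1f}")
```

Under this assumption an initial error grows roughly sevenfold over two weeks, which is in line with the two-week limit on forecast skill mentioned above.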

Background

Until the end of the 19th century, weather prediction was entirely subjective and based on empirical rules, with only limited understanding of the physical mechanisms behind weather processes. In 1901 Cleveland Abbe, founder of the United States Weather Bureau, proposed that the atmosphere is governed by the same principles of thermodynamics and hydrodynamics that were studied in the previous century. In 1904, Vilhelm Bjerknes derived a two-step procedure for model-based weather forecasting. First, a diagnostic step is used to process data to generate initial conditions, which are then advanced in time by a prognostic step that solves the initial value problem. He also identified seven variables that defined the state of the atmosphere at a given point: pressure, temperature, density, humidity, and the three components of the velocity vector. Bjerknes pointed out that equations based on mass continuity, conservation of momentum, the first and second laws of thermodynamics, and the ideal gas law could be used to estimate the state of the atmosphere in the future through numerical methods. With the exception of the second law of thermodynamics, these equations form the basis of the primitive equations used in present-day weather models.
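The distinction between a diagnostic and a prognostic step can be illustrated with a toy sketch. This is not Bjerknes's procedure or any real model: the diagnostic step uses the ideal gas law to obtain density from pressure and temperature at a single instant, and the prognostic step advances one variable in time with a forward Euler update. The function names, the cooling rate, and the time step are all hypothetical.

```python
R_DRY_AIR = 287.05  # specific gas constant for dry air, J/(kg K)


def diagnose_density(pressure_pa, temperature_k):
    """Diagnostic step: ideal gas law, rho = p / (R T), no time dependence."""
    return pressure_pa / (R_DRY_AIR * temperature_k)


def step_forward(value, tendency, dt_seconds):
    """Prognostic step: forward Euler, new value = old value + rate * dt."""
    return value + tendency * dt_seconds


# Diagnose density from an assumed initial pressure and temperature.
pressure = 100000.0   # Pa (hypothetical)
temperature = 288.15  # K (hypothetical)
density = diagnose_density(pressure, temperature)

# Advance temperature six hours at an assumed cooling rate of 1 K per hour.
cooling_rate = -1.0 / 3600.0  # K per second (hypothetical)
for _ in range(6):
    temperature = step_forward(temperature, cooling_rate, 3600.0)

print(f"diagnosed density: {density:.3f} kg/m^3")
print(f"temperature after 6 h: {temperature:.2f} K")
```

A real model applies the same two ideas to all seven state variables at every grid point, with tendencies given by the governing equations rather than a fixed rate.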

In 1922, Lewis Fry Richardson published the first attempt at forecasting the weather numerically. Using a hydrostatic variation of Bjerknes's primitive equations, Richardson produced by hand a 6-hour forecast for the state of the atmosphere over two points in central Europe, taking at least six weeks to do so. His forecast calculated that the change in surface pressure would be 145 millibars (4.3 inHg), an unrealistic value incorrect by two orders of magnitude. The large error was caused by an imbalance in the pressure and wind velocity fields used as the initial conditions in his analysis.

The first successful numerical prediction was performed using the ENIAC digital computer in 1950 by a team composed of American meteorologists Jule Charney, Philip Thompson, and Larry Gates, Norwegian meteorologist Ragnar Fjørtoft, and applied mathematician John von Neumann. They used a simplified form of atmospheric dynamics based on solving the barotropic vorticity equation over a single layer of the atmosphere, by computing the geopotential height of the atmosphere's 500 mb pressure surface. This simplification greatly reduced demands on computer time and memory, so the computations could be performed on the relatively primitive computers of the day. When news of the first weather forecast by ENIAC was received by Richardson in 1950, he remarked that the results were an "enormous scientific advance." The first calculations for a 24-hour forecast took ENIAC nearly 24 hours to produce, but Charney's group noted that most of that time was spent in "manual operations", and expressed hope that forecasts of the weather before it occurs would soon be realized.
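For reference, the barotropic vorticity equation is commonly written in the textbook form below. The notation is an assumption of this sketch and is not taken from the 1950 work: $\zeta$ is the relative vorticity, $f$ the Coriolis parameter, $\mathbf{V}$ the horizontal wind, $Z$ the geopotential height of the pressure surface, $g$ the gravitational acceleration, and $f_0$ a reference value of $f$.

$$
\frac{\partial \zeta}{\partial t} + \mathbf{V} \cdot \nabla\,(\zeta + f) = 0,
\qquad
\zeta \approx \frac{g}{f_0}\,\nabla^2 Z
$$

The first relation states that absolute vorticity $\zeta + f$ is conserved following the horizontal flow. The second is the geostrophic approximation that ties vorticity to the height field, which is why a forecast of a single 500 mb height surface is enough to step the equation forward.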

Early years

In September 1954, Carl-Gustav Rossby's group at the Swedish Meteorological and Hydrological Institute produced the first operational forecast (i.e. routine predictions for practical use) based on the barotropic equation. Operational numerical weather prediction in the United States began in 1955 under the Joint Numerical Weather Prediction Unit (JNWPU), a joint project by the U.S. Air Force, Navy, and Weather Bureau. The JNWPU model was originally a three-layer barotropic model, also developed by Charney. It only modeled the atmosphere in the Northern Hemisphere. In 1956, the JNWPU switched to a two-layer thermotropic model developed by Thompson and Gates. The main assumption made by the thermotropic model is that while the magnitude of the thermal wind may change, its direction does not change with respect to height, and thus the baroclinicity in the atmosphere can be simulated using the 500 mb and 1000 mb geopotential height surfaces and the average thermal wind between them. However, due to the low skill shown by the thermotropic model, the JNWPU reverted to the single-layer barotropic model in 1958. The Japan Meteorological Agency became the third organization to initiate operational numerical weather prediction in 1959. The first real-time forecasts made by Australia's Bureau of Meteorology in 1969 for portions of the Southern Hemisphere were also based on the single-layer barotropic model.

Later models used more complete equations for atmospheric dynamics and thermodynamics. In 1959, Karl-Heinz Hinkelmann produced the first reasonable primitive equation forecast, 37 years after Richardson's failed attempt. Hinkelmann did so by removing small oscillations from the numerical model during initialization. In 1966, West Germany and the United States began producing operational forecasts based on primitive-equation models, followed by the United Kingdom in 1972 and Australia in 1977. Later additions to primitive equation models allowed additional insight into different weather phenomena. In the United States, solar radiation effects were added to the primitive equation model in 1967; moisture effects and latent heat were added in 1968; and feedback effects from rain on convection were incorporated in 1971. Three years later, the first global forecast model was introduced. Sea ice began to be initialized in forecast models in 1971. Efforts to involve sea surface temperature in model initialization began in 1972 due to its role in modulating weather in higher latitudes of the Pacific.

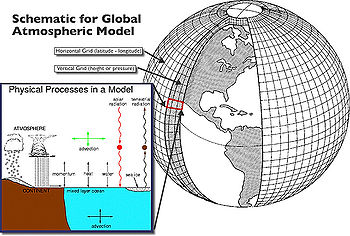

Global forecast models

A global forecast model is a weather forecasting model which initializes and forecasts the weather throughout the Earth's troposphere. It is a computer program that produces meteorological information for future times at given locations and altitudes. Within any modern model is a set of equations, known as the primitive equations, used to predict the future state of the atmosphere. These equations, along with the ideal gas law, are used to evolve the density, pressure, and potential temperature scalar fields and the velocity vector field of the atmosphere through time. Additional transport equations for pollutants and other aerosols are included in some primitive-equation high-resolution models as well. The equations used are nonlinear partial differential equations which are impossible to solve exactly through analytical methods, with the exception of a few idealized cases. Therefore, numerical methods are used to obtain approximate solutions. Different models use different solution methods: some global models and almost all regional models use finite difference methods for all three spatial dimensions, while other global models and a few regional models use spectral methods for the horizontal dimensions and finite-difference methods in the vertical.
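As a minimal illustration of the finite-difference idea, the sketch below approximates a derivative with a centered difference and compares it with the exact value. It is a one-dimensional toy with a hypothetical function name, not the discretization of any particular forecast model.

```python
import math


def centered_difference(f, x, h):
    """Approximate f'(x) using values one grid spacing h on either side."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


# Compare with the exact derivative of sin, which is cos.
x = 1.0
exact = math.cos(x)
for h in (0.1, 0.05, 0.025):
    approx = centered_difference(math.sin, x, h)
    print(f"h = {h:<6} approx = {approx:.6f}  error = {abs(approx - exact):.2e}")
```

Halving the grid spacing cuts the error by roughly a factor of four, because the centered difference is second-order accurate. Finer grids give better approximations at the price of more computation, which is the trade-off that has tied model resolution to available computer power.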

The National Meteorological Center's Global Spectral Model was introduced during August 1980. The European Centre for Medium-Range Weather Forecasts model debuted on May 1, 1985. The United Kingdom Met Office has been running its global model since the late 1980s, adding a 3D-Var data assimilation scheme in mid-1999. The Canadian Meteorological Centre has been running a global model since 1994. Between 2000 and 2002, the Environmental Modeling Center ran the Aviation (AVN) model for shorter range forecasts and the Medium Range Forecast (MRF) model at longer time ranges. During this time, the AVN model was extended to the end of the forecast period, eliminating the need for the MRF and thereby replacing it. In late 2002, the AVN model was renamed the Global Forecast System (GFS). The German Weather Service has been running its global hydrostatic model, the GME, using a hexagonal icosahedral grid since 2002.

Global climate models

In 1956, Norman Phillips developed a mathematical model which could realistically depict monthly and seasonal patterns in the troposphere, which became the first successful climate model. Following Phillips's work, several groups began working to create general circulation models. The first general circulation climate model that combined both oceanic and atmospheric processes was developed in the late 1960s at the NOAA Geophysical Fluid Dynamics Laboratory. By the early 1980s, the United States' National Center for Atmospheric Research had developed the Community Atmosphere Model; this model has been continuously refined into the 2000s. In 1986, efforts began to initialize and model soil and vegetation types, which led to more realistic forecasts. For example, the Center for Ocean-Land Atmosphere Studies (COLA) model showed a warm temperature bias of 2-4°C (4-7°F) and a low precipitation bias due to incorrect parameterization of crop and vegetation type across the central United States. Coupled ocean-atmosphere climate models such as the Hadley Centre for Climate Prediction and Research's HadCM3 model are currently being used as inputs for climate change studies. The importance of gravity waves was neglected within these models until the mid-1980s. Now, gravity waves are required within global climate models in order to properly simulate regional and global scale circulations, though their broad spectrum makes their incorporation complicated. The Climate System Model (CSM) was developed at the National Center for Atmospheric Research in January 1994.

Limited-area models

The horizontal domain of a model is either global, covering the entire Earth, or regional, covering only part of the Earth. Regional models (also known as limited-area models, or LAMs) allow for the use of finer (or smaller) grid spacing than global models. Essentially, the available computational resources are focused on a specific area instead of being spread over the globe. This allows regional models to resolve explicitly smaller-scale meteorological phenomena that cannot be represented on the coarser grid of a global model. Regional models use a global model to supply the conditions at the edge of their domain, so that systems from outside the regional model domain can move into its area. Uncertainty and errors within regional models are introduced by the global model used for the boundary conditions at the edge of the regional model, as well as by errors attributable to the regional model itself.

In the United States, the first operational regional model, the limited-area fine-mesh (LFM) model, was introduced in 1971. Its development was halted, or frozen, in 1986. The Nested Grid Model (NGM) debuted in 1987 and was also used to create model output statistics for the United States. Its development was frozen in 1991. The ETA model was implemented for the United States in 1993. Météo-France has been running their Action de Recherche Petite Échelle Grande Échelle (ALADIN) mesoscale model for France, based upon the ECMWF global model, since 1995. In July 1996, the Bureau of Meteorology implemented the Limited Area Prediction System (LAPS). The Canadian Global Environmental Multiscale Model (GEM) mesoscale model went into operational use on February 24, 1997.

The German Weather Service developed the High Resolution Regional Model (HRM) in 1999, which uses hydrostatic assumptions and is widely run within the operational and research meteorological communities. The Antarctic Mesoscale Prediction System (AMPS) was developed for the southernmost continent in 2000 by the United States Antarctic Program. The German non-hydrostatic Lokal-Modell for Europe (LME) has been run since 2002, and an increase in areal domain became operational on September 28, 2005. The Japan Meteorological Agency has run a high-resolution, non-hydrostatic mesoscale model since September 2004.

Air quality models

The technical literature on air pollution dispersion is quite extensive and dates back to the 1930s and earlier. One of the early air pollutant plume dispersion equations was derived by Bosanquet and Pearson. Their equation did not assume a Gaussian distribution, nor did it include the effect of ground reflection of the pollutant plume. Sir Graham Sutton derived an air pollutant plume dispersion equation in 1947 which did include the assumption of a Gaussian distribution for the vertical and crosswind dispersion of the plume, and also included the effect of ground reflection of the plume. Under the stimulus provided by the advent of stringent environmental control regulations, there was an immense growth in the use of air pollutant plume dispersion calculations between the late 1960s and today. A great many computer programs for calculating the dispersion of air pollutant emissions were developed during that period, and they were called "air dispersion models". The basis for most of those models was the Complete Equation For Gaussian Dispersion Modeling Of Continuous, Buoyant Air Pollution Plumes. The Gaussian air pollutant dispersion equation requires the input of H, the pollutant plume's centerline height above ground level. H is the sum of Hs (the actual physical height of the pollutant plume's emission source point) and ΔH (the plume rise due to the plume's buoyancy).
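The relationship between H, Hs and ΔH, and the role of ground reflection, can be illustrated with a short sketch. It uses the common textbook form of the Gaussian plume equation with a single reflection at the ground, which is a simplification and not necessarily the "complete equation" named above. The function names, argument names and sample numbers are hypothetical; the dispersion parameters sigma_y and sigma_z are taken as given inputs here, whereas in practice they depend on downwind distance and atmospheric stability.

```python
import math


def effective_height(hs, delta_h):
    """Plume centerline height H = Hs + ΔH (all in metres)."""
    return hs + delta_h


def gaussian_plume(q, u, sigma_y, sigma_z, y, z, h):
    """Concentration from a continuous point source (textbook form).

    q        emission rate (g/s)
    u        wind speed at plume height (m/s)
    sigma_y  crosswind dispersion parameter (m), assumed given
    sigma_z  vertical dispersion parameter (m), assumed given
    y, z     crosswind offset and height of the receptor (m)
    h        plume centerline height H from effective_height (m)
    """
    crosswind = math.exp(-y ** 2 / (2 * sigma_y ** 2))
    # The second exponential is the "image source" term that models
    # reflection of the plume at the ground.
    vertical = (math.exp(-(z - h) ** 2 / (2 * sigma_z ** 2))
                + math.exp(-(z + h) ** 2 / (2 * sigma_z ** 2)))
    return q / (2 * math.pi * u * sigma_y * sigma_z) * crosswind * vertical


if __name__ == "__main__":
    # Illustrative numbers only, not measurements.
    h = effective_height(hs=50.0, delta_h=25.0)
    print(gaussian_plume(q=100.0, u=5.0, sigma_y=30.0, sigma_z=15.0,
                         y=0.0, z=0.0, h=h))
```

At ground level (z = 0) the two vertical terms are equal, so the reflection term doubles the concentration relative to a plume that ignores the ground.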

To determine ΔH, many if not most of the air dispersion models developed between the late 1960s and the early 2000s used what are known as "the Briggs equations". G. A. Briggs first published his plume rise observations and comparisons in 1965. In 1968, at a symposium sponsored by Conservation of Clean Air and Water in Europe, he compared many of the plume rise models then available in the literature. In that same year, Briggs also wrote the section of the publication edited by Slade dealing with the comparative analyses of plume rise models. That was followed in 1969 by his classic critical review of the entire plume rise literature, in which he proposed the set of plume rise equations that became widely known by that name. Subsequently, Briggs modified his 1969 plume rise equations in 1971 and in 1972.

The Urban Airshed Model, a regional forecast model for the effects of air pollution and acid rain, was developed by a private company in the USA in 1970. Development of this model was taken over by the Environmental Protection Agency and improved in the mid to late 1970s using results from a regional air pollution study. While developed in California, this model was later used in other areas of North America, Europe and Asia during the 1980s. The Community Multiscale Air Quality model (CMAQ) is an open source air quality model that has been run within the United States in conjunction with the NAM mesoscale model since 2004. The first operational air quality model in Canada, the Canadian Hemispheric and Regional Ozone and NOx System (CHRONOS), began running in 2001. It was replaced with the Global Environmental Multiscale model - Modelling Air quality and Chemistry (GEM-MACH) model in November 2009.

Tropical cyclone models

During 1972, the first model to forecast storm surge along the continental shelf was developed, known as the Special Program to List the Amplitude of Surges from Hurricanes (SPLASH). In 1978, the first hurricane-tracking model based on atmospheric dynamics, the movable fine-mesh (MFM) model, began operating. Within the field of tropical cyclone track forecasting, despite the ever-improving dynamical model guidance which occurred with increased computational power, it was not until the 1980s that numerical weather prediction showed skill, and not until the 1990s that it consistently outperformed statistical or simple dynamical models. In the early 1980s, the assimilation of satellite-derived winds from water vapor, infrared, and visible satellite imagery was found to improve tropical cyclone track forecasting. The Geophysical Fluid Dynamics Laboratory (GFDL) hurricane model was used for research purposes between 1973 and the mid-1980s. Once it was determined that it could show skill in hurricane prediction, a multi-year transition transformed the research model into an operational model which could be used by the National Weather Service in 1995.

The Hurricane Weather Research and Forecasting (HWRF) model is a specialized version of the Weather Research and Forecasting (WRF) model and is used to forecast the track and intensity of tropical cyclones. The model was developed by the National Oceanic and Atmospheric Administration (NOAA), the U.S. Naval Research Laboratory, the University of Rhode Island, and Florida State University. It became operational in 2007. Despite improvements in track forecasting, predictions of the intensity of a tropical cyclone based on numerical weather prediction continue to be a challenge, since statistical methods continue to show higher skill than dynamical guidance.

Ocean models

The first ocean wave models were developed in the 1960s and 1970s. These models tended to overestimate the role of wind in wave development and underplayed wave interactions. A lack of knowledge concerning how waves interacted with each other, assumptions regarding a maximum wave height, and deficiencies in computer power limited the performance of the models. After experiments were performed in 1968, 1969, and 1973, wind input from the Earth's atmosphere was weighted more accurately in the predictions. A second generation of models was developed in the 1980s, but they could neither realistically model swell nor depict wind-driven waves (also known as wind waves) caused by rapidly changing wind fields, such as those within tropical cyclones. This prompted the development of a third generation of wave models from 1988 onward.

Within this third generation of models, the spectral wave transport equation is used to describe the change in wave spectrum over changing topography. It simulates wave generation, wave movement (propagation within a fluid), wave shoaling, refraction, energy transfer between waves, and wave dissipation. Since surface winds are the primary forcing mechanism in the spectral wave transport equation, ocean wave models use information produced by numerical weather prediction models as inputs to determine how much energy is transferred from the atmosphere into the layer at the surface of the ocean. Along with dissipation of energy through whitecaps and resonance between waves, surface winds from numerical weather models allow for more accurate predictions of the state of the sea surface.

Model output statistics

Because forecast models based upon the equations for atmospheric dynamics do not perfectly determine weather conditions near the ground, statistical corrections were developed to attempt to resolve this problem. Statistical models were created based upon the three-dimensional fields produced by numerical weather models, surface observations, and the climatological conditions for specific locations. These statistical models are collectively referred to as model output statistics (MOS), and were developed by the National Weather Service for its suite of weather forecasting models by 1976. The United States Air Force developed its own set of MOS based upon its dynamical weather model by 1983.
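The idea of a statistical correction fitted to past model output can be sketched with a one-predictor linear regression. This is a deliberately reduced illustration under stated assumptions: operational MOS equations are generally built from many predictors, whereas this sketch relates a single raw model value (for example a forecast temperature) to the value later observed at a station. The function names and the sample numbers are hypothetical and the data are made up for illustration.

```python
def fit_mos(model_values, observed_values):
    """Least-squares fit of observed = intercept + slope * model.

    Both arguments are equal-length lists of past model forecasts and the
    matching station observations (hypothetical training data).
    """
    n = len(model_values)
    mean_x = sum(model_values) / n
    mean_y = sum(observed_values) / n
    sxx = sum((x - mean_x) ** 2 for x in model_values)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(model_values, observed_values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def apply_mos(coefficients, model_value):
    """Correct a new raw model value with the fitted relationship."""
    intercept, slope = coefficients
    return intercept + slope * model_value


if __name__ == "__main__":
    # Made-up past forecasts and observations in degrees Celsius.
    past_model = [10.0, 12.0, 15.0, 20.0, 23.0]
    past_observed = [11.2, 12.7, 15.9, 19.8, 22.9]
    coefficients = fit_mos(past_model, past_observed)
    print(apply_mos(coefficients, 18.0))
```

The fitted coefficients absorb systematic local biases in the model, which is why the correction is trained separately for specific locations.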

Ensembles

As proposed by Edward Lorenz in 1963, it is impossible for long-range forecasts (those made more than two weeks in advance) to predict the state of the atmosphere with any degree of skill, owing to the chaotic nature of the fluid dynamics equations involved. Extremely small errors in temperature, winds, or other initial inputs given to numerical models will amplify, doubling every five days. Furthermore, existing observation networks have limited spatial and temporal resolution (for example, over large bodies of water such as the Pacific Ocean), which introduces uncertainty into the true initial state of the atmosphere. While a set of equations, known as the Liouville equations, exists to determine the initial uncertainty in the model initialization, the equations are too complex to run in real-time, even with the use of supercomputers. These uncertainties limit forecast model accuracy to about six days into the future.
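The five-day doubling described above can be turned into a small worked calculation. This is an idealized sketch that assumes purely exponential growth with a fixed doubling time; real forecast errors eventually saturate, which this arithmetic ignores. The function name and the 0.1 degree starting error are hypothetical.

```python
def error_after(initial_error, days, doubling_time_days=5.0):
    """Error size after `days`, assuming it doubles every `doubling_time_days`."""
    return initial_error * 2.0 ** (days / doubling_time_days)


if __name__ == "__main__":
    # A hypothetical 0.1 degree initial temperature error.
    for day in (0, 5, 10, 15):
        print(day, error_after(0.1, day))
```

Under this assumption an initial error doubles three times in fifteen days, so it is eight times larger by the end of roughly two weeks.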

Edward Epstein recognized in 1969 that the atmosphere could not be completely described with a single forecast run due to inherent uncertainty, and proposed a stochastic dynamic model that produced means and variances for the state of the atmosphere. Although these Monte Carlo simulations showed skill, in 1974 Cecil Leith revealed that they produced adequate forecasts only when the ensemble probability distribution was a representative sample of the probability distribution in the atmosphere. However, it was not until 1992 that ensemble forecasts began to be prepared by the European Centre for Medium-Range Weather Forecasts, the Canadian Meteorological Centre, and the National Centers for Environmental Prediction. The ECMWF model, the Ensemble Prediction System, uses singular vectors to simulate the initial probability density, while the NCEP ensemble, the Global Ensemble Forecasting System, uses a technique known as vector breeding.
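The Monte Carlo idea of running many slightly different forecasts and summarizing them by a mean and a variance can be sketched on a toy chaotic system. The sketch below uses the three-variable Lorenz system as a stand-in for an atmospheric model and perturbs the starting state with random Gaussian noise. That is an assumption made for illustration: it is not the singular-vector or vector-breeding method used by the operational centres, and all function names, parameter defaults and the starting state are hypothetical.

```python
import random
import statistics


def lorenz_step(state, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Advance the toy Lorenz system one step with 4th-order Runge-Kutta."""
    def deriv(s):
        x, y, z = s
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = deriv(state)
    k2 = deriv(shifted(state, k1, dt / 2))
    k3 = deriv(shifted(state, k2, dt / 2))
    k4 = deriv(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def run(state, steps):
    """Integrate forward a fixed number of steps (one 'forecast run')."""
    for _ in range(steps):
        state = lorenz_step(state)
    return state


def ensemble_forecast(analysis, n_members=20, spread=1e-3, steps=500, seed=0):
    """Return the ensemble mean and variance of the first variable.

    Each member starts from the analysis plus small random noise, which
    stands in for uncertainty in the initial state.
    """
    rng = random.Random(seed)
    members = []
    for _ in range(n_members):
        perturbed = tuple(v + rng.gauss(0.0, spread) for v in analysis)
        members.append(run(perturbed, steps))
    xs = [m[0] for m in members]
    return statistics.mean(xs), statistics.variance(xs)


if __name__ == "__main__":
    for steps in (50, 500, 2000):
        print(steps, ensemble_forecast((1.0, 1.0, 1.0), steps=steps))
```

The variance across members acts as a rough measure of forecast confidence: members that stay close together suggest a predictable situation, while a large spread signals that the single-run forecast should not be trusted.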