Convex optimization

Encyclopedia

Convex minimization, a subfield of optimization, studies the problem of minimizing convex function

s over convex set

s. The convexity property can make optimization in some sense "easier" than the general case - for example, any local optimum

must be a global optimum

.

Given a real

vector space

together with a convex

together with a convex

, real-valued function

defined on a convex subset

of

of  , the problem is to find a point

, the problem is to find a point  in

in  for which the number

for which the number  is smallest, i.e., a point

is smallest, i.e., a point  such that

such that

for all

for all  .

.

The convexity of makes the powerful tools of convex analysis

makes the powerful tools of convex analysis

applicable: the Hahn–Banach theorem

and the theory of subgradients lead to a particularly satisfying theory of necessary and sufficient conditions

for optimality, a duality theory

generalizing that for linear programming

, and effective computational methods.

Convex minimization has applications in a wide range of disciplines, such as automatic control systems, estimation and signal processing

, communications and networks, electronic circuit design

, data analysis and modeling, statistics

(optimal design

), and finance

. With recent improvements in computing and in optimization theory, convex minimization is nearly as straightforward as linear programming

. Many optimization problems can be reformulated as convex minimization problems. For example, the problem of maximizing a concave function f can be re-formulated equivalently as a problem of minimizing the function -f, which is convex.

These results are used by the theory of convex minimization along with geometric notions from functional analysis

such as the Hilbert projection theorem

, the separating hyperplane theorem, and Farkas' lemma.

A convex minimization problem is thus written as

Convex function

In mathematics, a real-valued function f defined on an interval is called convex if the graph of the function lies below the line segment joining any two points of the graph. Equivalently, a function is convex if its epigraph is a convex set...

s over convex set

Convex set

In Euclidean space, an object is convex if for every pair of points within the object, every point on the straight line segment that joins them is also within the object...

s. The convexity property can make optimization in some sense "easier" than the general case - for example, any local optimum

Local optimum

Local optimum is a term in applied mathematics and computer science.A local optimum of a combinatorial optimization problem is a solution that is optimal within a neighboring set of solutions...

must be a global optimum

Global optimum

In mathematics, a global optimum is a selection from a given domain which yields either the highest value or lowest value , when a specific function is applied. For example, for the function...

.

Given a real

Real number

In mathematics, a real number is a value that represents a quantity along a continuum, such as -5 , 4/3 , 8.6 , √2 and π...

vector space

Vector space

A vector space is a mathematical structure formed by a collection of vectors: objects that may be added together and multiplied by numbers, called scalars in this context. Scalars are often taken to be real numbers, but one may also consider vector spaces with scalar multiplication by complex...

together with a convex

together with a convexConvex function

In mathematics, a real-valued function f defined on an interval is called convex if the graph of the function lies below the line segment joining any two points of the graph. Equivalently, a function is convex if its epigraph is a convex set...

, real-valued function

Function (mathematics)

In mathematics, a function associates one quantity, the argument of the function, also known as the input, with another quantity, the value of the function, also known as the output. A function assigns exactly one output to each input. The argument and the value may be real numbers, but they can...

defined on a convex subset

Convex set

In Euclidean space, an object is convex if for every pair of points within the object, every point on the straight line segment that joins them is also within the object...

of

of  , the problem is to find a point

, the problem is to find a point  in

in  for which the number

for which the number  is smallest, i.e., a point

is smallest, i.e., a point  such that

such that for all

for all  .

.The convexity of

makes the powerful tools of convex analysis

makes the powerful tools of convex analysisConvex analysis

Convex analysis is the branch of mathematics devoted to the study of properties of convex functions and convex sets, often with applications in convex minimization, a subdomain of optimization theory....

applicable: the Hahn–Banach theorem

Hahn–Banach theorem

In mathematics, the Hahn–Banach theorem is a central tool in functional analysis. It allows the extension of bounded linear functionals defined on a subspace of some vector space to the whole space, and it also shows that there are "enough" continuous linear functionals defined on every normed...

and the theory of subgradients lead to a particularly satisfying theory of necessary and sufficient conditions

Necessary and sufficient conditions

In logic, the words necessity and sufficiency refer to the implicational relationships between statements. The assertion that one statement is a necessary and sufficient condition of another means that the former statement is true if and only if the latter is true.-Definitions:A necessary condition...

for optimality, a duality theory

Dual problem

In constrained optimization, it is often possible to convert the primal problem to a dual form, which is termed a dual problem. Usually dual problem refers to the Lagrangian dual problem but other dual problems are used, for example, the Wolfe dual problem and the Fenchel dual problem...

generalizing that for linear programming

Linear programming

Linear programming is a mathematical method for determining a way to achieve the best outcome in a given mathematical model for some list of requirements represented as linear relationships...

, and effective computational methods.

Convex minimization has applications in a wide range of disciplines, such as automatic control systems, estimation and signal processing

Signal processing

Signal processing is an area of systems engineering, electrical engineering and applied mathematics that deals with operations on or analysis of signals, in either discrete or continuous time...

, communications and networks, electronic circuit design

Circuit design

The process of circuit design can cover systems ranging from complex electronic systems all the way down to the individual transistors within an integrated circuit...

, data analysis and modeling, statistics

Statistics

Statistics is the study of the collection, organization, analysis, and interpretation of data. It deals with all aspects of this, including the planning of data collection in terms of the design of surveys and experiments....

(optimal design

Optimal design

Optimal designs are a class of experimental designs that are optimal with respect to some statistical criterion.In the design of experiments for estimating statistical models, optimal designs allow parameters to be estimated without bias and with minimum-variance...

), and finance

Finance

"Finance" is often defined simply as the management of money or “funds” management Modern finance, however, is a family of business activity that includes the origination, marketing, and management of cash and money surrogates through a variety of capital accounts, instruments, and markets created...

. With recent improvements in computing and in optimization theory, convex minimization is nearly as straightforward as linear programming

Linear programming

Linear programming is a mathematical method for determining a way to achieve the best outcome in a given mathematical model for some list of requirements represented as linear relationships...

. Many optimization problems can be reformulated as convex minimization problems. For example, the problem of maximizing a concave function f can be re-formulated equivalently as a problem of minimizing the function -f, which is convex.

Theory

The following statements are true about the convex minimization problem:- if a local minimum exists, then it is a global minimum.

- the set of all (global) minima is convex.

- for each strictly convex function, if the function has a minimum, then the minimum is unique.

These results are used by the theory of convex minimization along with geometric notions from functional analysis

Functional analysis

Functional analysis is a branch of mathematical analysis, the core of which is formed by the study of vector spaces endowed with some kind of limit-related structure and the linear operators acting upon these spaces and respecting these structures in a suitable sense...

such as the Hilbert projection theorem

Hilbert projection theorem

In mathematics, the Hilbert projection theorem is a famous result of convex analysis that says that for every point x in a Hilbert space H and every closed convex C \subset H, there exists a unique point y \in C for which \lVert x - y \rVert is minimized over C....

, the separating hyperplane theorem, and Farkas' lemma.

Standard form

Standard form is the usual and most intuitive form of describing a convex minimization problem. It consists of the following three parts:- A convex function

to be minimized over the variable

to be minimized over the variable

- Inequality constraints of the form

, where the functions

, where the functions  are convex

are convex - Equality constraints of the form

, where the functions

, where the functions  are affineAffine transformationIn geometry, an affine transformation or affine map or an affinity is a transformation which preserves straight lines. It is the most general class of transformations with this property...

are affineAffine transformationIn geometry, an affine transformation or affine map or an affinity is a transformation which preserves straight lines. It is the most general class of transformations with this property...

. In practice, the terms "linear" and "affine" are often used interchangeably. Such constraints can be expressed in the form , where

, where  and

and  are column-vectors..

are column-vectors..

A convex minimization problem is thus written as

-

Note that every equality constraint can be equivalently replaced by a pair of inequality constraints

can be equivalently replaced by a pair of inequality constraints  and

and  . Therefore, for theoretical purposes, equality constraints are redundant; however, it can be beneficial to treat them specially in practice.

. Therefore, for theoretical purposes, equality constraints are redundant; however, it can be beneficial to treat them specially in practice.

Following from this fact, it is easy to understand why has to be affine as opposed to merely being convex. If

has to be affine as opposed to merely being convex. If  is convex,

is convex,  is convex, but

is convex, but  is concave. Therefore, the only way for

is concave. Therefore, the only way for  to be convex is for

to be convex is for  to be affine.

to be affine.

Examples

The following problems are all convex minimization problems, or can be transformed into convex minimizations problems via a change of variables:

- Least squaresLeast squaresThe method of least squares is a standard approach to the approximate solution of overdetermined systems, i.e., sets of equations in which there are more equations than unknowns. "Least squares" means that the overall solution minimizes the sum of the squares of the errors made in solving every...

- Linear programmingLinear programmingLinear programming is a mathematical method for determining a way to achieve the best outcome in a given mathematical model for some list of requirements represented as linear relationships...

- Quadratic programmingQuadratic programmingQuadratic programming is a special type of mathematical optimization problem. It is the problem of optimizing a quadratic function of several variables subject to linear constraints on these variables....

- Conic optimizationConic optimizationConic optimization is a subfield of convex optimization that studies a class of structured convex optimization problems called conic optimization problems...

- Geometric programming

- Second order cone programming

- Semidefinite programmingSemidefinite programmingSemidefinite programming is a subfield of convex optimization concerned with the optimization of a linear objective functionover the intersection of the cone of positive semidefinite matrices with an affine space, i.e., a spectrahedron....

- Quadratically constrained quadratic programming

- Entropy maximization

Lagrange multipliers

Consider a convex minimization problem given in standard form by a cost function and inequality constraints

and inequality constraints  , where

, where  . Then the domain

. Then the domain  is:

is:

The Lagrangian functionLagrange multipliersIn mathematical optimization, the method of Lagrange multipliers provides a strategy for finding the maxima and minima of a function subject to constraints.For instance , consider the optimization problem...

for the problem is

- L(x,λ0,...,λm) = λ0f(x) + λ1g1(x) + ... + λmgm(x).

For each point x in X that minimizes f over X, there exist real numbers λ0, ..., λm, called Lagrange multipliersLagrange multipliersIn mathematical optimization, the method of Lagrange multipliers provides a strategy for finding the maxima and minima of a function subject to constraints.For instance , consider the optimization problem...

,

that satisfy these conditions simultaneously:

- x minimizes L(y, λ0, λ1, ..., λm) over all y in X,

- λ0 ≥ 0, λ1 ≥ 0, ..., λm ≥ 0, with at least one λk>0,

- λ1g1(x) = 0, ..., λmgm(x) = 0 (complementary slackness).

If there exists a "strictly feasible point", i.e., a point z satisfying- g1(z) < 0,...,gm(z) < 0,

then the statement above can be upgraded to assert that λ0=1.

Conversely, if some x in X satisfies 1-3 for scalarScalar (mathematics)In linear algebra, real numbers are called scalars and relate to vectors in a vector space through the operation of scalar multiplication, in which a vector can be multiplied by a number to produce another vector....

s λ0, ..., λm with λ0 = 1, then x is certain to minimize f over X.

Methods

Convex minimization problems can be solved by the following contemporary methods:- "Bundle methods" (Wolfe, Lemaréchal), and

- Subgradient projection methods (Polyak),

- Interior-point methods (Nemirovskii and Nesterov).

Other methods of interest:- Cutting-plane methods

- Ellipsoid methodEllipsoid methodIn mathematical optimization, the ellipsoid method is an iterative method for minimizing convex functions. When specialized to solving feasible linear optimization problems with rational data, the ellipsoid method is an algorithm, which finds an optimal solution in a finite number of steps.The...

- Subgradient methodSubgradient methodSubgradient methods are iterative methods for solving convex minimization problems. Originally developed by Naum Z. Shor and others in the 1960s and 1970s, subgradient methods are convergent when applied even to a non-differentiable objective function...

Subgradient methods can be implemented simply and so are widely used.

Maximizing convex functions

Besides convex minimization, the field of convex optimization also considers the far more difficult problem of maximizing convex functions:- Consider the restriction of a convex function to a compact convex set: Then, on that set, the function attains its constrained maximum only on the boundary. Such results, called "maximum principleMaximum principleIn mathematics, the maximum principle is a property of solutions to certain partial differential equations, of the elliptic and parabolic types. Roughly speaking, it says that the maximum of a function in a domain is to be found on the boundary of that domain...

s", are useful in the theory of harmonic functions, potential theoryPotential theoryIn mathematics and mathematical physics, potential theory may be defined as the study of harmonic functions.- Definition and comments :The term "potential theory" was coined in 19th-century physics, when it was realized that the fundamental forces of nature could be modeled using potentials which...

, and partial differential equationPartial differential equationIn mathematics, partial differential equations are a type of differential equation, i.e., a relation involving an unknown function of several independent variables and their partial derivatives with respect to those variables...

s.

Solving even close-to-convex problems can be computationally difficult. The problem of minimizing a quadraticQuadratic polynomialIn mathematics, a quadratic polynomial or quadratic is a polynomial of degree two, also called second-order polynomial. That means the exponents of the polynomial's variables are no larger than 2...

multivariate polynomial on a cubeCubeIn geometry, a cube is a three-dimensional solid object bounded by six square faces, facets or sides, with three meeting at each vertex. The cube can also be called a regular hexahedron and is one of the five Platonic solids. It is a special kind of square prism, of rectangular parallelepiped and...

is NP-hardNP-hardNP-hard , in computational complexity theory, is a class of problems that are, informally, "at least as hard as the hardest problems in NP". A problem H is NP-hard if and only if there is an NP-complete problem L that is polynomial time Turing-reducible to H...

. In fact, in the quadratic minimizationQuadratic programmingQuadratic programming is a special type of mathematical optimization problem. It is the problem of optimizing a quadratic function of several variables subject to linear constraints on these variables....

problem, if the matrix has only one negative eigenvalue, the problem is NP-hardNP-hardNP-hard , in computational complexity theory, is a class of problems that are, informally, "at least as hard as the hardest problems in NP". A problem H is NP-hard if and only if there is an NP-complete problem L that is polynomial time Turing-reducible to H...

.

Extensions

Advanced treatments consider convex functions that can attain positive infinity, also; the indicator function of convex analysis is zero for every and positive infinity otherwise.

and positive infinity otherwise.

Extensions of convex functions include pseudo-convex and quasi-convex functions. Partial extensions of the theory of convex analysis and iterative methods for approximately solving non-convex minimization problems occur in the field of generalized convexity ("abstract convex analysis").

Software

Although most general-purpose nonlinear equation solvers such as LSSOL, LOQO, MINOS, and Lancelot work well, many software packages dealing exclusively with convex minimization problems are also available:

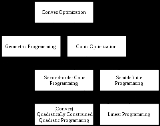

Convex programming languages

Convex minimization solvers

- MOSEK (commercial, stand-alone software and Matlab interface)

- solver.com (commercial)

- SeDuMi (GPLv2, Matlab package)

- SDPT3 (GPLv2, Matlab package)

- OBOE

See also

- Convex analysisConvex analysisConvex analysis is the branch of mathematics devoted to the study of properties of convex functions and convex sets, often with applications in convex minimization, a subdomain of optimization theory....

- Convex functionConvex functionIn mathematics, a real-valued function f defined on an interval is called convex if the graph of the function lies below the line segment joining any two points of the graph. Equivalently, a function is convex if its epigraph is a convex set...

- Convex setConvex setIn Euclidean space, an object is convex if for every pair of points within the object, every point on the straight line segment that joins them is also within the object...

- Interior-point method

- Karush–Kuhn–Tucker conditions

- Lagrange multiplier

- Linear programmingLinear programmingLinear programming is a mathematical method for determining a way to achieve the best outcome in a given mathematical model for some list of requirements represented as linear relationships...

- Optimization problemOptimization problemIn mathematics and computer science, an optimization problem is the problem of finding the best solution from all feasible solutions. Optimization problems can be divided into two categories depending on whether the variables are continuous or discrete. An optimization problem with discrete...

- Optimization theoryOptimization (mathematics)In mathematics, computational science, or management science, mathematical optimization refers to the selection of a best element from some set of available alternatives....

- Pseudoconvex functionPseudoconvex functionIn convex analysis and the calculus of variations, branches of mathematics, a pseudoconvex function is a function that behaves like a convex function with respect to finding its local minima, but need not actually be convex...

- Quadratic programmingQuadratic programmingQuadratic programming is a special type of mathematical optimization problem. It is the problem of optimizing a quadratic function of several variables subject to linear constraints on these variables....

- Quasiconvex functionQuasiconvex functionIn mathematics, a quasiconvex function is a real-valued function defined on an interval or on a convex subset of a real vector space such that the inverse image of any set of the form is a convex set...

(with convex lower level setLevel setIn mathematics, a level set of a real-valued function f of n variables is a set of the formthat is, a set where the function takes on a given constant value c....

s) - Semidefinite programmingSemidefinite programmingSemidefinite programming is a subfield of convex optimization concerned with the optimization of a linear objective functionover the intersection of the cone of positive semidefinite matrices with an affine space, i.e., a spectrahedron....

- Subgradient methodSubgradient methodSubgradient methods are iterative methods for solving convex minimization problems. Originally developed by Naum Z. Shor and others in the 1960s and 1970s, subgradient methods are convergent when applied even to a non-differentiable objective function...

External links

- Stephen Boyd and Lieven Vandenberghe, Convex optimization (book in pdf)

- EE364a: Convex Optimization I and EE364b: Convex Optimization II, Stanford course homepages

- 6.253: Convex Analysis and Optimization, an MIT OCW course homepage

- Haitham Hindi, A tutorial on convex optimization Written for engineers, this overview states (without proofs) many important results and has illustrations. It may merit consultation before turning to Boyd and Vandenberghe's detailed but accessible textbook.

- Haitham Hindi, A Tutorial on convex optimization II: Duality and interior-point methods

- Brian Borchers, An overview of software for convex optimization

- Least squares