Kernel (computing)

Computing

Computing is usually defined as the activity of using and improving computer hardware and software. It is the computer-specific part of information technology...

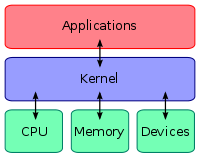

, the kernel is the main component of most computer operating system

Operating system

An operating system is a set of programs that manage computer hardware resources and provide common services for application software. The operating system is the most important type of system software in a computer system...

s; it is a bridge between applications and the actual data processing done at the hardware level. The kernel's responsibilities include managing the system's resources (the communication between hardware

Computer hardware

Personal computer hardware are component devices which are typically installed into or peripheral to a computer case to create a personal computer upon which system software is installed including a firmware interface such as a BIOS and an operating system which supports application software that...

and software

Computer software

Computer software, or just software, is a collection of computer programs and related data that provide the instructions for telling a computer what to do and how to do it....

components). Usually as a basic component of an operating system, a kernel can provide the lowest-level abstraction layer

Abstraction layer

An abstraction layer is a way of hiding the implementation details of a particular set of functionality...

for the resources (especially processors

Central processing unit

The central processing unit is the portion of a computer system that carries out the instructions of a computer program, to perform the basic arithmetical, logical, and input/output operations of the system. The CPU plays a role somewhat analogous to the brain in the computer. The term has been in...

and I/O devices

Input/output

In computing, input/output, or I/O, refers to the communication between an information processing system , and the outside world, possibly a human, or another information processing system. Inputs are the signals or data received by the system, and outputs are the signals or data sent from it...

) that application software must control to perform its function. It typically makes these facilities available to application

Application software

Application software, also known as an application or an "app", is computer software designed to help the user to perform specific tasks. Examples include enterprise software, accounting software, office suites, graphics software and media players. Many application programs deal principally with...

processes

Process (computing)

In computing, a process is an instance of a computer program that is being executed. It contains the program code and its current activity. Depending on the operating system , a process may be made up of multiple threads of execution that execute instructions concurrently.A computer program is a...

through inter-process communication

Inter-process communication

In computing, Inter-process communication is a set of methods for the exchange of data among multiple threads in one or more processes. Processes may be running on one or more computers connected by a network. IPC methods are divided into methods for message passing, synchronization, shared...

mechanisms and system call

System call

In computing, a system call is how a program requests a service from an operating system's kernel. This may include hardware related services , creating and executing new processes, and communicating with integral kernel services...

s.

Operating system tasks are done differently by different kernels, depending on their design and implementation. While monolithic kernels execute all the operating system code in the same address space to increase the performance of the system, microkernels run most of the operating system services in user space as servers, aiming to improve maintainability and modularity of the operating system. A range of possibilities exists between these two extremes.

Kernel basic facilities

The kernel's primary function is to manage the computer's resources and allow other programs to run and use these resources. Typically, the resources consist of:

- The central processing unit (CPU). This is the most central part of a computer system, responsible for running or executing programs on it. The kernel takes responsibility for deciding at any time which of the many running programs should be allocated to the processor or processors (each of which can usually run only one program at a time).

- The computer's memory. Memory is used to store both program instructions and data. Typically, both need to be present in memory in order for a program to execute. Often multiple programs will want access to memory, frequently demanding more memory than the computer has available. The kernel is responsible for deciding which memory each process can use, and determining what to do when not enough is available.

- Any input/output (I/O) devices present in the computer, such as keyboard, mouse, disk drives, printers, displays, etc. The kernel allocates requests from applications to perform I/O to an appropriate device (or subsection of a device, in the case of files on a disk or windows on a display) and provides convenient methods for using the device (typically abstracted to the point where the application does not need to know implementation details of the device).

Key aspects necessary in resource management are the definition of an execution domain (address space) and the protection mechanism used to mediate the accesses to the resources within a domain.

Kernels also usually provide methods for synchronization and communication between processes (called inter-process communication or IPC).

A kernel may implement these features itself, or rely on some of the processes it runs to provide the facilities to other processes, although in this case it must provide some means of IPC to allow processes to access the facilities provided by each other.

Finally, a kernel must provide running programs with a method to make requests to access these facilities.
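As a small illustration of kernel-provided IPC, the sketch below sends a message through a pipe, a channel that the kernel creates and buffers. It is a minimal sketch that assumes a short message (small enough to fit in the pipe buffer) and, for simplicity, uses both ends of the pipe from a single process; the function name `send_through_pipe` is hypothetical.

```python
import os

def send_through_pipe(message: bytes) -> bytes:
    """Write a short message into a kernel pipe and read it back out."""
    read_fd, write_fd = os.pipe()      # the kernel creates the channel
    try:
        os.write(write_fd, message)    # sender side: data is copied into the kernel
        return os.read(read_fd, len(message))  # receiver side: data is copied out
    finally:
        os.close(read_fd)
        os.close(write_fd)

reply = send_through_pipe(b"ping")
```

In a realistic setting the two file descriptors would be held by different processes, with the kernel mediating the transfer between them.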

Process management

The main task of a kernel is to allow the execution of applications and support them with features such as hardware abstractions. A process defines which memory portions the application can access. (For this introduction, process, application and program are used as synonyms.) Kernel process management must take into account the hardware built-in equipment for memory protection.

To run an application, a kernel typically sets up an address space for the application, loads the file containing the application's code into memory (perhaps via demand paging), sets up a stack for the program and branches to a given location inside the program, thus starting its execution.
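From the application's side, these steps are hidden behind a single request to start a program. The sketch below, which is only an illustration, asks the operating system to launch a child Python interpreter and collects its output; the helper name `run_child` is hypothetical, and it assumes `sys.executable` points at a usable interpreter.

```python
import subprocess
import sys

def run_child(code: str) -> str:
    """Start a new process running a Python snippet and return its output."""
    result = subprocess.run(
        [sys.executable, "-c", code],  # program to load and its arguments
        capture_output=True,           # collect what the child prints
        text=True,
        check=True,                    # raise if the child exits with an error
    )
    return result.stdout.strip()

answer = run_child("print(6 * 7)")
```

The address space setup, program loading, and stack creation described above all happen inside the kernel while `subprocess.run` waits.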

Multi-tasking kernels are able to give the user the illusion that the number of processes being run simultaneously on the computer is higher than the maximum number of processes the computer is physically able to run simultaneously. Typically, the number of processes a system may run simultaneously is equal to the number of CPUs installed (however, this may not be the case if the processors support simultaneous multithreading).

In a pre-emptive multitasking system, the kernel will give every program a slice of time and switch from process to process so quickly that it will appear to the user as if these processes were being executed simultaneously. The kernel uses scheduling algorithms to determine which process is running next and how much time it will be given. The algorithm chosen may allow for some processes to have higher priority than others. The kernel generally also provides these processes a way to communicate; this is known as inter-process communication (IPC) and the main approaches are shared memory, message passing and remote procedure calls (see concurrent computing).
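The time-slicing idea can be sketched with a round-robin simulation. Round-robin is only one possible scheduling algorithm, chosen here for simplicity; the process names, burst times, and quantum are made-up values, and priorities are ignored.

```python
from collections import deque

def round_robin(burst_times, quantum):
    """Simulate pre-emptive round-robin scheduling.

    burst_times maps a process name to the CPU time it still needs.
    Returns the list of (process, time_run) slices in execution order.
    """
    ready = deque(burst_times.items())  # ready queue of (name, remaining time)
    timeline = []
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)
        timeline.append((name, run))
        if remaining > run:
            # Time slice expired: pre-empt the process and requeue it at the back.
            ready.append((name, remaining - run))
    return timeline

schedule = round_robin({"A": 5, "B": 2, "C": 4}, quantum=2)
```

With these inputs the processes take turns in slices of at most two time units until each has received all the CPU time it asked for.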

Other systems (particularly on smaller, less powerful computers) may provide co-operative multitasking, where each process is allowed to run uninterrupted until it makes a special request that tells the kernel it may switch to another process. Such requests are known as "yielding", and typically occur in response to requests for interprocess communication, or for waiting for an event to occur. Older versions of Windows and Mac OS both used co-operative multitasking but switched to pre-emptive schemes as the power of the computers to which they were targeted grew.
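Co-operative multitasking can be imitated with Python generators, where `yield` plays the role of the "yielding" request. This is a toy model rather than how any particular kernel is built; the names `task` and `run_cooperative` are hypothetical.

```python
from collections import deque

def task(name, steps, log):
    """A co-operative task: does one unit of work, then yields voluntarily."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # hand control back to the scheduler

def run_cooperative(tasks):
    """Run generator-based tasks until all finish; no task is ever interrupted."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # run until the task's next yield
            ready.append(current)  # it yielded, so it rejoins the queue
        except StopIteration:
            pass                   # the task has finished

log = []
run_cooperative([task("A", 2, log), task("B", 3, log)])
```

A task that never yields would monopolize the processor in this model, which is the main weakness of the co-operative scheme.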

The operating system might also support multiprocessing (SMP or Non-Uniform Memory Access); in that case, different programs and threads may run on different processors. A kernel for such a system must be designed to be re-entrant, meaning that it may safely run two different parts of its code simultaneously. This typically means providing synchronization mechanisms (such as spinlocks) to ensure that no two processors attempt to modify the same data at the same time.
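The sketch below imitates a spinlock with Python threads. It is illustrative only: a real kernel would use an atomic hardware instruction, whereas here a non-blocking `threading.Lock.acquire` stands in for the atomic test-and-set step. The names `SpinLock` and `count_with_lock` are hypothetical.

```python
import threading

class SpinLock:
    """Toy spinlock: busy-waits in a loop instead of sleeping."""

    def __init__(self):
        # Stands in for an atomic test-and-set flag (an assumption of this sketch).
        self._flag = threading.Lock()

    def acquire(self):
        # Keep retrying the non-blocking attempt until it succeeds.
        while not self._flag.acquire(blocking=False):
            pass

    def release(self):
        self._flag.release()

def count_with_lock(n_threads, increments):
    """Increment a shared counter from several threads under the spinlock."""
    lock = SpinLock()
    counter = 0

    def worker():
        nonlocal counter
        for _ in range(increments):
            lock.acquire()
            try:
                counter += 1  # only one thread at a time modifies the data
            finally:
                lock.release()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter
```

Because every update happens while the lock is held, the final count equals the number of threads multiplied by the number of increments.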

Memory management

The kernel has full access to the system's memory and must allow processes to safely access this memory as they require it. Often the first step in doing this is virtual addressing, usually achieved by paging and/or segmentation. Virtual addressing allows the kernel to make a given physical address appear to be another address, the virtual address. Virtual address spaces may be different for different processes; the memory that one process accesses at a particular (virtual) address may be different memory from what another process accesses at the same address. This allows every program to behave as if it is the only one (apart from the kernel) running and thus prevents applications from crashing each other.
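A minimal sketch of paged address translation follows. The 4096-byte page size and the two page tables are assumptions made for the example; real hardware performs this lookup in the memory management unit rather than in software.

```python
PAGE_SIZE = 4096  # assumed page size in bytes

def translate(page_table, virtual_address):
    """Translate a virtual address using a per-process page table.

    page_table maps virtual page numbers to physical frame numbers.
    An unmapped page raises KeyError, standing in for a hardware fault.
    """
    page, offset = divmod(virtual_address, PAGE_SIZE)
    frame = page_table[page]
    return frame * PAGE_SIZE + offset

# Two processes with different mappings for the same virtual pages.
process_a = {0: 5, 1: 9}
process_b = {0: 2, 1: 7}

physical_a = translate(process_a, 0x1004)  # page 1, offset 4, frame 9
physical_b = translate(process_b, 0x1004)  # page 1, offset 4, frame 7
```

The same virtual address resolves to different physical memory for each process, which is what keeps their data separate.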

On many systems, a program's virtual address may refer to data which is not currently in memory. The layer of indirection provided by virtual addressing allows the operating system to use other data stores, like a hard drive, to store what would otherwise have to remain in main memory (RAM). As a result, operating systems can allow programs to use more memory than the system has physically available. When a program needs data which is not currently in RAM, the CPU signals to the kernel that this has happened, and the kernel responds by writing the contents of an inactive memory block to disk (if necessary) and replacing it with the data requested by the program. The program can then be resumed from the point where it was stopped. This scheme is generally known as demand paging.
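The fault-and-replace cycle can be sketched as a small simulation. Least-recently-used eviction is an assumption of this example (kernels use a variety of replacement policies), and the class name `DemandPager` is hypothetical. A page is written back to disk only if it was modified, matching the "if necessary" above.

```python
from collections import OrderedDict

class DemandPager:
    """Toy demand pager with least-recently-used eviction."""

    def __init__(self, num_frames):
        self.num_frames = num_frames
        self.resident = OrderedDict()  # page -> dirty flag, least recent first
        self.faults = 0
        self.writebacks = 0

    def access(self, page, write=False):
        if page in self.resident:
            # Hit: remember a write and mark the page as most recently used.
            self.resident[page] = self.resident[page] or write
            self.resident.move_to_end(page)
            return
        # Page fault: the page must be brought in from disk.
        self.faults += 1
        if len(self.resident) >= self.num_frames:
            _victim, dirty = self.resident.popitem(last=False)
            if dirty:
                self.writebacks += 1  # modified page is saved before reuse
        self.resident[page] = write

pager = DemandPager(num_frames=2)
for page in [1, 2, 1, 3, 2]:
    pager.access(page)
```

With two frames and the access sequence shown, only the second access to page 1 is served without a fault.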

Virtual addressing also allows creation of virtual partitions of memory in two disjoint areas, one being reserved for the kernel (kernel space) and the other for the applications (user space). The applications are not permitted by the processor to address kernel memory, thus preventing an application from damaging the running kernel. This fundamental partition of memory space has contributed much to current designs of actual general-purpose kernels and is almost universal in such systems, although some research kernels (e.g. Singularity) take other approaches.

Device management

To perform useful functions, processes need access to the peripherals connected to the computer, which are controlled by the kernel through device drivers. A device driver is a computer program that enables the operating system to interact with a hardware device. It provides the operating system with information on how to control and communicate with a certain piece of hardware. The driver is a vital component for any application program that uses the device. The design goal of a driver is abstraction: its function is to translate the OS-mandated function calls (programming calls) into device-specific calls. In theory, the device should work correctly with the suitable driver. Device drivers are written for hardware such as video cards, sound cards, printers, scanners, modems, and LAN cards. The common levels of abstraction of device drivers are:

1. On the hardware side:

- Interfacing directly.

- Using a high level interface (Video BIOS).

- Using a lower-level device driver (file drivers using disk drivers).

- Simulating work with hardware, while doing something entirely different.

2. On the software side:

- Allowing the operating system direct access to hardware resources.

- Implementing only primitives.

- Implementing an interface for non-driver software (Example: TWAIN).

- Implementing a language, sometimes high-level (Example: PostScript).

For example, to show the user something on the screen, an application would make a request to the kernel, which would forward the request to its display driver, which is then responsible for actually plotting the character/pixel.
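The request path in this example (application, kernel, display driver) can be sketched as follows. All class and method names are hypothetical, and the "display" is just a grid of characters held in memory.

```python
class DisplayDriver:
    """Hypothetical interface that the toy kernel expects from a display driver."""

    def put_char(self, x, y, ch):
        raise NotImplementedError

class TextBufferDriver(DisplayDriver):
    """Driver for an imaginary text-mode display backed by a character grid."""

    def __init__(self, width, height):
        self.rows = [[" "] * width for _ in range(height)]

    def put_char(self, x, y, ch):
        self.rows[y][x] = ch  # the device-specific operation

class ToyKernel:
    """Forwards application requests to whichever driver is registered."""

    def __init__(self):
        self.drivers = {}

    def register_driver(self, device, driver):
        self.drivers[device] = driver

    def write_text(self, device, x, y, text):
        driver = self.drivers[device]
        for i, ch in enumerate(text):
            driver.put_char(x + i, y, ch)

kernel = ToyKernel()
display = TextBufferDriver(width=8, height=2)
kernel.register_driver("display", display)
kernel.write_text("display", 1, 0, "hi")  # the application's request
```

The application only names the device and the text; how a character reaches the screen is left entirely to the driver.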

A kernel must maintain a list of available devices. This list may be known in advance (e.g. on an embedded system where the kernel will be rewritten if the available hardware changes), configured by the user (typical on older PCs and on systems that are not designed for personal use) or detected by the operating system at run time (normally called plug and play). In a plug and play system, a device manager first performs a scan on different hardware buses, such as Peripheral Component Interconnect (PCI) or Universal Serial Bus (USB), to detect installed devices, then searches for the appropriate drivers.

As device management is a very OS-specific topic, these drivers are handled differently by each kind of kernel design, but in every case, the kernel has to provide the I/O to allow drivers to physically access their devices through some port or memory location. Very important decisions have to be made when designing the device management system, as in some designs accesses may involve context switches, making the operation very CPU-intensive and easily causing a significant performance overhead.

System calls

A system call is a mechanism used by an application program to request a service from the operating system. System calls use a machine-code instruction that causes the processor to change mode, for example from user mode to supervisor mode. This is where the operating system performs actions like accessing hardware devices or the memory management unit. Generally the operating system provides a library that sits between the operating system and normal programs, usually a C library such as glibc, or the Windows API. The library handles the low-level details of passing information to the kernel and switching to supervisor mode. System calls include close, open, read, wait and write.

To actually perform useful work, a process must be able to access the services provided by the kernel. This is implemented differently by each kernel, but most provide a C library or an API, which in turn invokes the related kernel functions.
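Python's `os` module exposes thin wrappers around several of the calls named above, so the library layer can be seen in a few lines. This is a sketch: the helper name `write_then_read` is hypothetical, and it assumes the data is small enough to be written and read in a single call.

```python
import os
import tempfile

def write_then_read(data: bytes) -> bytes:
    """Store bytes in a temporary file and read them back using
    the low-level open, write, read and close wrappers."""
    fd, path = tempfile.mkstemp()  # creates and opens a new file
    try:
        try:
            os.write(fd, data)
        finally:
            os.close(fd)
        fd = os.open(path, os.O_RDONLY)
        try:
            return os.read(fd, len(data))
        finally:
            os.close(fd)
    finally:
        os.remove(path)  # clean up the temporary file

contents = write_then_read(b"hello kernel")
```

Each `os` function here hands its arguments to the operating system's library, which performs the actual switch into the kernel.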

The method of invoking the kernel function varies from kernel to kernel. If memory isolation is in use, it is impossible for a user process to call the kernel directly, because that would be a violation of the processor's access control rules. A few possibilities are:

- Using a software-simulated interrupt. This method is available on most hardware, and is therefore very common.

- Using a call gate. A call gate is a special address stored by the kernel in a list in kernel memory at a location known to the processor. When the processor detects a call to that address, it instead redirects to the target location without causing an access violation. This requires hardware support, but the hardware for it is quite common.

- Using a special system call instruction. This technique requires special hardware support, which common architectures (notably, x86) may lack. System call instructions have been added to recent models of x86 processors, however, and some operating systems for PCs make use of them when available.

- Using a memory-based queue. An application that makes large numbers of requests but does not need to wait for the result of each may add details of requests to an area of memory that the kernel periodically scans to find requests.
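The last of these options, the memory-based queue, can be sketched as a toy model. The shared memory area is represented by an ordinary in-process queue, and the class name, operation names, and handlers are all hypothetical.

```python
from collections import deque

class RequestQueue:
    """Toy model of a request area shared between an application and the kernel."""

    def __init__(self):
        self.pending = deque()  # requests written by the application
        self.completed = []     # results filled in by the kernel

    def submit(self, op, *args):
        """Application side: record a request and return immediately."""
        self.pending.append((op, args))

    def kernel_poll(self, handlers):
        """Kernel side: scan the queue and service every pending request."""
        serviced = 0
        while self.pending:
            op, args = self.pending.popleft()
            self.completed.append(handlers[op](*args))
            serviced += 1
        return serviced

queue = RequestQueue()
queue.submit("add", 1, 2)
queue.submit("upper", "a")
handled = queue.kernel_poll({"add": lambda a, b: a + b, "upper": str.upper})
```

The application never waits inside `submit`; it pays the cost of entering the kernel once per scan rather than once per request.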

Issues of kernel support for protection

An important consideration in the design of a kernel is the support it provides for protection from faults (fault tolerance) and from malicious behaviors (security). These two aspects are usually not clearly distinguished, and the adoption of this distinction in the kernel design leads to the rejection of a hierarchical structure for protection.

The mechanisms or policies provided by the kernel can be classified according to several criteria, such as: static (enforced at compile time) or dynamic (enforced at run time); preemptive or post-detection; according to the protection principles they satisfy (e.g. Denning); whether they are hardware supported or language based; whether they are more an open mechanism or a binding policy; and many more.

Support for hierarchical protection domains is typically that of "CPU modes." An efficient and simple way to provide hardware support of capabilities is to delegate to the MMU the responsibility of checking access rights for every memory access, a mechanism called capability-based addressing. Most commercial computer architectures lack MMU support for capabilities.

An alternative approach is to simulate capabilities using commonly-supported hierarchical domains; in this approach, each protected object must reside in an address space that the application does not have access to; the kernel also maintains a list of capabilities in such memory. When an application needs to access an object protected by a capability, it performs a system call and the kernel performs the access for it. The performance cost of address space switching limits the practicality of this approach in systems with complex interactions between objects, but it is used in current operating systems for objects that are not accessed frequently or which are not expected to perform quickly.
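This simulated-capability approach can be sketched as follows. It is a toy model in which private attributes stand in for memory the application cannot reach; the class name, method names, and the "read"/"write" rights are hypothetical.

```python
class CapabilityKernel:
    """Toy kernel that keeps protected objects and capability lists to itself."""

    def __init__(self):
        self._objects = {}  # protected objects, by name
        self._caps = {}     # process id -> {object name: set of rights}

    def create_object(self, name, value):
        self._objects[name] = value

    def grant(self, pid, name, rights):
        self._caps.setdefault(pid, {})[name] = set(rights)

    def _check(self, pid, name, right):
        if right not in self._caps.get(pid, {}).get(name, set()):
            raise PermissionError(f"process {pid} lacks '{right}' on {name}")

    def sys_read(self, pid, name):
        """System call: the kernel performs the read on the caller's behalf."""
        self._check(pid, name, "read")
        return self._objects[name]

    def sys_write(self, pid, name, value):
        """System call: the kernel performs the write on the caller's behalf."""
        self._check(pid, name, "write")
        self._objects[name] = value

kernel = CapabilityKernel()
kernel.create_object("log", "boot ok")
kernel.grant(pid=1, name="log", rights={"read"})
value = kernel.sys_read(1, "log")
```

Every access costs a trip through the kernel, which is the performance limitation the paragraph above describes.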

Approaches where protection mechanisms are not firmware supported but are instead simulated at higher levels (e.g. simulating capabilities by manipulating page tables on hardware that does not have direct support) are possible, but there are performance implications. Lack of hardware support may not be an issue, however, for systems that choose to use language-based protection.

An important kernel design decision is the choice of the abstraction levels where the security mechanisms and policies should be implemented. Kernel security mechanisms play a critical role in supporting security at higher levels.

One approach is to use firmware and kernel support for fault tolerance (see above), and build the security policy for malicious behavior on top of that (adding features such as cryptography

Cryptography

Cryptography is the practice and study of techniques for secure communication in the presence of third parties...

mechanisms where necessary), delegating some responsibility to the compiler

Compiler

A compiler is a computer program that transforms source code written in a programming language into another computer language...

. Approaches that delegate enforcement of security policy to the compiler and/or the application level are often called language-based security.

The lack of many critical security mechanisms in current mainstream operating systems impedes the implementation of adequate security policies at the application abstraction level. In fact, a common misconception in computer security is that any security policy can be implemented in an application regardless of kernel support.

Hardware-based protection or language-based protection

Typical computer systems today use hardware-enforced rules about what programs are allowed to access what data. The processor monitors the execution and stops a program that violates a rule (e.g., a user process that is about to read or write to kernel memory). In systems that lack support for capabilities, processes are isolated from each other by using separate address spaces. Calls from user processes into the kernel are regulated by requiring them to use one of the above-described system call methods.

An alternative approach is to use language-based protection. In a language-based protection system

Language-based system

A language-based system is a type of operating system that uses language features to provide security, instead of or in addition to hardware mechanisms. In such systems, code referred to as the trusted base is responsible for approving programs for execution, assuring they cannot perform operations...

, the kernel will only allow code to execute that has been produced by a trusted language compiler

Compiler

A compiler is a computer program that transforms source code written in a programming language into another computer language...

. The language may then be designed such that it is impossible for the programmer to instruct it to do something that will violate a security requirement.

Advantages of this approach include:

- No need for separate address spaces. Switching between address spaces is a slow operation that causes a great deal of overhead, and a lot of optimization work is currently performed in order to prevent unnecessary switches in current operating systems. Switching is completely unnecessary in a language-based protection system, as all code can safely operate in the same address space.

- Flexibility. Any protection scheme that can be designed to be expressed via a programming language can be implemented using this method. Changes to the protection scheme (e.g. from a hierarchical system to a capability-based one) do not require new hardware.

Disadvantages include:

- Longer application start-up time. Applications must be verified when they are started to ensure they have been compiled by the correct compiler, or may need recompiling either from source code or from bytecode.

- Inflexible type systems. On traditional systems, applications frequently perform operations that are not type safe. Such operations cannot be permitted in a language-based protection system, which means that applications may need to be rewritten and may, in some cases, lose performance.

Examples of systems with language-based protection include JX

JX (operating system)

JX is a microkernel operating system with both the kernel and applications implemented using the Java programming language.- Overview :JX is implemented as an extended Java Virtual Machine , adding support to the Java system for necessary features such as protection domains and hardware access,...

and Microsoft

Microsoft

Microsoft Corporation is an American public multinational corporation headquartered in Redmond, Washington, USA that develops, manufactures, licenses, and supports a wide range of products and services predominantly related to computing through its various product divisions...

's Singularity

Singularity (operating system)

Singularity is an experimental operating system being built by Microsoft Research since 2003. It is intended as a highly-dependable OS in which the kernel, device drivers, and applications are all written in managed code.- Workings :...

.

Process cooperation

Edsger Dijkstra

Edsger Dijkstra

Edsger Wybe Dijkstra was a Dutch computer scientist. He received the 1972 Turing Award for fundamental contributions to developing programming languages, and was the Schlumberger Centennial Chair of Computer Sciences at The University of Texas at Austin from 1984 until 2000. Shortly before his...

proved that from a logical point of view, atomic lock

Lock (computer science)

In computer science, a lock is a synchronization mechanism for enforcing limits on access to a resource in an environment where there are many threads of execution. Locks are one way of enforcing concurrency control policies.-Types:...

and unlock operations operating on binary semaphores

Semaphore (programming)

In computer science, a semaphore is a variable or abstract data type that provides a simple but useful abstraction for controlling access by multiple processes to a common resource in a parallel programming environment....

are sufficient primitives to express any functionality of process cooperation. However, this approach is generally held to be lacking in terms of safety and efficiency, whereas a message passing

Message passing

Message passing in computer science is a form of communication used in parallel computing, object-oriented programming, and interprocess communication. In this model, processes or objects can send and receive messages to other processes...

approach is more flexible.
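The two styles of process cooperation contrasted above can be compared on a small shared-counter problem. The sketch below is an illustration only: it uses Python threads in place of processes, and the function names are made up for the example. The first version guards the counter with a binary semaphore (lock and unlock); the second gives the counter a single owner that receives increments as messages.

```python
import queue
import threading


def run_with_semaphore(num_threads=4, iterations=1000):
    """Mutual exclusion with a binary semaphore: 1 = unlocked, 0 = locked."""
    mutex = threading.Semaphore(1)
    total = 0

    def worker():
        nonlocal total
        for _ in range(iterations):
            mutex.acquire()   # "lock"
            total += 1        # critical section
            mutex.release()   # "unlock"

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def run_with_messages(num_threads=4, iterations=1000):
    """Message passing: only the owner thread ever touches the counter."""
    inbox = queue.Queue()
    total = 0

    def owner():
        nonlocal total
        while True:
            message = inbox.get()
            if message is None:   # sentinel: no more messages
                break
            total += message

    def sender():
        for _ in range(iterations):
            inbox.put(1)

    owner_thread = threading.Thread(target=owner)
    owner_thread.start()
    senders = [threading.Thread(target=sender) for _ in range(num_threads)]
    for t in senders:
        t.start()
    for t in senders:
        t.join()
    inbox.put(None)
    owner_thread.join()
    return total
```

In the semaphore version, forgetting a single `release()` would block every other worker; in the message version there is no shared state to protect, at the cost of routing every update through a queue.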

I/O devices management

The idea of a kernel where I/O devices are handled uniformly with other processes, as parallel co-operating processes, was first proposed and implemented by Brinch Hansen (although similar ideas were suggested in 1967). In Hansen's description of this, the "common" processes are called internal processes, while the I/O devices are called external processes.

As with physical memory, allowing applications direct access to controller ports and registers can cause the controller to malfunction or the system to crash. In addition, some devices are surprisingly complex to program and use several different controllers. Because of this, providing a more abstract interface to manage the device is important. This interface is normally provided by a device driver or a hardware abstraction layer.

Frequently, applications will require access to these devices. The kernel must maintain the list of these devices by querying the system for them in some way. This can be done through the BIOS, or through one of the various system buses (such as PCI/PCIe or USB). When an application requests an operation on a device (such as displaying a character), the kernel needs to send this request to the currently active video driver. The video driver, in turn, needs to carry out this request. This is an example of inter-process communication (IPC).
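The request path just described (application, then kernel, then driver) can be modelled roughly as follows. This is a deliberately simplified sketch: the class names, the `put_char` operation, and the idea of registering drivers by device class are assumptions for illustration, and a real kernel would discover devices by probing buses rather than by explicit registration.

```python
class VideoDriver:
    """Hypothetical driver that hides the details of the display hardware."""

    def __init__(self):
        self.screen = []

    def handle(self, operation, payload):
        if operation != "put_char":
            raise ValueError(f"unsupported operation: {operation}")
        self.screen.append(payload)
        return True


class Kernel:
    """Keeps the list of known devices and forwards requests to drivers."""

    def __init__(self):
        self._drivers = {}

    def register_driver(self, device_class, driver):
        self._drivers[device_class] = driver

    def devices(self):
        return sorted(self._drivers)

    def request(self, device_class, operation, payload):
        # Applications never touch the device; the kernel routes the request.
        driver = self._drivers.get(device_class)
        if driver is None:
            raise LookupError(f"no driver for device class '{device_class}'")
        return driver.handle(operation, payload)
```

An application would call something like `kernel.request("video", "put_char", "A")` and never see the controller's ports or registers.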

Kernel-wide design approaches

Naturally, the above listed tasks and features can be provided in many ways that differ from each other in design and implementation.

The principle of separation of mechanism and policy

Separation of mechanism and policy

The separation of mechanism and policy is a design principle in computer science. It states that mechanisms should not dictate the policies according to which decisions are made about which operations to authorize, and which resources to...

is the substantial difference between the philosophy of micro and monolithic kernels. Here a mechanism is the support that allows the implementation of many different policies, while a policy is a particular "mode of operation". For instance, a mechanism may provide for user log-in attempts to call an authorization server to determine whether access should be granted; a policy may be for the authorization server to request a password and check it against an encrypted password stored in a database. Because the mechanism is generic, the policy could more easily be changed (e.g. by requiring the use of a security token

Security token

A security token may be a physical device that an authorized user of computer services is given to ease authentication...

) than if the mechanism and policy were integrated in the same module.
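The log-in example above can be sketched as code. Everything here is hypothetical and chosen only to illustrate the principle: `LoginMechanism` is the generic mechanism, and the two factory functions build interchangeable policies. The unsalted SHA-256 digest is used purely to keep the example short and should not be read as a recommendation for storing passwords.

```python
import hashlib
from typing import Callable, Dict

# A policy decides whether a (user, credential) pair is acceptable.
Policy = Callable[[str, str], bool]


class LoginMechanism:
    """Mechanism: forwards every log-in attempt to whatever policy is installed."""

    def __init__(self, policy: Policy):
        self._policy = policy

    def set_policy(self, policy: Policy) -> None:
        self._policy = policy

    def attempt_login(self, user: str, credential: str) -> bool:
        return self._policy(user, credential)


def make_password_policy(stored_digests: Dict[str, str]) -> Policy:
    """Policy 1: compare a digest of the password with a stored digest."""
    def policy(user: str, credential: str) -> bool:
        digest = hashlib.sha256(credential.encode()).hexdigest()
        return stored_digests.get(user) == digest
    return policy


def make_token_policy(valid_tokens: Dict[str, str]) -> Policy:
    """Policy 2: require the security token issued to the user."""
    def policy(user: str, credential: str) -> bool:
        return valid_tokens.get(user) == credential
    return policy
```

Switching from passwords to tokens is a single `set_policy` call; the mechanism itself is untouched, which is the property the paragraph above argues for.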

In a minimal microkernel only some very basic policies are included, and its mechanisms allow whatever is running on top of the kernel (the remaining part of the operating system and the other applications) to decide which policies to adopt (such as memory management, high-level process scheduling, and file system management). A monolithic kernel instead tends to include many policies, therefore restricting the rest of the system to rely on them.

Per Brinch Hansen

Per Brinch Hansen

Per Brinch Hansen was a Danish-American computer scientist known for concurrent programming theory.-Biography:He was born in Frederiksberg, in Copenhagen, Denmark....

presented cogent arguments in favor of separation of mechanism and policy. The failure to properly fulfill this separation is one of the major causes of the lack of substantial innovation in existing operating systems, a problem common in computer architecture. The monolithic design is induced by the "kernel mode"/"user mode" architectural approach to protection (technically called hierarchical protection domains), which is common in conventional commercial systems; as a result, every module needing protection is preferably included in the kernel. This link between monolithic design and "privileged mode" can be traced back to the key issue of mechanism-policy separation: the "privileged mode" architectural approach fuses the protection mechanism with the security policies, while the major alternative architectural approach, capability-based addressing

Capability-based addressing

In computer science, capability-based addressing is a scheme used by some computers to control access to memory. Under a capability-based addressing scheme, pointers are replaced by protected objects that can only be created through the use of privileged instructions which may only be executed by...

, clearly distinguishes between the two, leading naturally to a microkernel design (see Separation of protection and security

Separation of protection and security

In computer sciences the separation of protection and security is a design choice. Wulf et al. identified protection as a mechanism and security as a policy, therefore making the protection-security distinction a particular case of the separation of mechanism and policy principle.- Overview :The...

).

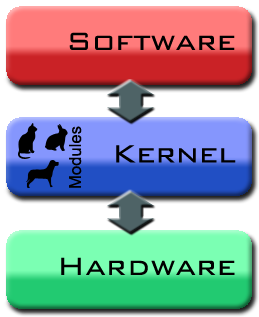

While monolithic kernel

Monolithic kernel

A monolithic kernel is an operating system architecture where the entire operating system is working in the kernel space and alone as supervisor mode...

s execute all of their code in the same address space (kernel space), microkernel

Microkernel

In computer science, a microkernel is the near-minimum amount of software that can provide the mechanisms needed to implement an operating system . These mechanisms include low-level address space management, thread management, and inter-process communication...

s try to run most of their services in user space, aiming to improve maintainability and modularity of the codebase. Most kernels do not fit exactly into one of these categories, but are rather found in between these two designs. These are called hybrid kernel

Hybrid kernel

A hybrid kernel is a kernel architecture based on combining aspects of microkernel and monolithic kernel architectures used in computer operating systems. The category is controversial due to the similarity to monolithic kernel; the term has been dismissed by Linus Torvalds as simple marketing...

s. More exotic designs such as nanokernels and exokernel

Exokernel

Exokernel is an operating system kernel developed by the MIT Parallel and Distributed Operating Systems group, and also a class of similar operating systems....

s are available, but are seldom used for production systems. The Xen

Xen

Xen is a virtual-machine monitor providing services that allow multiple computer operating systems to execute on the same computer hardware concurrently....

hypervisor, for example, is an exokernel.

Monolithic kernels

In a monolithic kernel, all OS services run along with the main kernel thread, thus also residing in the same memory area. This approach provides rich and powerful hardware access. Some developers, such as UNIX developer Ken Thompson, maintain that it is "easier to implement a monolithic kernel" than microkernels. The main disadvantages of monolithic kernels are the dependencies between system components (a bug in a device driver might crash the entire system) and the fact that large kernels can become very difficult to maintain.

Monolithic kernels, which have traditionally been used by Unix-like operating systems, contain all the operating system core functions and the device drivers (small programs that allow the operating system to interact with hardware devices, such as disk drives, video cards and printers). This is the traditional design of UNIX systems. A monolithic kernel is one single program that contains all of the code necessary to perform every kernel-related task. Every part that is to be accessed by most programs and cannot be put in a library is in the kernel space: device drivers, scheduler, memory handling, file systems, and network stacks. Many system calls are provided to applications, to allow them to access all those services.

A monolithic kernel, while initially loaded with subsystems that may not be needed, can be tuned to a point where it is as fast as or faster than one that was specifically designed for the hardware, although more in a general sense. Modern monolithic kernels, such as those of Linux and FreeBSD, both of which fall into the category of Unix-like operating systems, feature the ability to load modules at runtime, thereby allowing easy extension of the kernel's capabilities as required, while helping to minimize the amount of code running in kernel space. In the monolithic kernel, some advantages hinge on these points:

- Since there is less software involved it is faster.

- As it is one single piece of software it should be smaller both in source and compiled forms.

- Less code generally means fewer bugs which can translate to fewer security problems.

Most work in the monolithic kernel is done via system calls. These are interfaces, usually kept in a tabular structure, that access some subsystem within the kernel such as disk operations. Essentially, calls are made within programs and a checked copy of the request is passed through the system call, so the request does not have far to travel. The monolithic Linux kernel can be made extremely small not only because of its ability to dynamically load modules but also because of its ease of customization. In fact, there are some versions that are small enough to fit together with a large number of utilities and other programs on a single floppy disk and still provide a fully functional operating system (one of the most popular of which is muLinux). This ability to miniaturize its kernel has also led to a rapid growth in the use of Linux in embedded systems (i.e., computer circuitry built into other products).
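The tabular system-call structure mentioned above, together with the "checked copy" of the request, can be illustrated with a toy dispatcher. The call numbers, handler names, and in-memory "file system" below are invented for the example and do not correspond to any real kernel's system-call table.

```python
import copy

SYS_WRITE = 0
SYS_READ = 1


class ToyKernel:
    def __init__(self):
        self._files = {}
        # System-call table: call number -> handler inside the kernel.
        self._syscall_table = {
            SYS_WRITE: self._sys_write,
            SYS_READ: self._sys_read,
        }

    def syscall(self, number, *args):
        handler = self._syscall_table.get(number)
        if handler is None:
            raise OSError(f"invalid system call number {number}")
        # Work on a private copy so the caller cannot change the request
        # after it has been checked.
        return handler(*copy.deepcopy(args))

    def _sys_write(self, name, data):
        if not isinstance(name, str) or not isinstance(data, (bytes, bytearray)):
            raise TypeError("write expects (str, bytes)")
        self._files[name] = bytes(data)
        return len(data)

    def _sys_read(self, name):
        if name not in self._files:
            raise FileNotFoundError(name)
        return self._files[name]
```

Because the handler runs in the same address space as the rest of the kernel, the dispatch is a table lookup followed by an ordinary function call.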

These types of kernels consist of the core functions of the operating system and the device drivers with the ability to load modules at runtime. They provide rich and powerful abstractions of the underlying hardware, in contrast to microkernels, which provide a small set of simple hardware abstractions and use applications called servers to provide more functionality. The monolithic approach defines a high-level virtual interface over the hardware, with a set of system calls to implement operating system services such as process management, concurrency and memory management in several modules that run in supervisor mode.

This design has several flaws and limitations:

- Coding in kernel space is hard: common libraries (like a full-featured libc) cannot be used, debugging is harder (it is hard to use a source-level debugger like gdb), and rebooting the computer is often needed. This is not just a problem of convenience to the developers: as debugging becomes harder, it becomes more likely that the code is "buggier".

- Bugs in one part of the kernel have strong side effects. Since every function in the kernel has all the privileges, a bug in one function can corrupt a data structure of another, totally unrelated part of the kernel, or of any running program.

- Kernels often become very large and difficult to maintain.

- Even if the modules servicing these operations are separate from the whole, the code integration is tight and difficult to do correctly.

- Since the modules run in the same address space, a bug can bring down the entire system.

- Another disadvantage cited for monolithic kernels is that they are not portable; that is, they must be rewritten for each new architecture that the operating system is to be used on.

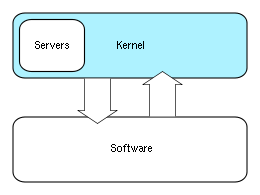

Microkernels

Microkernel (also abbreviated μK or uK) is the term describing an approach to operating system design by which the functionality of the system is moved out of the traditional "kernel", into a set of "servers" that communicate through a "minimal" kernel, leaving as little as possible in "system space" and as much as possible in "user space". A microkernel that is designed for a specific platform or device is only ever going to have what it needs to operate. The microkernel approach consists of defining a simple abstraction over the hardware, with a set of primitives or system call

System call

In computing, a system call is how a program requests a service from an operating system's kernel. This may include hardware related services, creating and executing new processes, and communicating with integral kernel services...

s to implement minimal OS services such as memory management

Memory management

Memory management is the act of managing computer memory. The essential requirement of memory management is to provide ways to dynamically allocate portions of memory to programs at their request, and freeing it for reuse when no longer needed. This is critical to the computer system.Several...

, multitasking

Computer multitasking

In computing, multitasking is a method where multiple tasks, also known as processes, share common processing resources such as a CPU. In the case of a computer with a single CPU, only one task is said to be running at any point in time, meaning that the CPU is actively executing instructions for...

, and inter-process communication

Inter-process communication

In computing, Inter-process communication is a set of methods for the exchange of data among multiple threads in one or more processes. Processes may be running on one or more computers connected by a network. IPC methods are divided into methods for message passing, synchronization, shared...

. Other services, including those normally provided by the kernel, such as networking, are implemented in user-space programs, referred to as servers. Microkernels are easier to maintain than monolithic kernels, but the large number of system calls and context switch

Context switch

A context switch is the computing process of storing and restoring the state of a CPU so that execution can be resumed from the same point at a later time. This enables multiple processes to share a single CPU. The context switch is an essential feature of a multitasking operating system...

es might slow down the system because they typically generate more overhead than plain function calls.

Only parts which really require being in a privileged mode are in kernel space: IPC (inter-process communication), a basic scheduler or scheduling primitives, basic memory handling, and basic I/O primitives. Many critical parts now run in user space: the complete scheduler, memory handling, file systems, and network stacks. Microkernels were invented as a reaction to traditional "monolithic" kernel design, whereby all system functionality was put in one static program running in a special "system" mode of the processor.

In the microkernel, only the most fundamental tasks are performed, such as being able to access some (not necessarily all) of the hardware, manage memory and coordinate message passing between the processes. Some systems that use microkernels are QNX and the HURD. In the case of QNX and HURD, user sessions can be entire snapshots of the system itself, or "views" as they are called. The very essence of the microkernel architecture illustrates some of its advantages:

- Maintenance is generally easier.

- Patches can be tested in a separate instance, and then swapped in to take over a production instance.

- Rapid development time and new software can be tested without having to reboot the kernel.

- More persistence in general: if one instance goes haywire, it is often possible to substitute it with an operational mirror.

Most microkernels use a message passing system of some sort to handle requests from one server to another. The message passing system generally operates on a port basis with the microkernel. As an example, if a request for more memory is sent, a port is opened with the microkernel and the request sent through. Once within the microkernel, the steps are similar to system calls.
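The port-based request for more memory described above might look roughly like this in miniature. The sketch is an assumption-laden model rather than the design of QNX, Hurd, or any other system: ports are modelled as thread-safe queues, the memory server is an ordinary thread standing in for a user-space server, and all names are hypothetical.

```python
import queue
import threading


class Microkernel:
    """Only job here: own the ports and move messages between them."""

    def __init__(self):
        self._ports = {}

    def open_port(self, name):
        self._ports[name] = queue.Queue()

    def send(self, port, message):
        self._ports[port].put(message)

    def receive(self, port):
        return self._ports[port].get()


def memory_server(kernel, port, total_pages):
    """User-space server: grants pages until told to stop (None message)."""
    free = total_pages
    while True:
        message = kernel.receive(port)
        if message is None:
            break
        pages, reply_port = message
        granted = min(pages, free)
        free -= granted
        kernel.send(reply_port, granted)


def demo():
    kernel = Microkernel()
    kernel.open_port("memory")
    kernel.open_port("app")
    server = threading.Thread(target=memory_server, args=(kernel, "memory", 4))
    server.start()
    grants = []
    for pages in (3, 3):
        kernel.send("memory", (pages, "app"))   # request goes through a port
        grants.append(kernel.receive("app"))    # reply comes back the same way
    kernel.send("memory", None)
    server.join()
    return grants
```

Each request costs two message transfers (request and reply), which gives a feel for the extra hops that a direct in-kernel function call avoids.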

The rationale was that it would bring modularity in the system architecture, which would entail a cleaner system that is easier to debug or dynamically modify, customizable to users' needs, and better performing. Microkernels are part of operating systems such as AIX, BeOS, Hurd, Mach, Mac OS X, MINIX, and QNX. Although microkernels are very small by themselves, in combination with all their required auxiliary code they are, in fact, often larger than monolithic kernels. Advocates of monolithic kernels also point out that the two-tiered structure of microkernel systems, in which most of the operating system does not interact directly with the hardware, creates a not-insignificant cost in terms of system efficiency.

These types of kernels normally provide only minimal services such as defining memory address spaces, inter-process communication (IPC) and process management. Other functions, such as running the hardware processes, are not handled directly by microkernels. Proponents of microkernels point out that monolithic kernels have the disadvantage that an error in the kernel can cause the entire system to crash. However, with a microkernel, if a kernel process crashes, it is still possible to prevent a crash of the system as a whole by merely restarting the service that caused the error. Although this sounds sensible, it is questionable how important it is in reality, because operating systems with monolithic kernels such as Linux have become extremely stable and can run for years without crashing.

Other services provided by the kernel, such as networking, are implemented in user-space programs referred to as servers. Servers allow the operating system to be modified by simply starting and stopping programs. For a machine without networking support, for instance, the networking server is not started. The task of moving in and out of the kernel to move data between the various applications and servers creates overhead which is detrimental to the efficiency of microkernels in comparison with monolithic kernels.

The microkernel approach also has disadvantages, however. Some are:

- Larger running memory footprint.

- More software for interfacing is required, so there is a potential for performance loss.

- Messaging bugs can be harder to fix due to the longer trip they have to take versus the one-off copy in a monolithic kernel.

- Process management in general can be very complicated.

- The disadvantages of microkernels are extremely context-dependent. As an example, they work well for small single-purpose (and critical) systems because if not many processes need to run, then the complications of process management are effectively mitigated.

A microkernel allows the implementation of the remaining part of the operating system as a normal application program written in a high-level language, and the use of different operating systems on top of the same unchanged kernel. It is also possible to dynamically switch among operating systems and to have more than one active simultaneously.

Monolithic kernels vs. microkernels

As the computer kernel grows, a number of problems become evident. One of the most obvious is that the memory footprint

Memory footprint

Memory footprint refers to the amount of main memory that a program uses or references while running. This includes all sorts of active memory regions like code, static data sections, heap, as well as all the stacks, plus memory required to hold any additional data structures, such as symbol...

increases. This is mitigated to some degree by perfecting the virtual memory

Virtual memory

In computing, virtual memory is a memory management technique developed for multitasking kernels. This technique virtualizes a computer architecture's various forms of computer data storage , allowing a program to be designed as though there is only one kind of memory, "virtual" memory, which...

system, but not all computer architecture

Computer architecture

In computer science and engineering, computer architecture is the practical art of selecting and interconnecting hardware components to create computers that meet functional, performance and cost goals and the formal modelling of those systems....

s have virtual memory support. To reduce the kernel's footprint, extensive editing has to be performed to carefully remove unneeded code, which can be very difficult with non-obvious interdependencies between parts of a kernel with millions of lines of code.

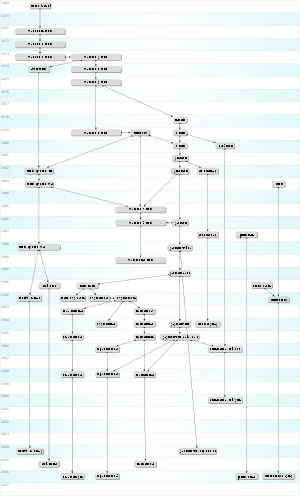

By the early 1990s, due to the various shortcomings of monolithic kernels versus microkernels, monolithic kernels were considered obsolete by virtually all operating system researchers. As a result, the design of Linux as a monolithic kernel rather than a microkernel was the topic of a famous debate between Linus Torvalds

Linus Torvalds

Linus Benedict Torvalds is a Finnish software engineer and hacker, best known for having initiated the development of the open source Linux kernel. He later became the chief architect of the Linux kernel, and now acts as the project's coordinator...

and Andrew Tanenbaum

Andrew S. Tanenbaum

Andrew Stuart "Andy" Tanenbaum is a professor of computer science at the Vrije Universiteit, Amsterdam in the Netherlands. He is best known as the author of MINIX, a free Unix-like operating system for teaching purposes, and for his computer science textbooks, regarded as standard texts in the...

. There is merit on both sides of the argument presented in the Tanenbaum–Torvalds debate.

Performance

Monolithic kernel

Monolithic kernel

A monolithic kernel is an operating system architecture where the entire operating system is working in the kernel space and alone as supervisor mode...

s are designed to have all of their code in the same address space (kernel space), which some developers argue is necessary to increase the performance of the system. Some developers also maintain that monolithic systems are extremely efficient if well-written. The monolithic model tends to be more efficient through the use of shared kernel memory, rather than the slower IPC system of microkernel designs, which is typically based on message passing

Message passing

Message passing in computer science is a form of communication used in parallel computing, object-oriented programming, and interprocess communication. In this model, processes or objects can send and receive messages to other processes...

.

The performance of microkernels constructed in the 1980s and early 1990s was poor. Studies that empirically measured the performance of these microkernels did not analyze the reasons for such inefficiency. The explanations for these data were left to "folklore", with the assumption that they were due to the increased frequency of switches from "kernel-mode" to "user-mode", to the increased frequency of inter-process communication

Inter-process communication

In computing, Inter-process communication is a set of methods for the exchange of data among multiple threads in one or more processes. Processes may be running on one or more computers connected by a network. IPC methods are divided into methods for message passing, synchronization, shared...

and to the increased frequency of context switch

Context switch

A context switch is the computing process of storing and restoring the state of a CPU so that execution can be resumed from the same point at a later time. This enables multiple processes to share a single CPU. The context switch is an essential feature of a multitasking operating system...

es.

In fact, as guessed in 1995, the reasons for the poor performance of microkernels might equally well have been: (1) an actual inefficiency of the whole microkernel approach, (2) the particular concepts implemented in those microkernels, and (3) the particular implementation of those concepts. Therefore it remained to be studied whether the way to build an efficient microkernel was, unlike previous attempts, to apply the correct construction techniques.

On the other hand, the hierarchical protection domains architecture that leads to the design of a monolithic kernel has a significant performance drawback each time there is an interaction between different levels of protection (i.e. when a process has to manipulate a data structure both in 'user mode' and 'supervisor mode'), since this requires message copying by value.

By the mid-1990s, most researchers had abandoned the belief that careful tuning could reduce this overhead dramatically, but recently, newer microkernels, optimized for performance, such as L4

L4 microkernel family

L4 is a family of second-generation microkernels, generally used to implement Unix-like operating systems, but also used in a variety of other systems.L4 was a response to the poor performance of earlier microkernel-base operating systems...

and K42

K42

K42 is an open-source research operating system for cache-coherent 64-bit multiprocessor systems. It was developed primarily at IBM Thomas J. Watson Research Center in collaboration with University of Toronto and University of New Mexico...

have addressed these problems.

Hybrid (or modular) kernels

Hybrid kernels are part of operating systems such as Microsoft Windows NT, 2000 and XP, and DragonFly BSD. They are similar to microkernels, except they include some additional code in kernel space to increase performance. These kernels represent a compromise that was implemented by some developers before it was demonstrated that pure microkernels can provide high performance. These types of kernels are extensions of microkernels with some properties of monolithic kernels. Unlike monolithic kernels, these types of kernels are unable to load modules at runtime on their own. Hybrid kernels are microkernels that have some "non-essential" code in kernel space in order for the code to run more quickly than it would were it to be in user space. Hybrid kernels are a compromise between the monolithic and microkernel designs. This implies running some services (such as the network stack or the filesystem) in kernel space to reduce the performance overhead of a traditional microkernel, but still running kernel code (such as device drivers) as servers in user space.

Many traditionally monolithic kernels are now at least adding (if not actively exploiting) the module capability. The best known of these kernels is the Linux kernel. The modular kernel essentially can have parts of it that are built into the core kernel binary or binaries that load into memory on demand. It is important to note that a tainted module has the potential to destabilize a running kernel. Many people become confused on this point when discussing microkernels. It is possible to write a driver for a microkernel in a completely separate memory space and test it before going live. When a kernel module is loaded, it accesses the monolithic portion's memory space by adding to it what it needs, thereby opening the doorway to possible pollution. A few advantages of the modular (or hybrid) kernel are:

- Faster development time for drivers that can operate from within modules. No reboot is required for testing (provided the kernel is not destabilized).

- On-demand capability, versus spending time recompiling a whole kernel for things like new drivers or subsystems.

- Faster integration of third-party technology (related to development, but pertinent in its own right).

Modules generally communicate with the kernel through a module interface of some sort. The interface is generalized (although particular to a given operating system), so it is not always possible to use modules: device drivers often need more flexibility than the module interface affords. Going through the module interface essentially amounts to two system calls, and safety checks that need to be done only once in a monolithic kernel may now be done twice. Some of the disadvantages of the modular approach are:

- With more interfaces to pass through, the possibility of increased bugs exists (which implies more security holes).

- Maintaining modules can be confusing for some administrators when dealing with problems like symbol differences.
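The load-time behaviour described above can be illustrated with a small toy model. This is only a sketch under stated assumptions: the `Kernel`, `Module`, `make_driver` and `ModuleLoadError` names and the integer symbol versions are hypothetical and do not correspond to the interface of any real kernel. It shows a module being loaded on demand through a generalized interface, and a module built against a different symbol version being rejected.

```python
# Toy model of a modular kernel's module interface (illustrative only;
# every name here is hypothetical, not a real kernel API).
from dataclasses import dataclass, field
from typing import Callable, Dict


class ModuleLoadError(Exception):
    """Raised when a module cannot be linked against the running kernel."""


@dataclass
class Module:
    name: str
    # Symbols the module needs, mapped to the version it was built against.
    required_symbols: Dict[str, int]
    init: Callable[["Kernel"], None]


@dataclass
class Kernel:
    # Symbols exported by the core kernel binary, each with a version tag.
    exported_symbols: Dict[str, int]
    loaded: Dict[str, Module] = field(default_factory=dict)
    drivers: Dict[str, str] = field(default_factory=dict)

    def load_module(self, module: Module) -> None:
        # Load-time safety check: every required symbol must exist and
        # match the version the module was built against.
        for symbol, version in module.required_symbols.items():
            have = self.exported_symbols.get(symbol)
            if have is None:
                raise ModuleLoadError(f"{module.name}: unknown symbol {symbol}")
            if have != version:
                raise ModuleLoadError(
                    f"{module.name}: {symbol} v{version} != kernel v{have}"
                )
        # The module's code runs with direct access to kernel state,
        # which is why a faulty module can destabilize the kernel.
        module.init(self)
        self.loaded[module.name] = module


def make_driver(name: str, device: str, symbols: Dict[str, int]) -> Module:
    def init(kernel: Kernel) -> None:
        kernel.drivers[device] = name

    return Module(name, symbols, init)


kernel = Kernel(exported_symbols={"register_device": 2, "alloc_page": 1})
good = make_driver("net0", "ethernet", {"register_device": 2})
stale = make_driver("old_disk", "disk", {"register_device": 1})

kernel.load_module(good)  # loaded on demand, no reboot or recompile

try:
    kernel.load_module(stale)  # built against an older symbol version
    stale_rejected = False
except ModuleLoadError:
    stale_rejected = True
```

In this model the stale module is refused before its `init` function runs, which is one way a loader might guard against the symbol differences mentioned in the list above.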

Nanokernels

A nanokernel delegates virtually all services, including even the most basic ones such as interrupt controllers or the timer, to device drivers, making the kernel's memory requirement even smaller than that of a traditional microkernel.

Exokernels

Exokernels are a still-experimental approach to operating system design. They differ from other types of kernels in that their functionality is limited to the protection and multiplexing of the raw hardware; they provide no hardware abstractions on top of which applications can be constructed. This separation of hardware protection from hardware management lets application developers determine how to make the most efficient use of the available hardware for each specific program.

Exokernels in themselves are extremely small. However, they are accompanied by library operating systems, which provide application developers with the conventional functionality of a complete operating system. A major advantage of exokernel-based systems is that they can incorporate multiple library operating systems, each exporting a different API (application programming interface), such as one for Linux and one for Microsoft Windows, making it possible to run Linux and Windows applications simultaneously. Such systems are still evolving and remain at an experimental stage. They provide minimal abstractions, allowing low-level hardware access.

In exokernel systems, library operating systems provide the abstractions typically present in monolithic kernels. Exokernels, also known as vertically structured operating systems, are a new and radical approach to OS design. The idea is to force as few abstractions as possible on developers, enabling them to make as many decisions as possible regarding hardware abstractions. An exokernel does not abstract hardware into theoretical models. Instead, it allocates physical hardware resources, such as processor time, memory pages, and disk blocks, to different programs. When an application or abstraction requests specific memory addresses or disk blocks, the kernel ensures that the requested resource is free and that the application is allowed to access it. A program running on an exokernel can link to a library operating system that uses the exokernel to simulate the abstractions of a well-known OS, or it can develop application-specific abstractions for better performance. MIT has built exokernels such as Aegis and XOK, together with a library operating system called ExOS.
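The division of labour between an exokernel and a library operating system can be sketched in a few lines. The following toy model is an illustration only: `Exokernel`, `FileLibOS` and `AccessDenied` are hypothetical names, and the "disk" is just a Python list. The kernel checks only that a requested block is free and that the caller owns it, while the notion of a named file lives entirely in the library.

```python
# Toy exokernel: protection and multiplexing only (all names hypothetical).
from typing import Dict, List, Optional


class AccessDenied(Exception):
    """Raised when a request fails the exokernel's protection check."""


class Exokernel:
    """Multiplexes raw disk blocks; it has no notion of files."""

    def __init__(self, num_blocks: int) -> None:
        self.blocks: List[bytes] = [b""] * num_blocks
        self.owner: List[Optional[str]] = [None] * num_blocks  # None = free

    def allocate(self, app: str, block: int) -> None:
        # The application names the exact physical block it wants.
        if self.owner[block] is not None:
            raise AccessDenied(f"block {block} is not free")
        self.owner[block] = app

    def _check(self, app: str, block: int) -> None:
        if self.owner[block] != app:
            raise AccessDenied(f"{app} does not own block {block}")

    def write(self, app: str, block: int, data: bytes) -> None:
        self._check(app, block)
        self.blocks[block] = data

    def read(self, app: str, block: int) -> bytes:
        self._check(app, block)
        return self.blocks[block]


class FileLibOS:
    """Library OS linked into one application: adds named files."""

    def __init__(self, kernel: Exokernel, app: str) -> None:
        self.kernel = kernel
        self.app = app
        self.table: Dict[str, int] = {}  # file name -> physical block

    def create(self, name: str, data: bytes, block: int) -> None:
        self.kernel.allocate(self.app, block)
        self.kernel.write(self.app, block, data)
        self.table[name] = block

    def read(self, name: str) -> bytes:
        return self.kernel.read(self.app, self.table[name])


kernel = Exokernel(num_blocks=8)
editor_os = FileLibOS(kernel, app="editor")
game_os = FileLibOS(kernel, app="game")

editor_os.create("notes.txt", b"hello", block=3)
game_os.create("save.dat", b"level 2", block=5)
```

Each application could link a different library with its own file layout or caching policy; the kernel's checks would stay the same.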

Early operating system kernels

Strictly speaking, an operating system (and thus, a kernel) is not required to run a computer. Programs can be directly loaded and executed on the "bare metal" machine, provided that the authors of those programs are willing to work without any hardware abstraction or operating system support. Most early computers operated this way during the 1950s and early 1960s; they were reset and reloaded between the execution of different programs. Eventually, small ancillary programs such as program loaders and debuggers were left in memory between runs, or loaded from ROM. As these were developed, they formed the basis of what became early operating system kernels. The "bare metal" approach is still used today on some video game consoles and embedded systems, but in general, newer computers use modern operating systems and kernels.

In 1969 the RC 4000 Multiprogramming System introduced the system design philosophy of a small nucleus "upon which operating systems for different purposes could be built in an orderly manner", which would later be called the microkernel approach.

Time-sharing operating systems

In the decade preceding Unix, computers had grown enormously in power, to the point where computer operators were looking for new ways to get people to use the spare time on their machines. One of the major developments during this era was time-sharing, whereby a number of users would each get small slices of computer time, at a rate at which it appeared that each was connected to their own, slower, machine.
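The idea of handing out small slices of computer time in turn can be sketched as a round-robin loop. This is a simplified illustration, not a description of how any historical system was implemented; the function name, the user names and the quantum of two time units are arbitrary assumptions.

```python
# Minimal round-robin time-slicing sketch (illustrative assumptions only).
from collections import deque
from typing import Dict, List, Tuple


def round_robin(jobs: Dict[str, int], quantum: int) -> List[Tuple[str, int]]:
    """Return the sequence of (user, units_run) slices that get executed."""
    queue = deque(jobs.items())
    timeline: List[Tuple[str, int]] = []
    while queue:
        user, remaining = queue.popleft()
        ran = min(quantum, remaining)  # a user never runs past the slice
        timeline.append((user, ran))
        if remaining > ran:
            # Unfinished work goes to the back of the queue.
            queue.append((user, remaining - ran))
    return timeline


timeline = round_robin({"alice": 3, "bob": 5, "carol": 2}, quantum=2)
```

With slices that are short relative to human reaction time, each user in such a scheme sees steady progress, as if working alone on a slower machine.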

The development of time-sharing systems led to a number of problems. One was that users, particularly at universities where the systems were being developed, seemed to want to hack the system to get more CPU time. For this reason, security and access control became a major focus of the Multics project in 1965. Another ongoing issue was properly handling computing resources: users spent most of their time staring at the screen and thinking instead of actually using the resources of the computer, and a time-sharing system should give the CPU time to an active user during these periods. Finally, the systems typically offered a memory hierarchy several layers deep, and partitioning this expensive resource led to major developments in virtual memory systems.

Amiga

The CommodoreCommodore International

Commodore is the commonly used name for Commodore Business Machines , the U.S.-based home computer manufacturer and electronics manufacturer headquartered in West Chester, Pennsylvania, which also housed Commodore's corporate parent company, Commodore International Limited...

Amiga

Amiga

The Amiga is a family of personal computers that was sold by Commodore in the 1980s and 1990s. The first model was launched in 1985 as a high-end home computer and became popular for its graphical, audio and multi-tasking abilities...