Calibration

Calibration is a comparison between two measurements: one of known magnitude or correctness, made or set with one device, and another made in as similar a way as possible with a second device.

The device with the known or assigned correctness is called the standard. The second device is the unit under test, test instrument, or any of several other names for the device being calibrated.

Before the Industrial Revolution, the most common pressure measurement device was a hydrostatic manometer, which is not practical for measuring high pressures. Eugene Bourdon fulfilled the need for high pressure measurement with his Bourdon tube pressure gage.

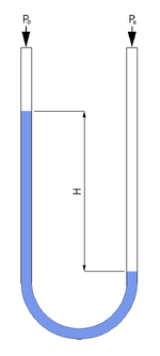

Very few organizations have only one oscilloscope. Generally, they are either absent or present in large groups. Older devices can be reserved for less demanding uses and get a limited calibration or no calibration at all. In production applications, oscilloscopes can be put in racks used only for one specific purpose. The calibration of that specific scope only has to address that purpose.

This whole process is repeated for each of the basic instrument types present in the organization, such as the digital multimeter pictured below.

The picture above also shows the extent of the integration between Quality Assurance and calibration. The small horizontal unbroken paper seals connecting each instrument to the rack prove that the instrument has not been removed since it was last calibrated. These seals are also used to prevent undetected access to the adjustments of the instrument. There are also labels showing the date of the last calibration and the date when the calibration interval dictates that the next one is due. Some organizations also assign unique identification to each instrument to standardize the recordkeeping and keep track of accessories that are integral to a specific calibration condition.

When the instruments being calibrated are integrated with computers, the integrated computer programs and any calibration corrections are also under control.

In the United States, there is no universally accepted nomenclature to identify individual instruments. Besides having multiple names for the same device type, there also are multiple, different devices with the same name. This is before slang and shorthand further confuse the situation, which reflects the ongoing open and intense competition that has prevailed since the Industrial Revolution.

Theoretically, anyone who can read and follow the directions of a calibration procedure can perform the work. It is recognizing and dealing with the exceptions that is the most challenging aspect of the work. This is where experience and judgement are called for and where most of the resources are consumed.

is accomplished by a formal comparison to a standard which is directly or indirectly related to national standards (NIST in the USA), international standards, or certified reference materials.

Quality management systems call for an effective metrology system which includes formal, periodic, and documented calibration of all measuring instruments. The ISO 9000 and ISO 17025 sets of standards require that these traceable actions are to a high level and set out how they can be quantified.

In general use, calibration is often regarded as including the process of adjusting the output or indication on a measurement instrument to agree with the value of the applied standard, within a specified accuracy. For example, a thermometer could be calibrated so the error of indication or the correction is determined, and adjusted (e.g. via calibration constants) so that it shows the true temperature in Celsius at specific points on the scale. This is the perception of the instrument's end-user. However, very few instruments can be adjusted to exactly match the standards they are compared to. For the vast majority of calibrations, the calibration process is actually the comparison of an unknown to a known and recording the results.

plus a number of derived units) which will be used to provide traceability to customers' instruments by calibration. The NMI supports the metrological infrastructure in that country (and often others) by establishing an unbroken chain, from the top level of standards to an instrument used for measurement. Examples of National Metrology Institutes are NPL in the UK, NIST in the United States, PTB in Germany and many others. Since the Mutual Recognition Agreement was signed, it is now straightforward to take traceability from any participating NMI, and it is no longer necessary for a company to obtain traceability for measurements from the NMI of the country in which it is situated.

To communicate the quality of a calibration the calibration value is often accompanied by a traceable uncertainty statement to a stated confidence level. This is evaluated through careful uncertainty analysis.
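As a rough illustration of what such an uncertainty statement summarizes, the sketch below combines several standard uncertainty components in quadrature and multiplies by a coverage factor. It assumes the components are uncorrelated and uses a coverage factor of 2, which is commonly associated with roughly 95% confidence for a normal distribution; the function names and the sample values are hypothetical and do not come from any particular procedure.

```python
import math
from typing import Iterable


def combined_standard_uncertainty(components: Iterable[float]) -> float:
    """Root-sum-square of standard uncertainties (assumes no correlation)."""
    return math.sqrt(sum(u * u for u in components))


def expanded_uncertainty(components: Iterable[float],
                         coverage_factor: float = 2.0) -> float:
    """Combined standard uncertainty scaled by a coverage factor k."""
    return coverage_factor * combined_standard_uncertainty(components)


# Hypothetical components, all expressed in the same unit.
components = [3.0, 4.0]
print(combined_standard_uncertainty(components))  # 5.0
print(expanded_uncertainty(components))           # 10.0
```

A real uncertainty analysis would also have to justify each component and the chosen confidence level; this only shows the final arithmetic.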

History

The words "calibrate" and "calibration" entered the English language during the American Civil War, in descriptions of artillery. Many of the earliest measuring devices were intuitive and easy to conceptually validate. The term "calibration" probably was first associated with the precise division of linear distance and angles using a dividing engine and the measurement of gravitational mass using a weighing scale. These two forms of measurement alone and their direct derivatives supported nearly all commerce and technology development from the earliest civilizations until about AD 1800.

The Industrial Revolution introduced wide-scale use of indirect measurement. The measurement of pressure was an early example of how indirect measurement was added to the existing direct measurement of the same phenomenon.

In the direct reading hydrostatic manometer design on the left, unknown pressure pushes the liquid down the left side of the manometer U-tube (or unknown vacuum pulls the liquid up the tube, as shown) where a length scale next to the tube measures the pressure, referenced to the other, open end of the manometer on the right side of the U-tube. The resulting height difference "H" is a direct measurement of the pressure or vacuum with respect to atmospheric pressure. The absence of pressure or vacuum would make H=0. The self-applied calibration would only require the length scale to be set to zero at that same point.
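The link between the height difference H and pressure can be sketched with the standard hydrostatic relation, gauge pressure = density × gravity × H. The function name, the water density figure and the sign convention (positive H for pressure above atmospheric, negative for vacuum) are assumptions made for illustration only.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2, conventional standard value


def manometer_gauge_pressure(height_m: float,
                             density_kg_m3: float,
                             gravity_m_s2: float = STANDARD_GRAVITY) -> float:
    """Gauge pressure in pascals for a liquid column height difference H.

    Assumed convention: positive H is pressure above atmospheric,
    negative H is vacuum.
    """
    return density_kg_m3 * gravity_m_s2 * height_m


WATER_DENSITY = 998.0  # kg/m^3, assumed value for water near 20 degrees C

print(manometer_gauge_pressure(0.0, WATER_DENSITY))   # 0.0, no pressure or vacuum
print(manometer_gauge_pressure(0.10, WATER_DENSITY))  # roughly 979 Pa
```

Because the reading depends only on a length, the liquid density and local gravity, zeroing the scale at H=0 is the only adjustment this kind of instrument needs.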

In a Bourdon tube shown in the two views on the right, applied pressure entering from the bottom on the silver barbed pipe tries to straighten a curved tube (or vacuum tries to curl the tube to a greater extent), moving the free end of the tube that is mechanically connected to the pointer. This is indirect measurement that depends on calibration to read pressure or vacuum correctly. No self-calibration is possible, but generally the zero pressure state is correctable by the user.

Even in recent times, direct measurement is used to increase confidence in the validity of the measurements.

In the early days of US automobile use, people wanted to see the gasoline they were about to buy in a big glass pitcher, a direct measure of volume and quality via appearance. By 1930, rotary flowmeters were accepted as indirect substitutes. A hemispheric viewing window allowed consumers to see the blade of the flowmeter turn as the gasoline was pumped. By 1970, the windows were gone and the measurement was totally indirect.

Indirect measurement always involves linkages or conversions of some kind. It is seldom possible to intuitively monitor the measurement. These facts intensify the need for calibration.

Most measurement techniques used today are indirect.

Calibration versus metrology

There is no consistent demarcation between calibration and metrology. Generally, the basic process below would be metrology-centered if it involved new or unfamiliar equipment and processes. For example, a calibration laboratory owned by a successful maker of microphones would have to be proficient in electronic distortion and sound pressure measurement. For them, the calibration of a new frequency spectrum analyzer is a routine matter with extensive precedent. On the other hand, a similar laboratory supporting a coaxial cable manufacturer may not be as familiar with this specific calibration subject. A transplanted calibration process that worked well to support the microphone application may or may not be the best answer or even adequate for the coaxial cable application. A prior understanding of the measurement requirements of coaxial cable manufacturing would make the calibration process below more successful.

Basic calibration process

The calibration process begins with the design of the measuring instrument that needs to be calibrated. The design has to be able to "hold a calibration" through its calibration interval. In other words, the design has to be capable of measurements that are "within engineering tolerance" when used within the stated environmental conditions over some reasonable period of time. Having a design with these characteristics increases the likelihood of the actual measuring instruments performing as expected.

The exact mechanism for assigning tolerance values varies by country and industry type. The measuring equipment manufacturer generally assigns the measurement tolerance, suggests a calibration interval and specifies the environmental range of use and storage. The using organization generally assigns the actual calibration interval, which is dependent on this specific measuring equipment's likely usage level. A very common interval in the United States for 8–12 hours of use 5 days per week is six months. That same instrument in 24/7 usage would generally get a shorter interval. The assignment of calibration intervals can be a formal process based on the results of previous calibrations.

The next step is defining the calibration process. The selection of a standard or standards is the most visible part of the calibration process. Ideally, the standard has less than 1/4 of the measurement uncertainty of the device being calibrated. When this goal is met, the accumulated measurement uncertainty of all of the standards involved is considered to be insignificant when the final measurement is also made with the 4:1 ratio. This ratio was probably first formalized in Handbook 52 that accompanied MIL-STD-45662A, an early US Department of Defense metrology program specification. It was 10:1 from its inception in the 1950s until the 1970s, when advancing technology made 10:1 impossible for most electronic measurements.
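The 4:1 guideline can be expressed as a simple check. The sketch below is only an illustration: the function names are hypothetical, both inputs are assumed to be in the same unit (for example, percent of reading), and a ratio of exactly 4:1 is treated as acceptable.

```python
def accuracy_ratio(device_tolerance: float, standard_uncertainty: float) -> float:
    """Ratio of the device tolerance to the uncertainty of the standard."""
    if standard_uncertainty <= 0:
        raise ValueError("standard_uncertainty must be positive")
    return device_tolerance / standard_uncertainty


def meets_ratio(device_tolerance: float,
                standard_uncertainty: float,
                required_ratio: float = 4.0) -> bool:
    """True when the standard is good enough for the required ratio."""
    return accuracy_ratio(device_tolerance, standard_uncertainty) >= required_ratio


print(accuracy_ratio(4.0, 1.0))     # 4.0
print(meets_ratio(4.0, 1.0))        # True
print(meets_ratio(3.0, 1.0))        # False, only 3:1
print(meets_ratio(4.0, 1.0, 10.0))  # False under the older 10:1 rule
```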

Maintaining a 4:1 accuracy ratio with modern equipment is difficult. The test equipment being calibrated can be just as accurate as the working standard. If the accuracy ratio is less than 4:1, then the calibration tolerance can be reduced to compensate. When 1:1 is reached, only an exact match between the standard and the device being calibrated is a completely correct calibration. Another common method for dealing with this capability mismatch is to reduce the accuracy of the device being calibrated.

For example, a gage with 3% manufacturer-stated accuracy can be changed to 4% so that a 1% accuracy standard can be used at 4:1. If the gage is used in an application requiring 16% accuracy, having the gage accuracy reduced to 4% will not affect the accuracy of the final measurements. This is called a limited calibration. But if the final measurement requires 10% accuracy, then the 3% gage never can be better than 3.3:1. Then perhaps adjusting the calibration tolerance for the gage would be a better solution. If the calibration is performed at 100 units, the 1% standard would actually be anywhere between 99 and 101 units. The acceptable values of calibrations where the test equipment is at the 4:1 ratio would be 96 to 104 units, inclusive. Changing the acceptable range to 97 to 103 units would remove the potential contribution of all of the standards and preserve a 3.3:1 ratio. Continuing, a further change to the acceptable range to 98 to 102 restores more than a 4:1 final ratio.
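The acceptance ranges in this example can be reproduced with a few lines of arithmetic. The sketch assumes the tolerance and the amount removed from it (called a guard band here, a term the text does not use) are both percentages of the nominal value. Reading the "final ratio" as the final requirement divided by the remaining half-width is one interpretation; as noted, the mathematics of the example can be challenged.

```python
from typing import Tuple


def acceptance_limits(nominal: float,
                      tolerance_pct: float,
                      guard_band_pct: float = 0.0) -> Tuple[float, float]:
    """Acceptable (low, high) readings after narrowing the tolerance."""
    if guard_band_pct > tolerance_pct:
        raise ValueError("guard band cannot exceed the tolerance")
    half_width = nominal * (tolerance_pct - guard_band_pct) / 100.0
    return (nominal - half_width, nominal + half_width)


print(acceptance_limits(100.0, 4.0))       # (96.0, 104.0)
print(acceptance_limits(100.0, 4.0, 1.0))  # (97.0, 103.0)
print(acceptance_limits(100.0, 4.0, 2.0))  # (98.0, 102.0)

# Final requirement of 10% against half-widths of 3% and 2% (assumed reading).
print(round(10.0 / 3.0, 1))  # 3.3
print(10.0 / 2.0)            # 5.0
```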

This is a simplified example. The mathematics of the example can be challenged. It is important that whatever thinking guided this process in an actual calibration be recorded and accessible. Informality contributes to tolerance stacks and other difficult-to-diagnose post-calibration problems.

Also in the example above, ideally the calibration value of 100 units would be the best point in the gage's range to perform a single-point calibration. It may be the manufacturer's recommendation or it may be the way similar devices are already being calibrated. Multiple point calibrations are also used. Depending on the device, a zero unit state, the absence of the phenomenon being measured, may also be a calibration point. Or zero may be resettable by the user; there are several variations possible. Again, the points to use during calibration should be recorded.

There may be specific connection techniques between the standard and the device being calibrated that may influence the calibration. For example, in electronic calibrations involving analog phenomena, the impedance of the cable connections can directly influence the result.

All of the information above is collected in a calibration procedure, which is a specific test method. These procedures capture all of the steps needed to perform a successful calibration. The manufacturer may provide one or the organization may prepare one that also captures all of the organization's other requirements. There are clearinghouses for calibration procedures such as the Government-Industry Data Exchange Program (GIDEP) in the United States.

This exact process is repeated for each of the standards used until transfer standards, certified reference materials and/or natural physical constants, the measurement standards with the least uncertainty in the laboratory, are reached. This establishes the traceability of the calibration.

See metrology for other factors that are considered during calibration process development.

After all of this, individual instruments of the specific type discussed above can finally be calibrated. The process generally begins with a basic damage check. Some organizations such as nuclear power plants collect "as-found" calibration data before any routine maintenance is performed. After routine maintenance and deficiencies detected during calibration are addressed, an "as-left" calibration is performed.
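One way to keep "as-found" and "as-left" data side by side is a small record per calibration point. The sketch below is hypothetical: the class name, field names and sample readings are invented for illustration and do not reflect any specific organization's record format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CalibrationPoint:
    """Readings taken at one calibration point (hypothetical record layout)."""
    nominal: float
    low_limit: float
    high_limit: float
    as_found: float                  # reading before any maintenance
    as_left: Optional[float] = None  # reading after maintenance or adjustment

    def in_tolerance(self, reading: float) -> bool:
        return self.low_limit <= reading <= self.high_limit

    def as_found_ok(self) -> bool:
        return self.in_tolerance(self.as_found)

    def as_left_ok(self) -> bool:
        # Not acceptable until an as-left reading has been recorded.
        return self.as_left is not None and self.in_tolerance(self.as_left)


point = CalibrationPoint(nominal=100.0, low_limit=96.0, high_limit=104.0,
                         as_found=105.2)
print(point.as_found_ok())  # False, found out of tolerance
point.as_left = 100.4       # recorded after adjustment
print(point.as_left_ok())   # True
```

Keeping the as-found value even when the as-left value passes preserves the evidence that the instrument was out of tolerance while in use.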

More commonly, a calibration technician is entrusted with the entire process and signs the calibration certificate, which documents the completion of a successful calibration.

Calibration process success factors

The basic process outlined above is a difficult and expensive challenge. The cost for ordinary equipment support is generally about 10% of the original purchase price on a yearly basis, as a commonly accepted rule-of-thumb. Exotic devices such as scanning electron microscopes, gas chromatograph systems and laser interferometer devices can be even more costly to maintain.

The extent of the calibration program exposes the core beliefs of the organization involved. The integrity of organization-wide calibration is easily compromised. Once this happens, the links between scientific theory, engineering practice and mass production that measurement provides can be missing from the start on new work or eventually lost on old work.

The 'single measurement' device used in the basic calibration process description above does exist. But, depending on the organization, the majority of the devices that need calibration can have several ranges and many functionalities in a single instrument. A good example is a common modern oscilloscope. There easily could be 200,000 combinations of settings to completely calibrate and limitations on how much of an all-inclusive calibration can be automated.

Every organization using oscilloscopes has a wide variety of calibration approaches open to it. If a quality assurance program is in force, customers and program compliance efforts can also directly influence the calibration approach. Most oscilloscopes are capital assets that increase the value of the organization, in addition to the value of the measurements they make. The individual oscilloscopes are subject to depreciation for tax purposes over 3, 5, 10 years or some other period in countries with complex tax codes. The tax treatment of maintenance activity on those assets can bias calibration decisions.

New oscilloscopes are supported by their manufacturers for at least five years, in general. The manufacturers can provide calibration services directly or through agents entrusted with the details of the calibration and adjustment processes.

Very few organizations have only one oscilloscope. Generally, they are either absent or present in large groups. Older devices can be reserved for less demanding uses and get a limited calibration or no calibration at all. In production applications, oscilloscopes can be put in racks used only for one specific purpose. The calibration of that specific scope only has to address that purpose.

This whole process is repeated for each of the basic instrument types present in the organization, such as the digital multimeter pictured below.

The picture above also shows the extent of the integration between Quality Assurance and calibration. The small horizontal unbroken paper seals connecting each instrument to the rack prove that the instrument has not been removed since it was last calibrated. These seals are also used to prevent undetected access to the adjustments of the instrument. There are also labels showing the date of the last calibration and the date, set by the calibration interval, when the next one is due. Some organizations also assign unique identification to each instrument to standardize the recordkeeping and keep track of accessories that are integral to a specific calibration condition.

When the instruments being calibrated are integrated with computers, the integrated computer programs and any calibration corrections are also under control.

In the United States, there is no universally accepted nomenclature to identify individual instruments. Besides having multiple names for the same device type, there are also multiple, different devices with the same name. This is before slang and shorthand further confuse the situation, which reflects the ongoing open and intense competition that has prevailed since the Industrial Revolution.

The calibration paradox

Successful calibration has to be consistent and systematic. At the same time, the complexity of some instruments requires that only key functions be identified and calibrated. Under those conditions, a degree of randomness is needed to find unexpected deficiencies. Even the most routine calibration requires a willingness to investigate any unexpected observation. Theoretically, anyone who can read and follow the directions of a calibration procedure can perform the work. It is recognizing and dealing with the exceptions that is the most challenging aspect of the work. This is where experience and judgement are called for and where most of the resources are consumed.

Quality

To improve the quality of the calibration and have the results accepted by outside organizations, it is desirable for the calibration and subsequent measurements to be "traceable" to the internationally defined measurement units. Establishing traceability is accomplished by a formal comparison to a standard which is directly or indirectly related to national standards (NIST in the USA), international standards, or certified reference materials.

Quality management systems call for an effective metrology system which includes formal, periodic, and documented calibration of all measuring instruments. The ISO 9000 and ISO 17025 sets of standards require that these traceable actions are to a high level and set out how they can be quantified.

Instrument calibration

Calibration can be called for:

- with a new instrument

- when a specified time period has elapsed

- when a specified usage (operating hours) has elapsed

- when an instrument has had a shock or vibration which potentially may have put it out of calibration

- after sudden changes in weather

- whenever observations appear questionable
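The conditions listed above can be sketched as a simple check. The following is only an illustration under stated assumptions: the `InstrumentStatus` record, its field names, and the interval and usage limits are hypothetical and do not come from any standard or from this article.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class InstrumentStatus:
    """Hypothetical record of the facts needed to decide if calibration is due."""
    last_calibrated: Optional[date]   # None means never calibrated (new instrument)
    interval: timedelta               # assumed calibration interval
    hours_since_calibration: float    # operating hours since the last calibration
    max_hours: float                  # assumed usage limit in operating hours
    shock_or_vibration: bool = False
    weather_change: bool = False
    questionable_readings: bool = False


def calibration_reasons(status: InstrumentStatus, today: date) -> List[str]:
    """Return every reason from the list above that currently applies."""
    reasons = []
    if status.last_calibrated is None:
        reasons.append("new instrument")
    elif today - status.last_calibrated >= status.interval:
        reasons.append("time period elapsed")
    if status.hours_since_calibration >= status.max_hours:
        reasons.append("usage elapsed")
    if status.shock_or_vibration:
        reasons.append("shock or vibration")
    if status.weather_change:
        reasons.append("sudden change in weather")
    if status.questionable_readings:
        reasons.append("questionable observations")
    return reasons
```

An empty list means none of the listed triggers applies. Returning all applicable reasons, rather than a single yes or no, keeps the record of why a calibration was requested.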

In general use, calibration is often regarded as including the process of adjusting the output or indication on a measurement instrument to agree with the value of the applied standard, within a specified accuracy. For example, a thermometer could be calibrated so the error of indication or the correction is determined, and adjusted (e.g. via calibration constants) so that it shows the true temperature in Celsius at specific points on the scale. This is the perception of the instrument's end-user. However, very few instruments can be adjusted to exactly match the standards they are compared to. For the vast majority of calibrations, the calibration process is actually the comparison of an unknown to a known and recording the results.
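The comparison of an unknown to a known can be sketched in a few lines. This is a minimal illustration, assuming the usual convention that the error of indication is the indicated value minus the reference value and that the correction is its negative. The function name, the record layout, and the tolerance are hypothetical.

```python
from typing import Dict, List, Tuple


def compare_to_standard(
    points: List[Tuple[float, float]], tolerance: float
) -> List[Dict[str, object]]:
    """Compare (reference, indicated) pairs and record the results.

    error      = indicated - reference (error of indication)
    correction = reference - indicated (value to add to the indication)
    """
    results = []
    for reference, indicated in points:
        error = indicated - reference
        results.append(
            {
                "reference": reference,
                "indicated": indicated,
                "error": error,
                "correction": -error,
                # The tolerance is an assumed acceptance limit.
                "in_tolerance": abs(error) <= tolerance,
            }
        )
    return results
```

Nothing is adjusted here. The function only records how far the unit under test is from the standard at each point, which matches what most calibrations actually deliver.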

International

In many countries a National Metrology Institute (NMI) will exist which will maintain primary standards of measurement (the main SI units plus a number of derived units) which will be used to provide traceability to customers' instruments by calibration. The NMI supports the metrological infrastructure in that country (and often others) by establishing an unbroken chain, from the top level of standards to an instrument used for measurement. Examples of National Metrology Institutes are NPL in the UK, NIST in the United States, PTB in Germany and many others. Since the Mutual Recognition Agreement was signed, it is now straightforward to take traceability from any participating NMI, and it is no longer necessary for a company to obtain traceability for measurements from the NMI of the country in which it is situated.

To communicate the quality of a calibration the calibration value is often accompanied by a traceable uncertainty statement to a stated confidence level. This is evaluated through careful uncertainty analysis.
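As a rough illustration of such an uncertainty statement, the sketch below combines individual standard uncertainties by root-sum-of-squares and multiplies by a coverage factor k. It assumes the components are uncorrelated and that k = 2 corresponds to a confidence level of approximately 95% for a normal distribution. The function names and the example component values are hypothetical.

```python
import math
from typing import Sequence


def combined_standard_uncertainty(components: Sequence[float]) -> float:
    """Root-sum-of-squares of standard uncertainties (assumes no correlation)."""
    return math.sqrt(sum(u * u for u in components))


def expanded_uncertainty(components: Sequence[float], k: float = 2.0) -> float:
    """Combined standard uncertainty multiplied by the coverage factor k."""
    return k * combined_standard_uncertainty(components)


def uncertainty_statement(
    value: float, unit: str, components: Sequence[float], k: float = 2.0
) -> str:
    """Format a calibration value together with its expanded uncertainty."""
    big_u = expanded_uncertainty(components, k)
    return f"{value} {unit} +/- {big_u:.3g} {unit} (k = {k:g})"


# Hypothetical components: reference standard and readout resolution.
statement = uncertainty_statement(100.02, "degC", [0.03, 0.04])
```

A real uncertainty analysis would also document where each component comes from and whether correlations can be neglected.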

See also

- Calibrated geometry

- Calibration (statistics)

- Calibration curve

- Color calibration: used to calibrate a computer monitor or display.

- Deadweight tester

- Measurement microphone calibration

- Measurement uncertainty

- Musical tuning: tuning, in music, means calibrating musical instruments into playing the right pitch.

- Precision measurement equipment laboratory

- Scale test car: a device used to calibrate weighing scales that weigh railroad cars.

- Systems of measurement