Microarchitecture

Encyclopedia

In computer engineering

, microarchitecture (sometimes abbreviated to µarch or uarch), also called computer organization, is the way a given instruction set architecture (ISA) is implemented on a processor. A given ISA may be implemented with different microarchitectures. Implementations might vary due to different goals of a given design or due to shifts in technology. Computer architecture

is the combination of microarchitecture and instruction set design.

programmer or compiler writer. The ISA includes the execution model, processor register

s, address and data formats among other things. The microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA.

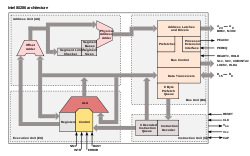

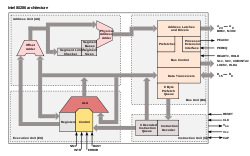

The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be everything from single gates and registers, to complete arithmetic logic unit

s (ALU)s and even larger elements. These diagrams generally separate the datapath

(where data is placed) and the control path (which can be said to steer the data).

Each microarchitectural element is in turn represented by a schematic

describing the interconnections of logic gate

s used to implement it.

Each logic gate is in turn represented by a circuit diagram

describing the connections of the transistors used to implement it in some particular logic family

.

Machines with different microarchitectures may have the same instruction set architecture, and thus be capable of executing the same programs. New microarchitectures and/or circuitry solutions, along with advances in semiconductor manufacturing, are what allows newer generations of processors to achieve higher performance while using the same ISA.

In principle, a single microarchitecture could execute several different ISAs with only minor changes to the microcode.

The pipelined

The pipelined

datapath

is the most commonly used datapath design in microarchitecture today. This technique is used in most modern microprocessors, microcontroller

s, and DSPs

. The pipelined architecture allows multiple instructions to overlap in execution, much like an assembly line. The pipeline includes several different stages which are fundamental in microarchitecture designs. Some of these stages include instruction fetch, instruction decode, execute, and write back. Some architectures include other stages such as memory access. The design of pipelines is one of the central microarchitectural tasks.

Execution units are also essential to microarchitecture. Execution units include arithmetic logic unit

s (ALU), floating point unit

s (FPU), load/store units, branch prediction, and SIMD

. These units perform the operations or calculations of the processor. The choice of the number of execution units, their latency and throughput is a central microarchitectural design task. The size, latency, throughput and connectivity of memories within the system are also microarchitectural decisions.

System-level design decisions such as whether or not to include peripheral

s, such as memory controller

s, can be considered part of the microarchitectural design process. This includes decisions on the performance-level and connectivity of these peripherals.

Unlike architectural design, where achieving a specific performance level is the main goal, microarchitectural design pays closer attention to other constraints. Since microarchitecture design decisions directly affect what goes into a system, attention must be paid to such issues as:

The instruction cycle is repeated continuously until the power is turned off.

, main memory and non-volatile storage like hard disk

s (where the program instructions and data reside), has always been slower than the processor itself. Step (2) often introduces a lengthy (in CPU terms) delay while the data arrives over the computer bus

. A considerable amount of research has been put into designs that avoid these delays as much as possible. Over the years, a central goal was to execute more instructions in parallel, thus increasing the effective execution speed of a program. These efforts introduced complicated logic and circuit structures. Initially, these techniques could only be implemented on expensive mainframes or supercomputers due to the amount of circuitry needed for these techniques. As semiconductor manufacturing progressed, more and more of these techniques could be implemented on a single semiconductor chip. See Moore's law

.

and EPIC

types have been in fashion. Architectures that are dealing with data parallelism

include SIMD

and Vectors

. Some labels used to denote classes of CPU architectures are not particularly descriptive, especially so the CISC label; many early designs retroactively denoted "CISC

" are in fact significantly simpler than modern RISC processors (in several respects).

However, the choice of instruction set architecture may greatly affect the complexity of implementing high performance devices. The prominent strategy, used to develop the first RISC processors, was to simplify instructions to a minimum of individual semantic complexity combined with high encoding regularity and simplicity. Such uniform instructions were easily fetched, decoded and executed in a pipelined fashion and a simple strategy to reduce the number of logic levels in order to reach high operating frequencies; instruction cache-memories compensated for the higher operating frequency and inherently low code density while large register sets were used to factor out as much of the (slow) memory accesses as possible.

. Early processor designs would carry out all of the steps above for one instruction before moving onto the next. Large portions of the circuitry were left idle at any one step; for instance, the instruction decoding circuitry would be idle during execution and so on.

Pipelines improve performance by allowing a number of instructions to work their way through the processor at the same time. In the same basic example, the processor would start to decode (step 1) a new instruction while the last one was waiting for results. This would allow up to four instructions to be "in flight" at one time, making the processor look four times as fast. Although any one instruction takes just as long to complete (there are still four steps) the CPU as a whole "retires" instructions much faster and can be run at a much higher clock speed.

RISC make pipelines smaller and much easier to construct by cleanly separating each stage of the instruction process and making them take the same amount of time — one cycle. The processor as a whole operates in an assembly line

fashion, with instructions coming in one side and results out the other. Due to the reduced complexity of the Classic RISC pipeline

, the pipelined core and an instruction cache could be placed on the same size die that would otherwise fit the core alone on a CISC design. This was the real reason that RISC was faster. Early designs like the SPARC

and MIPS

often ran over 10 times as fast as Intel and Motorola

CISC solutions at the same clock speed and price.

Pipelines are by no means limited to RISC designs. By 1986 the top-of-the-line VAX implementation (VAX 8800

) was a heavily pipelined design, slightly predating the first commercial MIPS and SPARC designs. Most modern CPUs (even embedded CPUs) are now pipelined, and microcoded CPUs with no pipelining are seen only in the most area-constrained embedded processors. Large CISC machines, from the VAX 8800 to the modern Pentium 4 and Athlon, are implemented with both microcode and pipelines. Improvements in pipelining and caching are the two major microarchitectural advances that have enabled processor performance to keep pace with the circuit technology on which they are based.

on-die. Cache is simply very fast memory, memory that can be accessed in a few cycles as opposed to many needed to "talk" to main memory. The CPU includes a cache controller which automates reading and writing from the cache, if the data is already in the cache it simply "appears", whereas if it is not the processor is "stalled" while the cache controller reads it in.

RISC designs started adding cache in the mid-to-late 1980s, often only 4 KB in total. This number grew over time, and typical CPUs now have at least 512 KB, while more powerful CPUs come with 1 or 2 or even 4, 6, 8 or 12 MB, organized in multiple levels of a memory hierarchy

. Generally speaking, more cache means more performance, due to reduced stalling.

Caches and pipelines were a perfect match for each other. Previously, it didn't make much sense to build a pipeline that could run faster than the access latency of off-chip memory. Using on-chip cache memory instead, meant that a pipeline could run at the speed of the cache access latency, a much smaller length of time. This allowed the operating frequencies of processors to increase at a much faster rate than that of off-chip memory.

Techniques such as branch prediction and speculative execution

are used to lessen these branch penalties. Branch prediction is where the hardware makes educated guesses on whether a particular branch will be taken. In reality one side or the other of the branch will be called much more often than the other. Modern designs have rather complex statistical prediction systems, which watch the results of past branches to predict the future with greater accuracy. The guess allows the hardware to prefetch instructions without waiting for the register read. Speculative execution is a further enhancement in which the code along the predicted path is not just prefetched but also executed before it is known whether the branch should be taken or not. This can yield better performance when the guess is good, with the risk of a huge penalty when the guess is bad because instructions need to be undone.

In the outline above the processor processes parts of a single instruction at a time. Computer programs could be executed faster if multiple instructions were processed simultaneously. This is what superscalar

processors achieve, by replicating functional units such as ALUs. The replication of functional units was only made possible when the die area of a single-issue processor no longer stretched the limits of what could be reliably manufactured. By the late 1980s, superscalar designs started to enter the market place.

In modern designs it is common to find two load units, one store (many instructions have no results to store), two or more integer math units, two or more floating point units, and often a SIMD

unit of some sort. The instruction issue logic grows in complexity by reading in a huge list of instructions from memory and handing them off to the different execution units that are idle at that point. The results are then collected and re-ordered at the end.

allows that ready instruction to be processed while an older instruction waits on the cache, then re-orders the results to make it appear that everything happened in the programmed order. This technique is also used to avoid other operand dependency stalls, such as an instruction awaiting a result from a long latency floating-point operation or other multi-cycle operations.

. One set of instructions is executed first to leave the register to the other set, but if the other set is assigned to a different similar register, both sets of instructions can be executed in parallel.

access times. None of the techniques that exploited instruction-level parallelism within one program could make up for the long stalls that occurred when data had to be fetched from main memory. Additionally, the large transistor counts and high operating frequencies needed for the more advanced ILP techniques required power dissipation levels that could no longer be cheaply cooled. For these reasons, newer generations of computers have started to exploit higher levels of parallelism that exist outside of a single program or program thread

.

This trend is sometimes known as throughput computing. This idea originated in the mainframe market where online transaction processing emphasized not just the execution speed of one transaction, but the capacity to deal with massive numbers of transactions. With transaction-based applications such as network routing and web-site serving greatly increasing in the last decade, the computer industry has re-emphasized capacity and throughput issues.

One technique of how this parallelism is achieved is through multiprocessing

systems, computer systems with multiple CPUs. Once reserved for high-end mainframes

and supercomputer

s, small scale (2-8) multiprocessors servers have become commonplace for the small business market. For large corporations, large scale (16-256) multiprocessors are common. Even personal computer

s with multiple CPUs have appeared since the 1990s.

With further transistor size reductions made available with semiconductor technology advances, multicore CPUs

have appeared where multiple CPUs are implemented on the same silicon chip. Initially used in chips targeting embedded markets, where simpler and smaller CPUs would allow multiple instantiations to fit on one piece of silicon. By 2005, semiconductor technology allowed dual high-end desktop CPUs CMP chips to be manufactured in volume. Some designs, such as Sun Microsystems

' UltraSPARC T1

have reverted back to simpler (scalar, in-order) designs in order to fit more processors on one piece of silicon.

Another technique that has become more popular recently is multithreading. In multithreading, when the processor has to fetch data from slow system memory, instead of stalling for the data to arrive, the processor switches to another program or program thread which is ready to execute. Though this does not speed up a particular program/thread, it increases the overall system throughput by reducing the time the CPU is idle.

Conceptually, multithreading is equivalent to a context switch

at the operating system level. The difference is that a multithreaded CPU can do a thread switch in one CPU cycle instead of the hundreds or thousands of CPU cycles a context switch normally requires. This is achieved by replicating the state hardware (such as the register file

and program counter

) for each active thread.

A further enhancement is simultaneous multithreading

. This technique allows superscalar CPUs to execute instructions from different programs/threads simultaneously in the same cycle.

Computer engineering

Computer engineering, also called computer systems engineering, is a discipline that integrates several fields of electrical engineering and computer science required to develop computer systems. Computer engineers usually have training in electronic engineering, software design, and...

, microarchitecture (sometimes abbreviated to µarch or uarch), also called computer organization, is the way a given instruction set architecture (ISA) is implemented on a processor. A given ISA may be implemented with different microarchitectures. Implementations might vary due to different goals of a given design or due to shifts in technology. Computer architecture

Computer architecture

In computer science and engineering, computer architecture is the practical art of selecting and interconnecting hardware components to create computers that meet functional, performance and cost goals and the formal modelling of those systems....

is the combination of microarchitecture and instruction set design.

Relation to instruction set architecture

The ISA is roughly the same as the programming model of a processor as seen by an assembly languageAssembly language

An assembly language is a low-level programming language for computers, microprocessors, microcontrollers, and other programmable devices. It implements a symbolic representation of the machine codes and other constants needed to program a given CPU architecture...

programmer or compiler writer. The ISA includes the execution model, processor register

Processor register

In computer architecture, a processor register is a small amount of storage available as part of a CPU or other digital processor. Such registers are addressed by mechanisms other than main memory and can be accessed more quickly...

s, address and data formats among other things. The microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA.

The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be everything from single gates and registers, to complete arithmetic logic unit

Arithmetic logic unit

In computing, an arithmetic logic unit is a digital circuit that performs arithmetic and logical operations.The ALU is a fundamental building block of the central processing unit of a computer, and even the simplest microprocessors contain one for purposes such as maintaining timers...

s (ALU)s and even larger elements. These diagrams generally separate the datapath

Datapath

A datapath is a collection of functional units, such as arithmetic logic units or multipliers, that perform data processing operations. Most central processing units consist of a datapath and a control unit, with a large part of the control unit dedicated to regulating the interaction between the...

(where data is placed) and the control path (which can be said to steer the data).

Each microarchitectural element is in turn represented by a schematic

Schematic

A schematic diagram represents the elements of a system using abstract, graphic symbols rather than realistic pictures. A schematic usually omits all details that are not relevant to the information the schematic is intended to convey, and may add unrealistic elements that aid comprehension...

describing the interconnections of logic gate

Logic gate

A logic gate is an idealized or physical device implementing a Boolean function, that is, it performs a logical operation on one or more logic inputs and produces a single logic output. Depending on the context, the term may refer to an ideal logic gate, one that has for instance zero rise time and...

s used to implement it.

Each logic gate is in turn represented by a circuit diagram

Circuit diagram

A circuit diagram is a simplified conventional graphical representation of an electrical circuit...

describing the connections of the transistors used to implement it in some particular logic family

Logic family

In computer engineering, a logic family may refer to one of two related concepts. A logic family of monolithic digital integrated circuit devices is a group of electronic logic gates constructed using one of several different designs, usually with compatible logic levels and power supply...

.

Machines with different microarchitectures may have the same instruction set architecture, and thus be capable of executing the same programs. New microarchitectures and/or circuitry solutions, along with advances in semiconductor manufacturing, are what allows newer generations of processors to achieve higher performance while using the same ISA.

In principle, a single microarchitecture could execute several different ISAs with only minor changes to the microcode.

Aspects of microarchitecture

Instruction pipeline

An instruction pipeline is a technique used in the design of computers and other digital electronic devices to increase their instruction throughput ....

datapath

Datapath

A datapath is a collection of functional units, such as arithmetic logic units or multipliers, that perform data processing operations. Most central processing units consist of a datapath and a control unit, with a large part of the control unit dedicated to regulating the interaction between the...

is the most commonly used datapath design in microarchitecture today. This technique is used in most modern microprocessors, microcontroller

Microcontroller

A microcontroller is a small computer on a single integrated circuit containing a processor core, memory, and programmable input/output peripherals. Program memory in the form of NOR flash or OTP ROM is also often included on chip, as well as a typically small amount of RAM...

s, and DSPs

Digital signal processor

A digital signal processor is a specialized microprocessor with an architecture optimized for the fast operational needs of digital signal processing.-Typical characteristics:...

. The pipelined architecture allows multiple instructions to overlap in execution, much like an assembly line. The pipeline includes several different stages which are fundamental in microarchitecture designs. Some of these stages include instruction fetch, instruction decode, execute, and write back. Some architectures include other stages such as memory access. The design of pipelines is one of the central microarchitectural tasks.

Execution units are also essential to microarchitecture. Execution units include arithmetic logic unit

Arithmetic logic unit

In computing, an arithmetic logic unit is a digital circuit that performs arithmetic and logical operations.The ALU is a fundamental building block of the central processing unit of a computer, and even the simplest microprocessors contain one for purposes such as maintaining timers...

s (ALU), floating point unit

Floating point unit

A floating-point unit is a part of a computer system specially designed to carry out operations on floating point numbers. Typical operations are addition, subtraction, multiplication, division, and square root...

s (FPU), load/store units, branch prediction, and SIMD

SIMD

Single instruction, multiple data , is a class of parallel computers in Flynn's taxonomy. It describes computers with multiple processing elements that perform the same operation on multiple data simultaneously...

. These units perform the operations or calculations of the processor. The choice of the number of execution units, their latency and throughput is a central microarchitectural design task. The size, latency, throughput and connectivity of memories within the system are also microarchitectural decisions.

System-level design decisions such as whether or not to include peripheral

Peripheral

A peripheral is a device attached to a host computer, but not part of it, and is more or less dependent on the host. It expands the host's capabilities, but does not form part of the core computer architecture....

s, such as memory controller

Memory controller

The memory controller is a digital circuit which manages the flow of data going to and from the main memory. It can be a separate chip or integrated into another chip, such as on the die of a microprocessor...

s, can be considered part of the microarchitectural design process. This includes decisions on the performance-level and connectivity of these peripherals.

Unlike architectural design, where achieving a specific performance level is the main goal, microarchitectural design pays closer attention to other constraints. Since microarchitecture design decisions directly affect what goes into a system, attention must be paid to such issues as:

- Chip area/cost

- Power consumption

- Logic complexity

- Ease of connectivity

- Manufacturability

- Ease of debugging

- Testability

Instruction cycle

In general, all CPUs, single-chip microprocessors or multi-chip implementations run programs by performing the following steps:- Read an instruction and decode it

- Find any associated data that is needed to process the instruction

- Process the instruction

- Write the results out

The instruction cycle is repeated continuously until the power is turned off.

Increasing execution speed

Complicating this simple-looking series of steps is the fact that the memory hierarchy, which includes cachingCache

In computer engineering, a cache is a component that transparently stores data so that future requests for that data can be served faster. The data that is stored within a cache might be values that have been computed earlier or duplicates of original values that are stored elsewhere...

, main memory and non-volatile storage like hard disk

Hard disk

A hard disk drive is a non-volatile, random access digital magnetic data storage device. It features rotating rigid platters on a motor-driven spindle within a protective enclosure. Data is magnetically read from and written to the platter by read/write heads that float on a film of air above the...

s (where the program instructions and data reside), has always been slower than the processor itself. Step (2) often introduces a lengthy (in CPU terms) delay while the data arrives over the computer bus

Computer bus

In computer architecture, a bus is a subsystem that transfers data between components inside a computer, or between computers.Early computer buses were literally parallel electrical wires with multiple connections, but the term is now used for any physical arrangement that provides the same...

. A considerable amount of research has been put into designs that avoid these delays as much as possible. Over the years, a central goal was to execute more instructions in parallel, thus increasing the effective execution speed of a program. These efforts introduced complicated logic and circuit structures. Initially, these techniques could only be implemented on expensive mainframes or supercomputers due to the amount of circuitry needed for these techniques. As semiconductor manufacturing progressed, more and more of these techniques could be implemented on a single semiconductor chip. See Moore's law

Moore's Law

Moore's law describes a long-term trend in the history of computing hardware: the number of transistors that can be placed inexpensively on an integrated circuit doubles approximately every two years....

.

Instruction set choice

Instruction sets have shifted over the years, from originally very simple to sometimes very complex (in various respects). In recent years, load-store architectures, VLIWVery long instruction word

Very long instruction word or VLIW refers to a CPU architecture designed to take advantage of instruction level parallelism . A processor that executes every instruction one after the other may use processor resources inefficiently, potentially leading to poor performance...

and EPIC

Explicitly Parallel Instruction Computing

Explicitly parallel instruction computing is a term coined in 1997 by the HP–Intel alliance to describe a computing paradigm that researchers had been investigating since the early 1980s. This paradigm is also called Independence architectures...

types have been in fashion. Architectures that are dealing with data parallelism

Data parallelism

Data parallelism is a form of parallelization of computing across multiple processors in parallel computing environments. Data parallelism focuses on distributing the data across different parallel computing nodes...

include SIMD

SIMD

Single instruction, multiple data , is a class of parallel computers in Flynn's taxonomy. It describes computers with multiple processing elements that perform the same operation on multiple data simultaneously...

and Vectors

Vector processor

A vector processor, or array processor, is a central processing unit that implements an instruction set containing instructions that operate on one-dimensional arrays of data called vectors. This is in contrast to a scalar processor, whose instructions operate on single data items...

. Some labels used to denote classes of CPU architectures are not particularly descriptive, especially so the CISC label; many early designs retroactively denoted "CISC

Complex instruction set computer

A complex instruction set computer , is a computer where single instructions can execute several low-level operations and/or are capable of multi-step operations or addressing modes within single instructions...

" are in fact significantly simpler than modern RISC processors (in several respects).

However, the choice of instruction set architecture may greatly affect the complexity of implementing high performance devices. The prominent strategy, used to develop the first RISC processors, was to simplify instructions to a minimum of individual semantic complexity combined with high encoding regularity and simplicity. Such uniform instructions were easily fetched, decoded and executed in a pipelined fashion and a simple strategy to reduce the number of logic levels in order to reach high operating frequencies; instruction cache-memories compensated for the higher operating frequency and inherently low code density while large register sets were used to factor out as much of the (slow) memory accesses as possible.

Instruction pipelining

One of the first, and most powerful, techniques to improve performance is the use of the instruction pipelineInstruction pipeline

An instruction pipeline is a technique used in the design of computers and other digital electronic devices to increase their instruction throughput ....

. Early processor designs would carry out all of the steps above for one instruction before moving onto the next. Large portions of the circuitry were left idle at any one step; for instance, the instruction decoding circuitry would be idle during execution and so on.

Pipelines improve performance by allowing a number of instructions to work their way through the processor at the same time. In the same basic example, the processor would start to decode (step 1) a new instruction while the last one was waiting for results. This would allow up to four instructions to be "in flight" at one time, making the processor look four times as fast. Although any one instruction takes just as long to complete (there are still four steps) the CPU as a whole "retires" instructions much faster and can be run at a much higher clock speed.

RISC make pipelines smaller and much easier to construct by cleanly separating each stage of the instruction process and making them take the same amount of time — one cycle. The processor as a whole operates in an assembly line

Assembly line

An assembly line is a manufacturing process in which parts are added to a product in a sequential manner using optimally planned logistics to create a finished product much faster than with handcrafting-type methods...

fashion, with instructions coming in one side and results out the other. Due to the reduced complexity of the Classic RISC pipeline

Classic RISC pipeline

In the history of computer hardware, some early reduced instruction set computer central processing units used a very similar architectural solution, now called a classic RISC pipeline. Those CPUs were: MIPS, SPARC, Motorola 88000, and later DLX....

, the pipelined core and an instruction cache could be placed on the same size die that would otherwise fit the core alone on a CISC design. This was the real reason that RISC was faster. Early designs like the SPARC

SPARC

SPARC is a RISC instruction set architecture developed by Sun Microsystems and introduced in mid-1987....

and MIPS

MIPS architecture

MIPS is a reduced instruction set computer instruction set architecture developed by MIPS Technologies . The early MIPS architectures were 32-bit, and later versions were 64-bit...

often ran over 10 times as fast as Intel and Motorola

Motorola

Motorola, Inc. was an American multinational telecommunications company based in Schaumburg, Illinois, which was eventually divided into two independent public companies, Motorola Mobility and Motorola Solutions on January 4, 2011, after losing $4.3 billion from 2007 to 2009...

CISC solutions at the same clock speed and price.

Pipelines are by no means limited to RISC designs. By 1986 the top-of-the-line VAX implementation (VAX 8800

VAX 8000

The VAX 8000 was a family of minicomputers developed and manufactured by Digital Equipment Corporation using processors implementing the VAX instruction set architecture .- VAX 8600 :...

) was a heavily pipelined design, slightly predating the first commercial MIPS and SPARC designs. Most modern CPUs (even embedded CPUs) are now pipelined, and microcoded CPUs with no pipelining are seen only in the most area-constrained embedded processors. Large CISC machines, from the VAX 8800 to the modern Pentium 4 and Athlon, are implemented with both microcode and pipelines. Improvements in pipelining and caching are the two major microarchitectural advances that have enabled processor performance to keep pace with the circuit technology on which they are based.

Cache

It was not long before improvements in chip manufacturing allowed for even more circuitry to be placed on the die, and designers started looking for ways to use it. One of the most common was to add an ever-increasing amount of cache memoryCPU cache

A CPU cache is a cache used by the central processing unit of a computer to reduce the average time to access memory. The cache is a smaller, faster memory which stores copies of the data from the most frequently used main memory locations...

on-die. Cache is simply very fast memory, memory that can be accessed in a few cycles as opposed to many needed to "talk" to main memory. The CPU includes a cache controller which automates reading and writing from the cache, if the data is already in the cache it simply "appears", whereas if it is not the processor is "stalled" while the cache controller reads it in.

RISC designs started adding cache in the mid-to-late 1980s, often only 4 KB in total. This number grew over time, and typical CPUs now have at least 512 KB, while more powerful CPUs come with 1 or 2 or even 4, 6, 8 or 12 MB, organized in multiple levels of a memory hierarchy

Memory hierarchy

The term memory hierarchy is used in the theory of computation when discussing performance issues in computer architectural design, algorithm predictions, and the lower level programming constructs such as involving locality of reference. A 'memory hierarchy' in computer storage distinguishes each...

. Generally speaking, more cache means more performance, due to reduced stalling.

Caches and pipelines were a perfect match for each other. Previously, it didn't make much sense to build a pipeline that could run faster than the access latency of off-chip memory. Using on-chip cache memory instead, meant that a pipeline could run at the speed of the cache access latency, a much smaller length of time. This allowed the operating frequencies of processors to increase at a much faster rate than that of off-chip memory.

Branch prediction

One barrier to achieving higher performance through instruction-level parallelism stems from pipeline stalls and flushes due to branches. Normally, whether a conditional branch will be taken isn't known until late in the pipeline as conditional branches depend on results coming from a register. From the time that the processor's instruction decoder has figured out that it has encountered a conditional branch instruction to the time that the deciding register value can be read out, the pipeline needs to be stalled for several cycles, or if it's not and the branch is taken, the pipeline needs to be flushed. As clock speeds increase the depth of the pipeline increases with it, and some modern processors may have 20 stages or more. On average, every fifth instruction executed is a branch, so without any intervention, that's a high amount of stalling.Techniques such as branch prediction and speculative execution

Speculative execution

Speculative execution in computer systems is doing work, the result of which may not be needed. This performance optimization technique is used in pipelined processors and other systems.-Main idea:...

are used to lessen these branch penalties. Branch prediction is where the hardware makes educated guesses on whether a particular branch will be taken. In reality one side or the other of the branch will be called much more often than the other. Modern designs have rather complex statistical prediction systems, which watch the results of past branches to predict the future with greater accuracy. The guess allows the hardware to prefetch instructions without waiting for the register read. Speculative execution is a further enhancement in which the code along the predicted path is not just prefetched but also executed before it is known whether the branch should be taken or not. This can yield better performance when the guess is good, with the risk of a huge penalty when the guess is bad because instructions need to be undone.

Superscalar

Even with all of the added complexity and gates needed to support the concepts outlined above, improvements in semiconductor manufacturing soon allowed even more logic gates to be used.In the outline above the processor processes parts of a single instruction at a time. Computer programs could be executed faster if multiple instructions were processed simultaneously. This is what superscalar

Superscalar

A superscalar CPU architecture implements a form of parallelism called instruction level parallelism within a single processor. It therefore allows faster CPU throughput than would otherwise be possible at a given clock rate...

processors achieve, by replicating functional units such as ALUs. The replication of functional units was only made possible when the die area of a single-issue processor no longer stretched the limits of what could be reliably manufactured. By the late 1980s, superscalar designs started to enter the market place.

In modern designs it is common to find two load units, one store (many instructions have no results to store), two or more integer math units, two or more floating point units, and often a SIMD

SIMD

Single instruction, multiple data , is a class of parallel computers in Flynn's taxonomy. It describes computers with multiple processing elements that perform the same operation on multiple data simultaneously...

unit of some sort. The instruction issue logic grows in complexity by reading in a huge list of instructions from memory and handing them off to the different execution units that are idle at that point. The results are then collected and re-ordered at the end.

Out-of-order execution

The addition of caches reduces the frequency or duration of stalls due to waiting for data to be fetched from the memory hierarchy, but does not get rid of these stalls entirely. In early designs a cache miss would force the cache controller to stall the processor and wait. Of course there may be some other instruction in the program whose data is available in the cache at that point. Out-of-order executionOut-of-order execution

In computer engineering, out-of-order execution is a paradigm used in most high-performance microprocessors to make use of instruction cycles that would otherwise be wasted by a certain type of costly delay...

allows that ready instruction to be processed while an older instruction waits on the cache, then re-orders the results to make it appear that everything happened in the programmed order. This technique is also used to avoid other operand dependency stalls, such as an instruction awaiting a result from a long latency floating-point operation or other multi-cycle operations.

Register renaming

Register renaming refers to a technique used to avoid unnecessary serialized execution of program instructions because of the reuse of the same registers by those instructions. Suppose we have two groups of instruction that will use the same registerProcessor register

In computer architecture, a processor register is a small amount of storage available as part of a CPU or other digital processor. Such registers are addressed by mechanisms other than main memory and can be accessed more quickly...

. One set of instructions is executed first to leave the register to the other set, but if the other set is assigned to a different similar register, both sets of instructions can be executed in parallel.

Multiprocessing and multithreading

Computer architects have become stymied by the growing mismatch in CPU operating frequencies and DRAMDynamic random access memory

Dynamic random-access memory is a type of random-access memory that stores each bit of data in a separate capacitor within an integrated circuit. The capacitor can be either charged or discharged; these two states are taken to represent the two values of a bit, conventionally called 0 and 1...

access times. None of the techniques that exploited instruction-level parallelism within one program could make up for the long stalls that occurred when data had to be fetched from main memory. Additionally, the large transistor counts and high operating frequencies needed for the more advanced ILP techniques required power dissipation levels that could no longer be cheaply cooled. For these reasons, newer generations of computers have started to exploit higher levels of parallelism that exist outside of a single program or program thread

Thread (computer science)

In computer science, a thread of execution is the smallest unit of processing that can be scheduled by an operating system. The implementation of threads and processes differs from one operating system to another, but in most cases, a thread is contained inside a process...

.

This trend is sometimes known as throughput computing. This idea originated in the mainframe market where online transaction processing emphasized not just the execution speed of one transaction, but the capacity to deal with massive numbers of transactions. With transaction-based applications such as network routing and web-site serving greatly increasing in the last decade, the computer industry has re-emphasized capacity and throughput issues.

One technique of how this parallelism is achieved is through multiprocessing

Multiprocessing

Multiprocessing is the use of two or more central processing units within a single computer system. The term also refers to the ability of a system to support more than one processor and/or the ability to allocate tasks between them...

systems, computer systems with multiple CPUs. Once reserved for high-end mainframes

Mainframe computer

Mainframes are powerful computers used primarily by corporate and governmental organizations for critical applications, bulk data processing such as census, industry and consumer statistics, enterprise resource planning, and financial transaction processing.The term originally referred to the...

and supercomputer

Supercomputer

A supercomputer is a computer at the frontline of current processing capacity, particularly speed of calculation.Supercomputers are used for highly calculation-intensive tasks such as problems including quantum physics, weather forecasting, climate research, molecular modeling A supercomputer is a...

s, small scale (2-8) multiprocessors servers have become commonplace for the small business market. For large corporations, large scale (16-256) multiprocessors are common. Even personal computer

Personal computer

A personal computer is any general-purpose computer whose size, capabilities, and original sales price make it useful for individuals, and which is intended to be operated directly by an end-user with no intervening computer operator...

s with multiple CPUs have appeared since the 1990s.

With further transistor size reductions made available with semiconductor technology advances, multicore CPUs

Multi-core (computing)

A multi-core processor is a single computing component with two or more independent actual processors , which are the units that read and execute program instructions...

have appeared where multiple CPUs are implemented on the same silicon chip. Initially used in chips targeting embedded markets, where simpler and smaller CPUs would allow multiple instantiations to fit on one piece of silicon. By 2005, semiconductor technology allowed dual high-end desktop CPUs CMP chips to be manufactured in volume. Some designs, such as Sun Microsystems

Sun Microsystems

Sun Microsystems, Inc. was a company that sold :computers, computer components, :computer software, and :information technology services. Sun was founded on February 24, 1982...

' UltraSPARC T1

UltraSPARC T1

|right|262px|UltraSPARC T1 processorSun Microsystems' UltraSPARC T1 microprocessor, known until its 14 November 2005 announcement by its development codename "Niagara", is a multithreading, multicore CPU...

have reverted back to simpler (scalar, in-order) designs in order to fit more processors on one piece of silicon.

Another technique that has become more popular recently is multithreading. In multithreading, when the processor has to fetch data from slow system memory, instead of stalling for the data to arrive, the processor switches to another program or program thread which is ready to execute. Though this does not speed up a particular program/thread, it increases the overall system throughput by reducing the time the CPU is idle.

Conceptually, multithreading is equivalent to a context switch

Context switch

A context switch is the computing process of storing and restoring the state of a CPU so that execution can be resumed from the same point at a later time. This enables multiple processes to share a single CPU. The context switch is an essential feature of a multitasking operating system...

at the operating system level. The difference is that a multithreaded CPU can do a thread switch in one CPU cycle instead of the hundreds or thousands of CPU cycles a context switch normally requires. This is achieved by replicating the state hardware (such as the register file

Register file

A register file is an array of processor registers in a central processing unit . Modern integrated circuit-based register files are usually implemented by way of fast static RAMs with multiple ports...

and program counter

Program counter

The program counter , commonly called the instruction pointer in Intel x86 microprocessors, and sometimes called the instruction address register, or just part of the instruction sequencer in some computers, is a processor register that indicates where the computer is in its instruction sequence...

) for each active thread.

A further enhancement is simultaneous multithreading

Simultaneous multithreading

Simultaneous multithreading, often abbreviated as SMT, is a technique for improving the overall efficiency of superscalar CPUs with hardware multithreading...

. This technique allows superscalar CPUs to execute instructions from different programs/threads simultaneously in the same cycle.

See also

- MicroprocessorMicroprocessorA microprocessor incorporates the functions of a computer's central processing unit on a single integrated circuit, or at most a few integrated circuits. It is a multipurpose, programmable device that accepts digital data as input, processes it according to instructions stored in its memory, and...

- MicrocontrollerMicrocontrollerA microcontroller is a small computer on a single integrated circuit containing a processor core, memory, and programmable input/output peripherals. Program memory in the form of NOR flash or OTP ROM is also often included on chip, as well as a typically small amount of RAM...

- Digital signal processorDigital signal processorA digital signal processor is a specialized microprocessor with an architecture optimized for the fast operational needs of digital signal processing.-Typical characteristics:...

(DSP) - CPU designCPU designCPU design is the design engineering task of creating a central processing unit , a component of computer hardware. It is a subfield of electronics engineering and computer engineering.- Overview :CPU design focuses on these areas:...

- Hardware description languageHardware description languageIn electronics, a hardware description language or HDL is any language from a class of computer languages, specification languages, or modeling languages for formal description and design of electronic circuits, and most-commonly, digital logic...

(HDL) - Hardware architectureHardware architectureIn engineering, hardware architecture refers to the identification of a system's physical components and their interrelationships. This description, often called a hardware design model, allows hardware designers to understand how their components fit into a system architecture and provides...

- Harvard architectureHarvard architectureThe Harvard architecture is a computer architecture with physically separate storage and signal pathways for instructions and data. The term originated from the Harvard Mark I relay-based computer, which stored instructions on punched tape and data in electro-mechanical counters...

- von Neumann architectureVon Neumann architectureThe term Von Neumann architecture, aka the Von Neumann model, derives from a computer architecture proposal by the mathematician and early computer scientist John von Neumann and others, dated June 30, 1945, entitled First Draft of a Report on the EDVAC...

- Multi-core (computing)Multi-core (computing)A multi-core processor is a single computing component with two or more independent actual processors , which are the units that read and execute program instructions...

- DatapathDatapathA datapath is a collection of functional units, such as arithmetic logic units or multipliers, that perform data processing operations. Most central processing units consist of a datapath and a control unit, with a large part of the control unit dedicated to regulating the interaction between the...

- Dataflow architectureDataflow architectureDataflow architecture is a computer architecture that directly contrasts the traditional von Neumann architecture or control flow architecture. Dataflow architectures do not have a program counter, or the executability and execution of instructions is solely determined based on the availability of...

- Very-large-scale integrationVery-large-scale integrationVery-large-scale integration is the process of creating integrated circuits by combining thousands of transistors into a single chip. VLSI began in the 1970s when complex semiconductor and communication technologies were being developed. The microprocessor is a VLSI device.The first semiconductor...

(VLSI) - VHDL

- VerilogVerilogIn the semiconductor and electronic design industry, Verilog is a hardware description language used to model electronic systems. Verilog HDL, not to be confused with VHDL , is most commonly used in the design, verification, and implementation of digital logic chips at the register-transfer level...

- Stream processingStream processingStream processing is a computer programming paradigm, related to SIMD , that allows some applications to more easily exploit a limited form of parallel processing...

- Instruction level parallelismInstruction level parallelismInstruction-level parallelism is a measure of how many of the operations in a computer program can be performed simultaneously. Consider the following program: 1. e = a + b 2. f = c + d 3. g = e * f...

(ILP)