Defragmentation

In the maintenance of file systems, defragmentation is a process that reduces the amount of fragmentation. It does this by physically organizing the contents of the mass storage device used to store files into the smallest number of contiguous regions (fragments). It also attempts to create larger regions of free space using compaction to impede the return of fragmentation. Some defragmentation utilities try to keep smaller files within a single directory together, as they are often accessed in sequence.

Defragmentation is advantageous and relevant to file systems on electromechanical disk drives. Moving the hard drive's read/write heads over different areas of the disk to access a fragmented file is slower than reading the entire contents of a non-fragmented file sequentially, without moving the heads to seek other fragments.

Causes of fragmentation

Fragmentation occurs when the file system cannot or will not allocate enough contiguous space to store a complete file as a unit, but instead puts parts of it in gaps between other files (usually those gaps exist because they formerly held a file that the operating system has subsequently deleted or because the file system allocated excess space for the file in the first place). Larger files and greater numbers of files also contribute to fragmentation and consequent performance loss. Defragmentation attempts to alleviate these problems.

Example

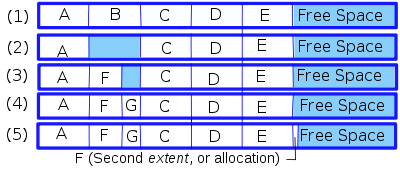

An otherwise blank disk has five files, A through E, each using 10 blocks of space (for this section, a block is an allocation unit of that system; it could be 1 KB, 100 KB or 1 MB and is not any specific size). On a blank disk, all of these files will be allocated one after the other (example (1) in the image). If file B is deleted, there are two options: leave the space for B empty and use it again later, or move all the files after B so that the empty space is at the end. Moving the files could be time consuming if there were hundreds or thousands of them, so in general the empty space is simply left there, marked in a table as available for later use, then used again as needed (example (2) in the image). When a new file, F, is allocated requiring six blocks of space, it can be placed into the first six blocks of the space formerly holding file B, and the four blocks following it will remain available (example (3) in the image). If another new file, G, is added and needs only four blocks, it could then occupy the space after F and before C (example (4) in the image). When F needs to be expanded, since the space immediately following it is no longer available, there are two options:

- Move the file F to where it can be created as one contiguous file of the new, larger size. Relocating the file may not be possible as the file may be larger than any one contiguous space available. The file conceivably could be so large the operation would take an undesirably long period of time. Some filesystems relocate files as a background task with low priority.

- Add a new block somewhere else and indicate that F has a second extent (example (5) in the image). Repeat this hundreds of times and the file system has many free segments in many places, and many files may be spread over many extents. When a new file (or a file which has been extended) has to be placed in a large number of extents, access time for that file may become excessively long.
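The walkthrough above can be replayed with a small simulation. This is a minimal sketch, not how any particular file system works: the 60-block disk size, the "lowest-numbered free block first" policy, and the helper names `allocate`, `delete` and `extents` are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple

Disk = List[Optional[str]]  # one entry per block; None marks a free block


def allocate(disk: Disk, name: str, blocks: int) -> None:
    """Give `name` the lowest-numbered free blocks, contiguous or not (assumed policy)."""
    free = [i for i, owner in enumerate(disk) if owner is None]
    if len(free) < blocks:
        raise ValueError("not enough free space")
    for i in free[:blocks]:
        disk[i] = name


def delete(disk: Disk, name: str) -> None:
    """Mark every block of `name` as free; the other files stay where they are."""
    for i, owner in enumerate(disk):
        if owner == name:
            disk[i] = None


def extents(disk: Disk, name: str) -> List[Tuple[int, int]]:
    """Return (start, length) for each contiguous run of blocks owned by `name`."""
    runs: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, owner in enumerate(disk + [None]):  # sentinel closes a run at the end
        if owner == name and start is None:
            start = i
        elif owner != name and start is not None:
            runs.append((start, i - start))
            start = None
    return runs


if __name__ == "__main__":
    disk: Disk = [None] * 60          # hypothetical 60-block disk
    for name in "ABCDE":              # (1) five files of 10 blocks each
        allocate(disk, name, 10)
    delete(disk, "B")                 # (2) B's blocks become a gap
    allocate(disk, "F", 6)            # (3) F takes the first six blocks of the gap
    allocate(disk, "G", 4)            # (4) G fills the rest of the gap
    allocate(disk, "F", 1)            # (5) F grows by one block, which lands elsewhere
    print(extents(disk, "F"))         # [(10, 6), (50, 1)]
```

After step (5), F occupies two extents even though every other file is still contiguous, which is the situation the second option describes.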

The process of creating, deleting and expanding files may be referred to as churn, and can occur both at the level of the root file system and in subdirectories. Fragmentation occurs not only at the level of individual files, but also when different files in a directory (and possibly its subdirectories) that are often read in sequence start to "drift apart" as a result of churn.

A defragmentation program must move files around within the available free space to undo fragmentation. This is an intensive operation and cannot be performed on a file system with no free space. Performance during this process will be severely degraded. Depending on the algorithm used, it may be advantageous to perform multiple passes. The reorganization involved in defragmentation does not change the logical location of the files (defined as their location within the directory structure).
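As a rough illustration of what such a program aims for, the sketch below computes a target layout in which every file is contiguous and all free space sits at the end, and counts how many blocks would have to be rewritten to reach it. It is a simplification under stated assumptions: the block-list representation and the names `count_fragments`, `defragment` and `blocks_to_write` are hypothetical, and a real defragmenter has to shuffle blocks in place through the free space rather than build a fresh copy.

```python
from typing import List, Optional

Disk = List[Optional[str]]  # one entry per block; None marks a free block


def count_fragments(disk: Disk, name: str) -> int:
    """Number of contiguous runs of blocks owned by `name`."""
    return sum(
        1
        for i, owner in enumerate(disk)
        if owner == name and (i == 0 or disk[i - 1] != name)
    )


def defragment(disk: Disk) -> Disk:
    """Return a layout with each file contiguous and free space compacted at the end.

    Files keep the order in which they first appear on the disk.
    """
    order: List[str] = []
    for owner in disk:
        if owner is not None and owner not in order:
            order.append(owner)
    packed: Disk = []
    for name in order:
        packed.extend([name] * disk.count(name))
    packed.extend([None] * (len(disk) - len(packed)))
    return packed


def blocks_to_write(old: Disk, new: Disk) -> int:
    """Blocks whose contents differ in the new layout and therefore must be written."""
    return sum(1 for a, b in zip(old, new) if b is not None and a != b)


if __name__ == "__main__":
    before: Disk = ["A", "A", "F", "F", "G", "C", "C", None, "F", None]
    after = defragment(before)
    print(count_fragments(before, "F"), count_fragments(after, "F"))
    print(blocks_to_write(before, after))
```

Directory entries are untouched in this model: only block positions change, which mirrors the point that the logical location of files stays the same.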

Common countermeasures

Partitioning

A common strategy to optimize defragmentation and to reduce the impact of fragmentation is to partition the hard disk(s) in a way that separates partitions of the file system that experience many more reads than writes from the more volatile zones where files are created and deleted frequently. The directories that contain the users' profiles are modified constantly (especially with the Temp directory and web browser cache creating thousands of files that are deleted in a few days). If files from user profiles are held on a dedicated partition (as is commonly done on UNIX systems), the defragmenter runs better since it does not need to deal with all the static files from other directories. For partitions with relatively little write activity, defragmentation performance greatly improves after the first defragmentation, since the defragmenter will need to defragment only a small number of new files in the future.

Offline defragmentation

The presence of immovable system files, especially a swap file, can impede defragmentation. These files can be safely moved when the operating system is not in use. For example, ntfsresize moves these files to resize an NTFS partition. The tool PageDefrag could defragment Windows system files such as the swap file and the files that store the Windows registry by running at boot time before the GUI is loaded. Since Windows Vista, the feature is not fully supported and has not been updated.

If the NTFS Master File Table (MFT) must grow after the partition was formatted, it may become fragmented, and in early versions of Windows it could not be safely defragmented while the partition was in use. Versions of Windows since XP provide API support for this, and an increasing number of defragmentation programs are able to defragment the MFT on those versions.

User and performance issues

In a wide range of modern multi-user operating systems, an ordinary user cannot defragment the system disks since superuser (or "Administrator") access is required to move system files. Additionally, file systems such as NTFS are designed to decrease the likelihood of fragmentation. Improvements in modern hard drives such as RAM cache, faster platter rotation speed, command queuing (SCSI TCQ/SATA NCQ), and greater data density reduce the negative impact of fragmentation on system performance to some degree, though increases in commonly used data quantities offset those benefits. However, modern systems profit enormously from the huge disk capacities currently available, since partially filled disks fragment much less than full disks, and on a high-capacity HDD, the same partition occupies a smaller range of cylinders, resulting in faster seeks. However, the average access time can never be lower than a half rotation of the platters, and platter rotation (measured in rpm) is the speed characteristic of HDDs which has experienced the slowest growth over the decades (compared to data transfer rate and seek time), so minimizing the number of seeks remains beneficial in most storage-heavy applications. Defragmentation is just that: ensuring that there is at most one seek per file, counting only the seeks to non-adjacent tracks.

When reading data from a conventional electromechanical hard disk drive, the disk controller must first position the head, relatively slowly, to the track where a given fragment resides, and then wait while the disk platter rotates until the fragment reaches the head.
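The half-rotation floor mentioned above is simple arithmetic, and the sketch below makes it concrete. It assumes a deliberately crude model in which every fragment costs one head seek plus, on average, half a platter revolution; the function names and the 9 ms seek time used in the usage example are illustrative assumptions, not measurements of any drive.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average wait for data to rotate under the head: half a revolution, in ms."""
    ms_per_revolution = 60_000.0 / rpm
    return 0.5 * ms_per_revolution


def positioning_time_ms(fragments: int, seek_ms: float, rpm: float) -> float:
    """Total positioning time if each fragment costs one seek plus half a rotation.

    Transfer time is ignored; `seek_ms` is an assumed average seek time.
    """
    return fragments * (seek_ms + avg_rotational_latency_ms(rpm))


if __name__ == "__main__":
    for rpm in (5400, 7200, 15000):
        print(rpm, round(avg_rotational_latency_ms(rpm), 2))
    # One contiguous file versus the same file split into 100 fragments,
    # with a hypothetical 9 ms average seek on a 15000 rpm drive.
    print(positioning_time_ms(1, 9.0, 15000))
    print(positioning_time_ms(100, 9.0, 15000))
```

Under this model the positioning cost grows linearly with the number of fragments, which is why reducing a file to a single extent pays off on rotating media regardless of transfer rate.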

Since disks based on flash memory have no moving parts, random access of a fragment does not suffer this delay, making defragmentation to optimize access speed unnecessary. Furthermore, since flash memory can be written to only a limited number of times before it fails, defragmentation is actually detrimental.

Windows System Restore points may be deleted during defragmenting/optimizing

Running most defragmenters and optimizers can cause the Microsoft Shadow Copy service to delete some of the oldest restore points, even if the defragmenters/optimizers are built on the Windows API. This is because Shadow Copy keeps track of some movements of big files performed by the defragmenters/optimizers; when the total disk space used by shadow copies would exceed a specified threshold, older restore points are deleted until the usage is back within the limit.

Defragmenting and optimizing

Besides defragmenting program files, the defragmenting tool can also reduce the time it takes to load programs and open files. For example, the Windows 9x defragmenter included the Intel Application Launch Accelerator, which optimized programs on the disk. The outer tracks of a hard disk have a higher transfer rate than the inner tracks, so placing files on the outer tracks increases performance. In addition, the defragmenting tool may also use free space on other partitions or drives to be able to defragment volumes that are low on disk space.

Approach and defragmenters by file-system type

- FAT: MS-DOS 6.x and Windows 9x systems come with a defragmentation utility called Defrag. The DOS version is a limited version of Norton SpeedDisk. The version that came with Windows 9x was licensed from Symantec Corporation, and the version that came with Windows 2000 and above (with the exceptions of Vista, Server 2008, and 7) is licensed from Diskeeper Corporation.

- NTFS: Windows 2000-2003 include a defragmentation tool based on Diskeeper. Newer versions of Windows ship with a Microsoft product. Windows NT 4.0 includes an application programming interface that third-party tools can use to perform defragmentation tasks, but no command-line or graphical tools are included that make use of it. Windows NT 3.51 and below do not include any defragmentation capabilities. A number of free and commercial third-party defragmentation products are available for Microsoft Windows.

- BSD UFS, and particularly FreeBSD, uses an internal reallocator that seeks to reduce fragmentation at the moment the information is written to disk. This effectively controls system degradation after extended use.

- ext2, ext3, and ext4 (Linux): ext2 uses an offline defragmenter called e2defrag, which does not work with its successor ext3. However, other programs, or filesystem-independent ones, may be used to defragment an ext3 filesystem. ext4 is somewhat backward compatible with ext3, and thus has generally the same amount of support from defragmentation programs.

- VxFS has the fsadm utility, which can also perform defragmentation operations.

- JFS has the defragfs utility on IBM operating systems.

- HFS Plus (Mac OS X) introduced in 1998 a number of optimizations to the allocation algorithms in an attempt to defragment files while they are being accessed without a separate defragmenter. If the filesystem becomes fragmented, the only way to defragment it is to use a utility such as Coriolis System's iDefrag, or to wipe the hard drive completely and install the system from scratch.

- WAFL in NetApp's ONTAP 7.2 operating system has a command called reallocate that is designed to defragment large files.

- XFS provides an online defragmentation utility called xfs_fsr.

- SFS processes the defragmentation feature in an almost completely stateless way (apart from the location it is working on), so defragmentation can be stopped and started instantly.

See also

- Comparison of defragmentation software

- Fragmentation (computer)

- File system fragmentation

- List of defragmentation software

- Memory fragmentation

- Virtual disk image

