Database normalization


In the design of a relational database management system (RDBMS), the process of organizing data to minimize redundancy is called normalization. The goal of database normalization is to decompose relations with anomalies in order to produce smaller, well-structured relations. Normalization usually involves dividing large tables into smaller (and less redundant) tables and defining relationships between them. The objective is to isolate data so that additions, deletions, and modifications of a field can be made in just one table and then propagated through the rest of the database via the defined relationships.

Edgar F. Codd, the inventor of the relational model, introduced the concept of normalization and what we now know as the First Normal Form (1NF) in 1970. Codd went on to define the Second Normal Form (2NF) and Third Normal Form (3NF) in 1971, and Codd and Raymond F. Boyce defined the Boyce-Codd Normal Form (BCNF) in 1974. Higher normal forms were defined by other theorists in subsequent years, the most recent being the Sixth Normal Form (6NF) introduced by Chris Date, Hugh Darwen, and Nikos Lorentzos in 2002.

Informally, a relational database table (the computerized representation of a relation) is often described as "normalized" if it is in the Third Normal Form. Most 3NF tables are free of insertion, update, and deletion anomalies, i.e. in most cases 3NF tables adhere to BCNF, 4NF, and 5NF (but typically not 6NF).

A standard piece of database design guidance is that the designer should create a fully normalized design; selective denormalization can subsequently be performed for performance reasons. However, some modeling disciplines, such as the dimensional modeling approach to data warehouse design, explicitly recommend non-normalized designs, i.e. designs that in large part do not adhere to 3NF.



Denormalization

Databases intended for online transaction processing (OLTP) are typically more normalized than databases intended for online analytical processing (OLAP). OLTP applications are characterized by a high volume of small transactions such as updating a sales record at a supermarket checkout counter. The expectation is that each transaction will leave the database in a consistent state. By contrast, databases intended for OLAP operations are primarily "read mostly" databases. OLAP applications tend to extract historical data that has accumulated over a long period of time. For such databases, redundant or "denormalized" data may facilitate business intelligence applications. Specifically, dimensional tables in a star schema often contain denormalized data. The denormalized or redundant data must be carefully controlled during extract, transform, load (ETL) processing, and users should not be permitted to see the data until it is in a consistent state. The normalized alternative to the star schema is the snowflake schema. In many cases, the need for denormalization has waned as computers and RDBMS software have become more powerful, but since data volumes have generally increased along with hardware and software performance, OLAP databases often still use denormalized schemas.

Denormalization is also used to improve performance on smaller computers as in computerized cash-registers and mobile devices, since these may use the data for look-up only (e.g. price lookups). Denormalization may also be used when no RDBMS exists for a platform (such as Palm), or no changes are to be made to the data and a swift response is crucial.

Non-first normal form (NF²)

One way of looking at designs that are not in first normal form is to consider their structured values (such as sets or nested relations) as being specialized types of values (domains), with their own domain-specific languages. However, what is usually meant by non-1NF models is the approach in which the relational model and the languages used to query it are extended with a general mechanism for such structure; for instance, the nested relational model supports the use of relations as domain values, by adding two additional operators (nest and unnest) to the relational algebra that can create and flatten nested relations, respectively.

Consider the following table:

Assume a person has several favourite colours. Obviously, favourite colours consist of a set of colours modeled by the given table. To transform a 1NF into an NF² table a "nest" operator is required which extends the relational algebra of the higher normal forms. Applying the "nest" operator to the 1NF table yields the following NF² table:

To transform this NF² table back into a 1NF an "unnest" operator is required which extends the relational algebra of the higher normal forms. The unnest, in this case, would make "colours" into its own table.
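The nest and unnest operators described above can be sketched in a few lines of Python. This is only an illustration, not a formal definition: relations are modelled as sets of tuples, and the person names and colours are hypothetical sample data, since the original tables are not reproduced here.

```python
def nest(rows):
    """Group flat (person, colour) pairs into (person, set-of-colours) rows."""
    grouped = {}
    for person, colour in rows:
        grouped.setdefault(person, set()).add(colour)
    # frozenset keeps each nested value hashable so rows can live in a set
    return {(person, frozenset(colours)) for person, colours in grouped.items()}


def unnest(nested):
    """Flatten (person, set-of-colours) rows back into (person, colour) pairs."""
    return {(person, colour) for person, colours in nested for colour in colours}


# Hypothetical 1NF table: one row per (person, favourite colour) pair.
flat = {("Bob", "blue"), ("Bob", "red"), ("Jane", "green"), ("Jane", "red")}

nested = nest(flat)          # NF² form: one row per person
round_trip = unnest(nested)  # back to the 1NF form
```

In this sketch, unnesting the nested table gives back the original flat table, which matches the statement that "unnest" undoes "nest".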

Although "unnest" is the mathematical inverse to "nest", the operator "nest" is not always the mathematical inverse of "unnest". Another constraint required is for the operators to be bijective, which is covered by the Partitioned Normal Form (PNF).


Objectives of normalization

A basic objective of the first normal form, defined by Codd in 1970, was to permit data to be queried and manipulated using a "universal data sub-language" grounded in first-order logic. (SQL is an example of such a data sub-language, albeit one that Codd regarded as seriously flawed.)

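Codd stated the objectives of normalization beyond 1NF (First Normal Form), which can be summarized as follows:

- Free the database of modification anomalies
- Minimize redesign when extending the database structure
- Make the data model more informative to users
- Avoid bias towards any particular pattern of querying

The sections below give details of each of these objectives.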

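Free the database of modification anomalies

When an attempt is made to modify (update, insert into, or delete from) a table, undesired side-effects may follow. Not all tables can suffer from these side-effects; rather, the side-effects can only arise in tables that have not been sufficiently normalized. An insufficiently normalized table might have one or more of the following characteristics: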

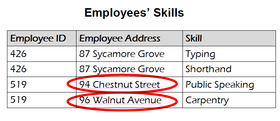

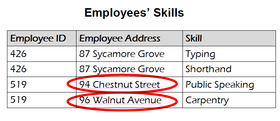

- The same information can be expressed on multiple rows; therefore updates to the table may result in logical inconsistencies. For example, each record in an "Employees' Skills" table might contain an Employee ID, Employee Address, and Skill; thus a change of address for a particular employee will potentially need to be applied to multiple records (one for each of the employee's skills). If the update is not carried through successfully (that is, if the employee's address is updated on some records but not others), then the table is left in an inconsistent state. Specifically, the table provides conflicting answers to the question of what this particular employee's address is. This phenomenon is known as an update anomaly.

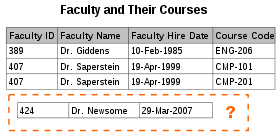

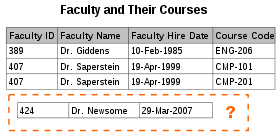

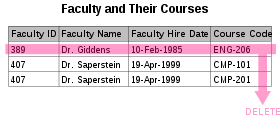

- There are circumstances in which certain facts cannot be recorded at all. For example, each record in a "Faculty and Their Courses" table might contain a Faculty ID, Faculty Name, Faculty Hire Date, and Course Code. Thus we can record the details of any faculty member who teaches at least one course, but we cannot record the details of a newly hired faculty member who has not yet been assigned to teach any courses, except by setting the Course Code to null. This phenomenon is known as an insertion anomaly.

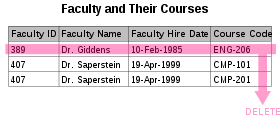

- There are circumstances in which the deletion of data representing certain facts necessitates the deletion of data representing completely different facts. The "Faculty and Their Courses" table described in the previous example suffers from this type of anomaly, for if a faculty member temporarily ceases to be assigned to any courses, we must delete the last of the records on which that faculty member appears, effectively also deleting the faculty member. This phenomenon is known as a deletion anomaly.
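The update anomaly from the first bullet can be reproduced with a small experiment. The following is a minimal sketch using Python's built-in sqlite3 module; the table names, column names, and sample rows are hypothetical and chosen only for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Insufficiently normalized design: the address is repeated on every skill row.
conn.execute(
    "CREATE TABLE employee_skills (employee_id INTEGER, address TEXT, skill TEXT)"
)
conn.executemany(
    "INSERT INTO employee_skills VALUES (?, ?, ?)",
    [
        (426, "87 Sycamore Grove", "Typing"),
        (426, "87 Sycamore Grove", "Shorthand"),
    ],
)

# A partial update touches only one of the two rows, so the table now
# holds two conflicting addresses for the same employee.
conn.execute(
    "UPDATE employee_skills SET address = ? WHERE employee_id = ? AND skill = ?",
    ("23 Hypothetical Road", 426, "Typing"),
)
conflicting = conn.execute(
    "SELECT COUNT(DISTINCT address) FROM employee_skills WHERE employee_id = 426"
).fetchone()[0]

# Normalized design: the address is stored exactly once per employee,
# so a single-row UPDATE cannot leave conflicting copies behind.
conn.execute("CREATE TABLE employees (employee_id INTEGER PRIMARY KEY, address TEXT)")
conn.execute("CREATE TABLE skills (employee_id INTEGER REFERENCES employees, skill TEXT)")
conn.execute("INSERT INTO employees VALUES (426, '87 Sycamore Grove')")
conn.executemany(
    "INSERT INTO skills VALUES (?, ?)", [(426, "Typing"), (426, "Shorthand")]
)
conn.execute(
    "UPDATE employees SET address = ? WHERE employee_id = ?",
    ("23 Hypothetical Road", 426),
)
addresses = conn.execute(
    "SELECT DISTINCT e.address FROM employees e JOIN skills s USING (employee_id)"
).fetchall()
```

In the first design the employee ends up with two distinct addresses; in the second, every skill row sees the same, single address.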

Minimize redesign when extending the database structure

When a fully normalized database structure is extended to allow it to accommodate new types of data, the pre-existing aspects of the database structure can remain largely or entirely unchanged. As a result, applications interacting with the database are minimally affected.

Make the data model more informative to users

Normalized tables, and the relationship between one normalized table and another, mirror real-world concepts and their interrelationships.

Avoid bias towards any particular pattern of querying

Normalized tables are suitable for general-purpose querying. This means any queries against these tables, including future queries whose details cannot be anticipated, are supported. In contrast, tables that are not normalized lend themselves to some types of queries, but not others.

For example, consider an online bookseller whose customers maintain wishlists of books they'd like to have. For the obvious, anticipated query (what books does this customer want?), it's enough to store the customer's wishlist in the table as, say, a homogeneous string of authors and titles.

With this design, though, the database can answer only that one single query. It cannot by itself answer interesting but unanticipated queries: What is the most-wished-for book? Which customers are interested in WWII espionage? How does Lord Byron stack up against his contemporary poets? Answers to these questions must come from special adaptive tools completely separate from the database. One tool might be software written especially to handle such queries. This special adaptive software has just one single purpose: in effect to normalize the non-normalized field.

Unforeseen queries can be answered trivially, and entirely within the database framework, with a normalized table.
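As a rough illustration of this point, the sketch below stores the wishlist in normalized form, with one row per customer and book, using Python's built-in sqlite3 module. The table layout, customer names, and book entries are assumptions made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# One row per (customer, book) instead of one free-text string per customer.
conn.execute("CREATE TABLE wishlist (customer TEXT, author TEXT, title TEXT)")
conn.executemany(
    "INSERT INTO wishlist VALUES (?, ?, ?)",
    [
        ("alice", "Lord Byron", "Don Juan"),
        ("bob", "Lord Byron", "Don Juan"),
        ("bob", "John Keats", "Endymion"),
    ],
)

# The anticipated query: what books does this customer want?
bobs_books = conn.execute(
    "SELECT title FROM wishlist WHERE customer = ? ORDER BY title", ("bob",)
).fetchall()

# An unanticipated query: what is the most-wished-for book?
most_wished = conn.execute(
    "SELECT title, COUNT(*) AS n FROM wishlist "
    "GROUP BY author, title ORDER BY n DESC LIMIT 1"
).fetchone()
```

Both queries run entirely inside the database; no separate tool is needed to pick authors and titles out of a string.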

Example

Querying and manipulating the data within an unnormalized data structure, such as the following non-1NF representation of customers' credit card transactions, involves more complexity than is really necessary:

| Customer | Transactions (Tr. ID, Date, Amount) |
|---|---|
| Jones | 12890, 14-Oct-2003, −87; 12904, 15-Oct-2003, −50 |
| Wilkins | 12898, 14-Oct-2003, −21 |
| Stevens | 12907, 15-Oct-2003, −18; 14920, 20-Nov-2003, −70; 15003, 27-Nov-2003, −60 |

To each customer there corresponds a repeating group of transactions. The automated evaluation of any query relating to customers' transactions therefore would broadly involve two stages:

- Unpacking one or more customers' groups of transactions allowing the individual transactions in a group to be examined, and

- Deriving a query result based on the results of the first stage

For example, in order to find out the monetary sum of all transactions that occurred in October 2003 for all customers, the system would have to know that it must first unpack the Transactions group of each customer, then sum the Amounts of all transactions thus obtained where the Date of the transaction falls in October 2003.

One of Codd's important insights was that this structural complexity could always be removed completely, leading to much greater power and flexibility in the way queries could be formulated (by users and applications) and evaluated (by the DBMS). The normalized equivalent of the structure above would look like this:

| Customer | Tr. ID | Date | Amount |
|---|---|---|---|
| Jones | 12890 | 14-Oct-2003 | −87 |
| Jones | 12904 | 15-Oct-2003 | −50 |
| Wilkins | 12898 | 14-Oct-2003 | −21 |
| Stevens | 12907 | 15-Oct-2003 | −18 |
| Stevens | 14920 | 20-Nov-2003 | −70 |
| Stevens | 15003 | 27-Nov-2003 | −60 |

Now each row represents an individual credit card transaction, and the DBMS can obtain the answer of interest, simply by finding all rows with a Date falling in October, and summing their Amounts. The data structure places all of the values on an equal footing, exposing each to the DBMS directly, so each can potentially participate directly in queries; whereas in the previous situation some values were embedded in lower-level structures that had to be handled specially. Accordingly, the normalized design lends itself to general-purpose query processing, whereas the unnormalized design does not.
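The October 2003 query can be written directly against the normalized table. The sketch below loads the six rows shown above into an in-memory SQLite database through Python's built-in sqlite3 module; the table and column names are assumptions, and the dates are stored in ISO format (an assumption of this sketch) so that they compare correctly as text.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions "
    "(customer TEXT, tr_id INTEGER, date TEXT, amount INTEGER)"
)
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?, ?)",
    [
        ("Jones", 12890, "2003-10-14", -87),
        ("Jones", 12904, "2003-10-15", -50),
        ("Wilkins", 12898, "2003-10-14", -21),
        ("Stevens", 12907, "2003-10-15", -18),
        ("Stevens", 14920, "2003-11-20", -70),
        ("Stevens", 15003, "2003-11-27", -60),
    ],
)

# No unpacking stage: filter the rows by date and sum their amounts.
october_total = conn.execute(
    "SELECT SUM(amount) FROM transactions "
    "WHERE date >= '2003-10-01' AND date < '2003-11-01'"
).fetchone()[0]
```

With the sample rows, the four October transactions sum to −176.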

Background to normalization: definitions

Functional dependency: In a given table, an attribute Y is said to have a functional dependency on a set of attributes X (written X → Y) if and only if each X value is associated with precisely one Y value. For example, in an "Employee" table that includes the attributes "Employee ID" and "Employee Date of Birth", the functional dependency {Employee ID} → {Employee Date of Birth} would hold. It follows from the previous two sentences that each {Employee ID} is associated with precisely one {Employee Date of Birth}.

Trivial functional dependency: A trivial functional dependency is a functional dependency of an attribute on a superset of itself. {Employee ID, Employee Address} → {Employee Address} is trivial, as is {Employee Address} → {Employee Address}.

Full functional dependency: An attribute is fully functionally dependent on a set of attributes X if it is:

- functionally dependent on X, and

- not functionally dependent on any proper subset of X. {Employee Address} has a functional dependency on {Employee ID, Skill}, but not a full functional dependency, because it is also dependent on {Employee ID}.
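The definitions of functional dependency and full functional dependency can be checked mechanically against sample data. The sketch below is an illustration only: the helper names `holds` and `is_full` and the sample rows are hypothetical. Note also that a functional dependency is a constraint on every value a table may ever take, so a check against sample rows can refute a dependency but cannot prove it.

```python
from itertools import combinations


def holds(rows, lhs, rhs):
    """Return True if each lhs value is associated with exactly one rhs value."""
    seen = {}
    for row in rows:
        x = tuple(row[a] for a in lhs)
        y = tuple(row[a] for a in rhs)
        # setdefault records the first y seen for x; a later mismatch refutes lhs -> rhs
        if seen.setdefault(x, y) != y:
            return False
    return True


def is_full(rows, lhs, rhs):
    """Return True if lhs -> rhs holds and no proper subset of lhs determines rhs."""
    if not holds(rows, lhs, rhs):
        return False
    for size in range(len(lhs)):  # sizes 0 .. len(lhs) - 1 give the proper subsets
        for subset in combinations(lhs, size):
            if holds(rows, subset, rhs):
                return False
    return True


# Hypothetical sample rows for the "Employees' Skills" table.
rows = [
    {"Employee ID": 426, "Employee Address": "87 Sycamore Grove", "Skill": "Typing"},
    {"Employee ID": 426, "Employee Address": "87 Sycamore Grove", "Skill": "Shorthand"},
    {"Employee ID": 519, "Employee Address": "94 Chestnut Street", "Skill": "Typing"},
]

dependent = holds(rows, ["Employee ID", "Skill"], ["Employee Address"])
fully_dependent = is_full(rows, ["Employee ID", "Skill"], ["Employee Address"])
full_on_id = is_full(rows, ["Employee ID"], ["Employee Address"])
```

On this sample, Employee Address depends on {Employee ID, Skill} but not fully, because {Employee ID} alone already determines it.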

Transitive dependency: A transitive dependency is an indirect functional dependency, one in which X→Z only by virtue of X→Y and Y→Z.

Multivalued dependency: A multivalued dependency is a constraint according to which the presence of certain rows in a table implies the presence of certain other rows.

Join dependency: A table T is subject to a join dependency if T can always be recreated by joining multiple tables each having a subset of the attributes of T.

Superkey: A superkey is a combination of attributes that can be used to uniquely identify a database record. A table might have many superkeys.

Candidate key: A candidate key is a special subset of superkeys that do not have any extraneous information in them: it is a minimal superkey.

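Examples:

Imagine a table whose fields include SSN. This table has many possible superkeys, for instance any set of fields that contains SSN. Of these, only {SSN} is a candidate key, as the others contain information not necessary to uniquely identify records ('SSN' here refers to Social Security Number, which is unique to each person).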

Non-prime attribute: A non-prime attribute is an attribute that does not occur in any candidate key. Employee Address would be a non-prime attribute in the "Employees' Skills" table.

Prime attribute: A prime attribute, conversely, is an attribute that does occur in some candidate key.
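The relationship between superkeys, candidate keys, and prime attributes can be illustrated with a brute-force search over attribute combinations. This is a sketch under stated assumptions: the function names and sample rows are hypothetical, and uniqueness within sample data only suggests a key, since a real key is a constraint on all possible table contents.

```python
from itertools import combinations


def is_superkey(rows, attrs):
    """True if no two rows agree on every attribute in attrs."""
    projected = [tuple(row[a] for a in attrs) for row in rows]
    return len(set(projected)) == len(projected)


def candidate_keys(rows, attributes):
    """Return the minimal superkeys, trying smaller attribute sets first."""
    keys = []
    for size in range(1, len(attributes) + 1):
        for attrs in combinations(attributes, size):
            contains_smaller_key = any(set(k) <= set(attrs) for k in keys)
            if is_superkey(rows, attrs) and not contains_smaller_key:
                keys.append(attrs)
    return keys


attributes = ["Employee ID", "Employee Address", "Skill"]
# Hypothetical sample rows; two employees share an address on purpose.
rows = [
    {"Employee ID": 426, "Employee Address": "87 Sycamore Grove", "Skill": "Typing"},
    {"Employee ID": 426, "Employee Address": "87 Sycamore Grove", "Skill": "Shorthand"},
    {"Employee ID": 519, "Employee Address": "94 Chestnut Street", "Skill": "Typing"},
    {"Employee ID": 602, "Employee Address": "87 Sycamore Grove", "Skill": "Typing"},
]

keys = candidate_keys(rows, attributes)
prime = sorted({a for key in keys for a in key})
non_prime = [a for a in attributes if a not in prime]
```

On this sample the only candidate key is {Employee ID, Skill}, which makes Employee Address the sole non-prime attribute, in line with the definition above.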

Primary key: Most DBMSs require a table to be defined as having a single unique key, rather than a number of possible unique keys. A primary key is a key which the database designer has designated for this purpose.

Normal forms

The normal forms (abbrev. NF) of relational database theory provide criteria for determining a table's degree of vulnerability to logical inconsistencies and anomalies. The higher the normal form applicable to a table, the less vulnerable it is to inconsistencies and anomalies. Each table has a "highest normal form" (HNF): by definition, a table always meets the requirements of its HNF and of all normal forms lower than its HNF; also by definition, a table fails to meet the requirements of any normal form higher than its HNF.

The normal forms are applicable to individual tables; to say that an entire database is in normal form n is to say that all of its tables are in normal form n.

Newcomers to database design sometimes suppose that normalization proceeds in an iterative fashion, i.e. a 1NF design is first normalized to 2NF, then to 3NF, and so on. This is not an accurate description of how normalization typically works. A sensibly designed table is likely to be in 3NF on the first attempt; furthermore, if it is 3NF, it is overwhelmingly likely to have an HNF of 5NF. Achieving the "higher" normal forms (above 3NF) does not usually require an extra expenditure of effort on the part of the designer, because 3NF tables usually need no modification to meet the requirements of these higher normal forms.

The main normal forms are summarized below.

| Normal form | Defined by | Brief definition |
|---|---|---|
| First normal form (1NF) | Two versions: E.F. Codd (1970), C.J. Date (2003) | Table faithfully represents a relation and has no repeating groups |
| Second normal form (2NF) | E.F. Codd (1971) | No non-prime attribute in the table is functionally dependent on a proper subset of a candidate key |
| Third normal form (3NF) | E.F. Codd (1971); see also Carlo Zaniolo's equivalent but differently-expressed definition (1982) | Every non-prime attribute is non-transitively dependent on every candidate key in the table |
| Elementary Key Normal Form (EKNF) | C. Zaniolo (1982) | Every non-trivial functional dependency in the table is either the dependency of an elementary key attribute or a dependency on a superkey |
| Boyce–Codd normal form (BCNF) | Raymond F. Boyce and E.F. Codd (1974) | Every non-trivial functional dependency in the table is a dependency on a superkey |
| Fourth normal form (4NF) | Ronald Fagin (1977) | Every non-trivial multivalued dependency in the table is a dependency on a superkey |
| Fifth normal form (5NF) | Ronald Fagin (1979) | Every non-trivial join dependency in the table is implied by the superkeys of the table |
| Domain/key normal form (DKNF) | Ronald Fagin (1981) | Every constraint on the table is a logical consequence of the table's domain constraints and key constraints |
| Sixth normal form (6NF) | C.J. Date, Hugh Darwen, and Nikos Lorentzos (2002) | Table features no non-trivial join dependencies at all (with reference to generalized join operator) |
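The brief definitions of 2NF, 3NF, and BCNF in the table can be turned into mechanical checks when a table's functional dependencies are known. The following Python sketch is one possible way to do so; the function names are hypothetical, the two example headings and their dependencies are assumptions chosen for illustration, and the brute-force key search is only suitable for small headings.

```python
from itertools import combinations

def closure(attrs, fds):
    """Return every attribute functionally determined by attrs under fds."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return frozenset(result)

def candidate_keys(attributes, fds):
    """Return all minimal attribute sets whose closure is the whole heading."""
    attributes = frozenset(attributes)
    keys = []
    for size in range(len(attributes) + 1):
        for combo in combinations(sorted(attributes), size):
            combo = frozenset(combo)
            if not any(k <= combo for k in keys) and closure(combo, fds) == attributes:
                keys.append(combo)
    return keys

def is_2nf(attributes, fds):
    """No non-prime attribute depends on a proper subset of a candidate key."""
    keys = candidate_keys(attributes, fds)
    non_prime = frozenset(attributes) - frozenset().union(*keys)
    for key in keys:
        for size in range(len(key)):  # sizes 0 .. len(key) - 1: proper subsets
            for subset in combinations(sorted(key), size):
                if closure(subset, fds) & non_prime:
                    return False
    return True

def is_3nf(attributes, fds):
    """Each given dependency has a superkey on the left or only prime attributes on the right."""
    all_attrs = frozenset(attributes)
    prime = frozenset().union(*candidate_keys(attributes, fds))
    return all(
        closure(lhs, fds) == all_attrs or (rhs - lhs) <= prime
        for lhs, rhs in fds
    )

def is_bcnf(attributes, fds):
    """Each given non-trivial dependency has a superkey on the left."""
    all_attrs = frozenset(attributes)
    return all(rhs <= lhs or closure(lhs, fds) == all_attrs for lhs, rhs in fds)

def fd(lhs, rhs):
    return (frozenset(lhs), frozenset(rhs))

# Assumed example 1: Employee -> Employee Address, key {Employee, Skill}.
skills = {"Employee", "Skill", "Employee Address"}
skills_fds = [fd({"Employee"}, {"Employee Address"})]

# Assumed example 2: {Street, City} -> Zip and Zip -> City.
address = {"Street", "City", "Zip"}
address_fds = [fd({"Street", "City"}, {"Zip"}), fd({"Zip"}, {"City"})]

print(is_2nf(skills, skills_fds))
print(is_2nf(address, address_fds), is_3nf(address, address_fds), is_bcnf(address, address_fds))
```

With these assumed dependencies, the first table fails 2NF because Employee Address depends on only part of the key, while the second table satisfies 3NF but not BCNF because Zip determines City without being a superkey. The checks cover functional dependencies only; 4NF and above would need multivalued or join dependencies as additional input.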

Denormalization

Databases intended for online transaction processing (OLTP) are typically more normalized than databases intended for online analytical processing (OLAP). OLTP applications are characterized by a high volume of small transactions such as updating a sales record at a supermarket checkout counter. The expectation is that each transaction will leave the database in a consistent state. By contrast, databases intended for OLAP operations are primarily "read mostly" databases. OLAP applications tend to extract historical data that has accumulated over a long period of time. For such databases, redundant or "denormalized" data may facilitate business intelligence applications. Specifically, dimensional tables in a star schema often contain denormalized data. The denormalized or redundant data must be carefully controlled during extract, transform, load (ETL) processing, and users should not be permitted to see the data until it is in a consistent state. The normalized alternative to the star schema is the snowflake schema. In many cases, the need for denormalization has waned as computers and RDBMS software have become more powerful, but since data volumes have generally increased along with hardware and software performance, OLAP databases often still use denormalized schemas.
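The trade-off between a denormalized star-schema dimension and its snowflake counterpart can be sketched with Python's built-in `sqlite3` module. All table names, column names, and rows below are hypothetical and exist only to illustrate the idea; a real warehouse would also have fact tables and an ETL process.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Star schema: one denormalized dimension; category_name is repeated per product.
    CREATE TABLE dim_product_star (
        product_id    INTEGER PRIMARY KEY,
        product_name  TEXT NOT NULL,
        category_name TEXT NOT NULL
    );
    INSERT INTO dim_product_star VALUES
        (1, 'Espresso',  'Beverages'),
        (2, 'Green tea', 'Beverages'),
        (3, 'Bagel',     'Bakery');

    -- Snowflake schema: the category is factored out into its own table.
    CREATE TABLE dim_category (
        category_id   INTEGER PRIMARY KEY,
        category_name TEXT NOT NULL
    );
    CREATE TABLE dim_product_snow (
        product_id   INTEGER PRIMARY KEY,
        product_name TEXT NOT NULL,
        category_id  INTEGER NOT NULL REFERENCES dim_category(category_id)
    );
    INSERT INTO dim_category VALUES (10, 'Beverages'), (20, 'Bakery');
    INSERT INTO dim_product_snow VALUES
        (1, 'Espresso', 10), (2, 'Green tea', 10), (3, 'Bagel', 20);
""")

# Renaming a category touches every matching row in the denormalized table...
star_rows = conn.execute(
    "UPDATE dim_product_star SET category_name = 'Drinks' "
    "WHERE category_name = 'Beverages'"
).rowcount
# ...but exactly one row in the normalized design.
snow_rows = conn.execute(
    "UPDATE dim_category SET category_name = 'Drinks' "
    "WHERE category_name = 'Beverages'"
).rowcount

# Reading is simpler on the star side: no join is needed.
star = conn.execute(
    "SELECT product_name, category_name FROM dim_product_star ORDER BY product_id"
).fetchall()
snow = conn.execute(
    "SELECT p.product_name, c.category_name "
    "FROM dim_product_snow AS p JOIN dim_category AS c USING (category_id) "
    "ORDER BY p.product_id"
).fetchall()

print(star_rows, snow_rows)
print(star == snow)
```

In this sketch the star-schema update modifies two rows where the snowflake update modifies one, which is the redundancy that ETL processing has to keep under control, while the star-schema query avoids the join that the snowflake query requires.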

Denormalization is also used to improve performance on smaller computers as in computerized cash-registers and mobile devices, since these may use the data for look-up only (e.g. price lookups). Denormalization may also be used when no RDBMS exists for a platform (such as Palm), or no changes are to be made to the data and a swift response is crucial.

Non-first normal form (NF² or N1NF)

In recognition that denormalization can be deliberate and useful, the non-first normal form is a definition of database designs which do not conform to first normal form, by allowing "sets and sets of sets to be attribute domains" (Schek 1982). The languages used to query and manipulate data in the model must be extended accordingly to support such values. One way of looking at this is to consider such structured values as being specialized types of values (domains), with their own domain-specific languages. However, what is usually meant by non-1NF models is the approach in which the relational model and the languages used to query it are extended with a general mechanism for such structure; for instance, the nested relational model supports the use of relations as domain values, by adding two additional operators (nest and unnest) to the relational algebra that can create and flatten nested relations, respectively.

Consider the following table:

| Person | Favourite Colour |
|---|---|
| Bob | blue |
| Bob | red |
| Jane | green |
| Jane | yellow |
| Jane | red |

Assume a person has several favourite colours. Each person's favourite colours then form a set, which the table above models with one row per colour. To transform a 1NF table into an NF² table, a "nest" operator is required, which extends the relational algebra of the higher normal forms. Applying the "nest" operator to the 1NF table yields the following NF² table:

| Person | Favourite Colours |
|---|---|
| Bob | {blue, red} |
| Jane | {green, yellow, red} |

To transform this NF² table back into a 1NF table, an "unnest" operator is required, which extends the relational algebra of the higher normal forms. The unnest, in this case, would make "colours" into its own table.
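As a rough illustration of the two operators, the following Python sketch models the 1NF table as a set of (person, colour) tuples and the NF² table as a set of (person, set of colours) tuples. The function names `nest` and `unnest` and this particular representation are assumptions made for the example, not a standard API.

```python
def nest(relation):
    """Group the colours of each person into a single set-valued attribute."""
    groups = {}
    for person, colour in relation:
        groups.setdefault(person, set()).add(colour)
    return {(person, frozenset(colours)) for person, colours in groups.items()}

def unnest(nested_relation):
    """Flatten each set-valued attribute back into one tuple per colour."""
    return {
        (person, colour)
        for person, colours in nested_relation
        for colour in colours
    }

# The 1NF table from the text.
flat = {
    ("Bob", "blue"),
    ("Bob", "red"),
    ("Jane", "green"),
    ("Jane", "yellow"),
    ("Jane", "red"),
}

nested = nest(flat)
round_trip = unnest(nested)

print(nested)
print(round_trip == flat)
```

Here `nested` contains one tuple for Bob with the set {blue, red} and one for Jane with the set {green, yellow, red}, and unnesting it recovers the original five rows.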

Although "unnest" is the mathematical inverse to "nest", the operator "nest" is not always the mathematical inverse of "unnest". Another constraint required is for the operators to be bijective, which is covered by the Partitioned Normal Form (PNF).
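A small Python sketch can show why "nest" does not always undo "unnest". It uses the same set-of-tuples representation as an assumption, and `is_pnf` is a hypothetical one-level simplification of the Partitioned Normal Form condition (the atomic attribute Person must identify each tuple of the nested table).

```python
def nest(relation):
    """Group the colours of each person into a single set-valued attribute."""
    groups = {}
    for person, colour in relation:
        groups.setdefault(person, set()).add(colour)
    return {(person, frozenset(colours)) for person, colours in groups.items()}

def unnest(nested_relation):
    """Flatten each set-valued attribute back into one tuple per colour."""
    return {(p, c) for p, colours in nested_relation for c in colours}

def is_pnf(nested_relation):
    """One-level PNF check (simplified): no person appears in two tuples."""
    people = [person for person, _ in nested_relation]
    return len(people) == len(set(people))

# A nested table in which Bob's colours are split across two tuples.
not_pnf = {
    ("Bob", frozenset({"blue"})),
    ("Bob", frozenset({"red"})),
}
# A nested table in which every person appears exactly once.
pnf = {
    ("Bob", frozenset({"blue", "red"})),
    ("Jane", frozenset({"green"})),
}

print(is_pnf(not_pnf), nest(unnest(not_pnf)) == not_pnf)
print(is_pnf(pnf), nest(unnest(pnf)) == pnf)
```

Unnesting and re-nesting the first table merges Bob's two tuples into one, so the original is not recovered; the second table, which satisfies the simplified PNF condition, survives the round trip unchanged.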

See also

- Aspect (computer science)

- Business rule

- Canonical form

- Cross-cutting concern

- Optimization (computer science)

- Refactoring

- Universal relation assumption

Further reading

- Litt's Tips: Normalization

- Date, C. J. (2003), An Introduction to Database Systems (8th ed.). Addison-Wesley Longman. ISBN 0-321-19784-4.

- Kent, W. (1983) A Simple Guide to Five Normal Forms in Relational Database Theory, Communications of the ACM, vol. 26, pp. 120–125

- Date, C.J., & Darwen, H., & Pascal, F. Database Debunkings

- H.-J. Schek, P. Pistor Data Structures for an Integrated Data Base Management and Information Retrieval System

External links

- Database Normalization Basics by Mike Chapple (About.com)

- Database Normalization Intro, Part 2

- An Introduction to Database Normalization by Mike Hillyer.

- A tutorial on the first 3 normal forms by Fred Coulson

- DB Normalization Examples

- Description of the database normalization basics by Microsoft

- Database Normalization and Design Techniques by Barry Wise, recommended reading for the Harvard MIS.

- A Simple Guide to Five Normal Forms in Relational Database Theory