3D rendering

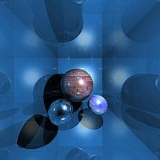

3D rendering is the 3D computer graphics process of automatically converting 3D wire frame models into 2D images with 3D photorealistic effects on a computer.

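Rendering methods

Rendering is the final process of creating the actual 2D image or animation from the prepared scene. This can be compared to taking a photo or filming the scene after the setup is finished in real life. Several different, and often specialized, rendering methods have been developed. These range from the distinctly non-realistic wireframe rendering through polygon-based rendering to more advanced techniques such as scanline rendering, ray tracing, or radiosity. Rendering may take from fractions of a second to days for a single image or frame. In general, different methods are better suited to either photo-realistic rendering or real-time rendering.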

Real-time

Rendering for interactive media, such as games and simulations, is calculated and displayed in real time, at rates of approximately 20 to 120 frames per second. In real-time rendering, the goal is to show as much information as the eye can process in a fraction of a second, that is, in one frame; in a 30 frame-per-second animation, a frame lasts one thirtieth of a second. The primary goal is to achieve as high a degree of photorealism as possible at an acceptable minimum rendering speed (usually 24 frames per second, a rate commonly treated as the minimum needed to create the illusion of movement). In fact, the way the eye perceives the world can be exploited, so the final image presented is not necessarily that of the real world, but one close enough for the human eye to tolerate. Rendering software may simulate such visual effects as lens flares, depth of field, or motion blur. These are attempts to simulate visual phenomena resulting from the optical characteristics of cameras and of the human eye. These effects can lend an element of realism to a scene, even if the effect is merely a simulated artifact of a camera. This is the basic method employed in games, interactive worlds, and VRML. The rapid increase in computer processing power has allowed a progressively higher degree of realism even for real-time rendering, including techniques such as HDR rendering. Real-time rendering is often polygonal and aided by the computer's GPU.
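The frame rates quoted for real-time rendering translate directly into a time budget per frame. The following is a minimal sketch of that arithmetic; the function name `frame_budget_ms` is hypothetical and chosen only for illustration.

```python
def frame_budget_ms(frames_per_second):
    """Return the time available to render one frame, in milliseconds."""
    if frames_per_second <= 0:
        raise ValueError("frames_per_second must be positive")
    return 1000.0 / frames_per_second


# Print the per-frame budget for the frame rates mentioned in the text.
for fps in (20, 24, 30, 120):
    print(f"{fps} fps -> {frame_budget_ms(fps):.2f} ms per frame")
```

At 30 frames per second, for example, everything needed for one image has to be computed in roughly one thirtieth of a second.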

Non real-time

Animations for non-interactive media, such as feature films and video, are rendered much more slowly. Non-real-time rendering makes it possible to leverage limited processing power in order to obtain higher image quality. Rendering times for individual frames may vary from a few seconds to several days for complex scenes. Rendered frames are stored on a hard disk and can then be transferred to other media such as motion picture film or optical disk. These frames are then displayed sequentially at high frame rates, typically 24, 25, or 30 frames per second, to achieve the illusion of movement.

When the goal is photo-realism, techniques such as ray tracing or radiosity are employed. This is the basic method employed in digital media and artistic works. Techniques have been developed for the purpose of simulating other naturally occurring effects, such as the interaction of light with various forms of matter. Examples of such techniques include particle systems (which can simulate rain, smoke, or fire), volumetric sampling (to simulate fog, dust, and other spatial atmospheric effects), caustics (to simulate light focusing by uneven light-refracting surfaces, such as the light ripples seen on the bottom of a swimming pool), and subsurface scattering (to simulate light reflecting inside the volumes of solid objects such as human skin).

The rendering process is computationally expensive, given the complex variety of physical processes being simulated. Computer processing power has increased rapidly over the years, allowing for a progressively higher degree of realistic rendering. Film studios that produce computer-generated animations typically make use of a render farm to generate images in a timely manner. However, falling hardware costs mean that it is entirely possible to create small amounts of 3D animation on a home computer system. The output of the renderer is often used as only one small part of a completed motion-picture scene. Many layers of material may be rendered separately and integrated into the final shot using compositing software.

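Reflection and shading models

Models of reflection/scattering and shading are used to describe the appearance of a surface. Although these issues may seem like problems all on their own, they are studied almost exclusively within the context of rendering. Modern 3D computer graphics rely heavily on a simplified reflection model called the Phong reflection model (not to be confused with Phong shading). In the refraction of light, an important concept is the refractive index. In most 3D programming implementations, the term for this value is "index of refraction," usually abbreviated "IOR." Shading can be broken down into two orthogonal issues, which are often studied independently:

- Reflection/Scattering - How light interacts with the surface at a given point

- Shading - How material properties vary across the surface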
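As an illustration of the Phong reflection model mentioned here, the sketch below evaluates it in its common form (ambient plus diffuse plus specular) for a single light and a scalar intensity. The function name `phong_intensity` and the default coefficients `ka`, `kd`, `ks`, and `shininess` are assumptions made for this example, not values taken from the article.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / length for c in v)


def dot(a, b):
    """Dot product of two 3D vectors."""
    return sum(x * y for x, y in zip(a, b))


def phong_intensity(normal, to_light, to_viewer,
                    ka=0.1, kd=0.6, ks=0.3, shininess=32):
    """Scalar Phong intensity at a surface point for one light.

    ka, kd, ks and shininess are illustrative material constants.
    """
    n = normalize(normal)
    l = normalize(to_light)
    v = normalize(to_viewer)

    n_dot_l = dot(n, l)
    diffuse = max(n_dot_l, 0.0)

    specular = 0.0
    if n_dot_l > 0.0:
        # Mirror the light direction about the normal: r = 2(n.l)n - l
        r = tuple(2.0 * n_dot_l * nc - lc for nc, lc in zip(n, l))
        specular = max(dot(r, v), 0.0) ** shininess

    return ka + kd * diffuse + ks * specular


# Light and viewer both straight above the surface: all terms contribute.
print(phong_intensity((0, 0, 1), (0, 0, 1), (0, 0, 1)))
# Light behind the surface: only the ambient term remains.
print(phong_intensity((0, 0, 1), (0, 0, -1), (0, 0, 1)))
```

Note that this computes reflection at a single point; Phong shading, by contrast, is the interpolation technique that decides which normal is fed into such a calculation at each pixel.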

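Reflection

Reflection or scattering is the relationship between incoming and outgoing illumination at a given point. Descriptions of scattering are usually given in terms of a bidirectional scattering distribution function, or BSDF. Popular reflection rendering techniques in 3D computer graphics include:

- Flat shading: A technique that shades each polygon of an object based on the polygon's "normal" and the position and intensity of a light source.

- Gouraud shading: Invented by H. Gouraud in 1971, a fast and resource-conscious vertex shading technique used to simulate smoothly shaded surfaces.

- Texture mapping: A technique for simulating a large amount of surface detail by mapping images (textures) onto polygons.

- Phong shading: Invented by Bui Tuong Phong, used to simulate specular highlights and smooth shaded surfaces.

- Bump mapping: Invented by Jim Blinn, a normal-perturbation technique used to simulate wrinkled surfaces.

- Cel shading: A technique used to imitate the look of hand-drawn animation.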
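Of the reflection rendering techniques in this section, flat shading is the simplest to sketch: one intensity is computed per polygon from its normal and the light. The example below is a minimal sketch for a single triangle, assuming a simple Lambertian (cosine) falloff and measuring the light direction from the triangle's centroid; the function name `flat_shade` and these choices are illustrative assumptions.

```python
import math


def subtract(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    length = math.sqrt(dot(v, v))
    if length == 0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / length for c in v)


def flat_shade(triangle, light_position, light_intensity=1.0):
    """Return one intensity for the whole triangle (flat shading)."""
    a, b, c = triangle
    # The polygon's normal comes from the cross product of two edges.
    normal = normalize(cross(subtract(b, a), subtract(c, a)))
    # Use the centroid as the representative point on the polygon.
    centroid = tuple((a[i] + b[i] + c[i]) / 3.0 for i in range(3))
    to_light = normalize(subtract(light_position, centroid))
    # Surfaces facing away from the light receive no illumination.
    return light_intensity * max(dot(normal, to_light), 0.0)


triangle = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(flat_shade(triangle, (1 / 3, 1 / 3, 2.0)))   # light directly above
print(flat_shade(triangle, (1 / 3, 1 / 3, -2.0)))  # light below the surface
```

Because every point on the polygon gets the same value, adjacent polygons show visible facets, which is what interpolating techniques such as Gouraud and Phong shading are meant to smooth out.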

Shading

Shading addresses how different types of scattering are distributed across the surface (i.e., which scattering function applies where). Descriptions of this kind are typically expressed with a program called a shader. (Note that there is some confusion since the word "shader" is sometimes used for programs that describe local geometric variation.) A simple example of shading is texture mapping, which uses an image to specify the diffuse color at each point on a surface, giving it more apparent detail.
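Texture mapping as described here amounts to looking up a color in an image from a surface coordinate. The following is a minimal sketch using nearest-neighbor lookup; the `(u, v)` coordinate convention in the range 0 to 1, the list-of-rows texture layout, and the name `sample_texture` are assumptions made for illustration.

```python
def sample_texture(texture, u, v):
    """Return the texel nearest to surface coordinate (u, v).

    texture is a list of rows; u runs left to right and v runs
    top to bottom, both clamped to the range [0, 1].
    """
    height = len(texture)
    width = len(texture[0])
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    # Map the unit square onto texel indices, keeping u = 1 or v = 1 in range.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return texture[y][x]


# A 2x2 texture holding one diffuse color name per texel.
checker = [["red", "green"],
           ["blue", "white"]]

print(sample_texture(checker, 0.1, 0.1))
print(sample_texture(checker, 0.9, 0.9))
```

A renderer would perform a lookup like this for each visible surface point and use the result as the diffuse color in its reflection calculation.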

Transport

Transport describes how illumination in a scene gets from one place to another. Visibility is a major component of light transport.

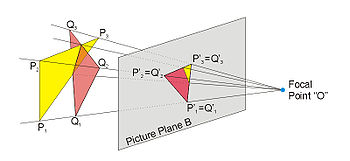

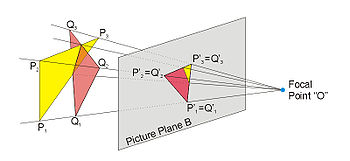

Projection

The shaded three-dimensional objects must be flattened so that the display device, namely a monitor, can display them in only two dimensions; this process is called 3D projection. This is done using projection and, for most applications, perspective projection. The basic idea behind perspective projection is that objects that are further away are made smaller in relation to those that are closer to the eye. Programs produce perspective by multiplying an object's projected size by a dilation constant raised to the power of the negative of the distance from the observer. A dilation constant of one means that there is no perspective. High dilation constants can cause a "fish-eye" effect in which image distortion begins to occur. Orthographic projection is used mainly in CAD or CAM applications where scientific modeling requires precise measurements and preservation of the third dimension.
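The dilation-constant description of perspective can be written out directly. The sketch below follows that wording, scaling a point's x and y coordinates by `dilation ** (-distance)`. The function names and the use of the z coordinate as the distance from the observer are assumptions for this example; many real-time pipelines instead divide by depth, which is a different formulation.

```python
def dilation_scale(distance, dilation=1.1):
    """Scale factor for an object at the given distance from the observer."""
    return dilation ** (-distance)


def project_point(point, dilation=1.1):
    """Project a 3D point (x, y, z) to 2D, treating z as the viewing distance.

    With dilation == 1.0 the scale is always 1, so the result is the
    same as an orthographic projection that simply drops z.
    """
    x, y, z = point
    scale = dilation_scale(z, dilation)
    return (x * scale, y * scale)


# The same offset from the view axis shrinks as distance grows.
print(project_point((4.0, 8.0, 1.0), dilation=2.0))
print(project_point((4.0, 8.0, 2.0), dilation=2.0))
# A dilation constant of one gives no perspective at all.
print(project_point((4.0, 8.0, 2.0), dilation=1.0))
```

Setting the dilation constant to one reproduces the "no perspective" case from the text, which is why the same function can stand in for an orthographic view.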

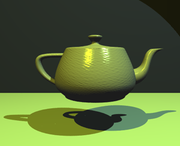

See also

- Ambient occlusion

- Computer vision

- Geometry pipeline

- Geometry processing

- Graphics

- Graphics processing unit (GPU)

- Graphical output devices

- Image processing

- Industrial CT scanning

- Painter's algorithm

- Parallel rendering

- Reflection (computer graphics)

- Rendering (computer graphics)

- SIGGRAPH

External links

- Art is the basis of Industrial Design

- A Critical History of Computer Graphics and Animation

- The ARTS: Episode 5 An in depth interview with Legalize on the subject of the History of Computer Graphics. (Available in MP3 audio format)

- CGSociety The Computer Graphics Society

- How Stuff Works - 3D Graphics

- History of Computer Graphics series of articles

- Open Inventor by VSG 3D Graphics Toolkit for Applications Developers