Nyquist–Shannon sampling theorem

The Nyquist–Shannon sampling theorem, named after Harry Nyquist and Claude Shannon, is a fundamental result in the field of information theory, in particular telecommunications and signal processing. Sampling is the process of converting a signal (for example, a function of continuous time or space) into a numeric sequence (a function of discrete time or space). Shannon's version of the theorem states:

If a function x(t) contains no frequencies higher than B hertz, it is completely determined by giving its ordinates at a series of points spaced 1/(2B) seconds apart.

The theorem is commonly called the Nyquist sampling theorem. Since it was also discovered independently by E. T. Whittaker, by Vladimir Kotelnikov, and by others, it is also known as the Nyquist–Shannon–Kotelnikov, Whittaker–Shannon–Kotelnikov, Whittaker–Nyquist–Kotelnikov–Shannon, or WKS sampling theorem, among other names, as well as the Cardinal Theorem of Interpolation Theory. It is often referred to simply as the sampling theorem.

In essence, the theorem shows that a bandlimited analog signal that has been sampled can be perfectly reconstructed from an infinite sequence of samples if the sampling rate exceeds 2B samples per second, where B is the highest frequency of the original signal. If a signal contains a component at exactly B hertz, then samples spaced at exactly 1/(2B) seconds do not completely determine the signal, Shannon's statement notwithstanding. This sufficient condition can be weakened, as discussed at Sampling of non-baseband signals below.

More recent statements of the theorem are sometimes careful to exclude the equality condition; that is, they require that x(t) contain no frequencies higher than or equal to B. This condition is equivalent to Shannon's except when the function includes a steady sinusoidal component at exactly frequency B.

The theorem assumes an idealization of any real-world situation, as it only applies to signals that are sampled for infinite time; any time-limited x(t) cannot be perfectly bandlimited. Perfect reconstruction is mathematically possible for the idealized model but only an approximation for real-world signals and sampling techniques, albeit in practice often a very good one.

The theorem also leads to a formula for reconstruction of the original signal. The constructive proof of the theorem leads to an understanding of the aliasing that can occur when a sampling system does not satisfy the conditions of the theorem.

The sampling theorem provides a sufficient condition, but not a necessary one, for perfect reconstruction. The field of compressed sensing provides a less demanding sampling condition when the underlying signal is known to be sparse; specifically, it yields a sub-Nyquist sampling criterion.

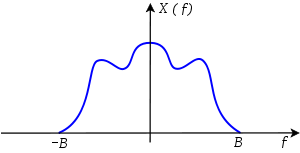

Introduction

A signal or function is bandlimited if it contains no energy at frequencies higher than some bandlimit or bandwidth B. The sampling theorem asserts that, given such a bandlimited signal, the uniformly spaced discrete samples are a complete representation of the signal as long as the sampling rate is larger than twice the bandwidth B. To formalize these concepts, let x(t) represent a continuous-time signal and X(f) be the continuous Fourier transform of that signal:

$$X(f) = \int_{-\infty}^{\infty} x(t)\, e^{-i 2\pi f t}\, dt$$

The signal x(t) is said to be bandlimited to a one-sided baseband bandwidth, B, if

$$X(f) = 0 \quad \text{for all } |f| > B$$

or, equivalently, $\operatorname{supp}(X) \subseteq [-B, B]$. Then the sufficient condition for exact reconstructability from samples at a uniform sampling rate fs (in samples per unit time) is:

$$f_s > 2B$$

The quantity 2B is called the Nyquist rate and is a property of the bandlimited signal, while fs/2 is called the Nyquist frequency and is a property of this sampling system.

The time interval between successive samples is referred to as the sampling interval:

$$T = \frac{1}{f_s}$$

and the samples of x(t) are denoted by:

$$x[n] = x(nT)$$

where n is an integer. The sampling theorem leads to a procedure for reconstructing the original x(t) from the samples and states sufficient conditions for such a reconstruction to be exact.

The sampling process

The theorem describes two processes in signal processing: a sampling process, in which a continuous-time signal is converted to a discrete-time signal, and a reconstruction process, in which the original continuous signal is recovered from the discrete-time signal.

The continuous signal varies over time (or space in a digitized image, or another independent variable in some other application) and the sampling process is performed by measuring the continuous signal's value every T units of time (or space), which is called the sampling interval. Sampling results in a sequence of numbers, called samples, to represent the original signal. Each sample value is associated with the instant in time when it was measured. The reciprocal of the sampling interval (1/T) is the sampling frequency denoted fs, which is measured in samples per unit of time. If T is expressed in seconds, then fs is expressed in hertz.
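The sampling step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `sample` and `satisfies_sampling_condition` and the 3 Hz test cosine are hypothetical choices, not part of the theorem.

```python
import math

def sample(x, fs, n_samples):
    """Return [x(n*T) for n = 0 .. n_samples-1], with sampling interval T = 1/fs."""
    T = 1.0 / fs
    return [x(n * T) for n in range(n_samples)]

def satisfies_sampling_condition(B, fs):
    """True if fs strictly exceeds the Nyquist rate 2B."""
    return fs > 2.0 * B

# Hypothetical test signal: a 3 Hz cosine, so B = 3 Hz and the Nyquist rate is 6 Hz.
B = 3.0
fs = 10.0  # samples per second
x = lambda t: math.cos(2 * math.pi * B * t)

samples = sample(x, fs, 5)                  # x[0] .. x[4], taken every T = 0.1 s
ok = satisfies_sampling_condition(B, fs)    # fs = 10 Hz exceeds 2B = 6 Hz
```

Note that the check uses a strict inequality, so sampling at exactly 2B is reported as not satisfying the condition.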

Reconstruction

Reconstruction of the original signal is an interpolation process that mathematically defines a continuous-time signal x(t) from the discrete samples x[n], including at times in between the sample instants nT.


- The procedure: Each sample value is multiplied by the sinc function scaled so that the zero-crossings of the sinc function occur at the sampling instants and that the sinc function's central point is shifted to the time of that sample, nT. All of these shifted and scaled functions are then added together to recover the original signal. The scaled and time-shifted sinc functions are continuous, making the sum of these also continuous, so the result of this operation is a continuous signal. This procedure is represented by the Whittaker–Shannon interpolation formula.

- The condition: The signal obtained from this reconstruction process can have no frequencies higher than one-half the sampling frequency. According to the theorem, the reconstructed signal will match the original signal provided that the original signal contains no frequencies at or above this limit. This condition is called the Nyquist criterion, or sometimes the Raabe condition.

If the original signal contains a frequency component equal to one-half the sampling rate, the condition is not satisfied. The resulting reconstructed signal may have a component at that frequency, but the amplitude and phase of that component generally will not match the original component.

This reconstruction or interpolation using sinc functions is not the only interpolation scheme. Indeed, it is impossible in practice because it requires summing an infinite number of terms. However, it is the interpolation method that in theory exactly reconstructs any given bandlimited x(t) with any bandlimit B < 1/(2T); any other method that does so is formally equivalent to it.
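As a rough illustration of the procedure, the sketch below evaluates a truncated version of the sinc sum. It assumes the normalized sinc, sin(πu)/(πu), and a hypothetical 1 Hz cosine sampled at 8 Hz; because the sum is finite, the result is only an approximation of x(t), in line with the remark above that the exact sum needs infinitely many terms.

```python
import math

def sinc(u):
    """Normalized sinc: sin(pi*u)/(pi*u), with sinc(0) = 1."""
    return 1.0 if u == 0 else math.sin(math.pi * u) / (math.pi * u)

def reconstruct(samples, T, t, n0=0):
    """Finite sinc sum: samples[k] is taken to be x((n0 + k) * T)."""
    return sum(s * sinc((t - (n0 + k) * T) / T) for k, s in enumerate(samples))

# Hypothetical example: 1 Hz cosine, fs = 8 Hz, samples for n = -2000 .. 2000.
fs, f0 = 8.0, 1.0
T = 1.0 / fs
n0, count = -2000, 4001
samples = [math.cos(2 * math.pi * f0 * (n0 + k) * T) for k in range(count)]

t = 0.3                                    # a time between sample instants
approx = reconstruct(samples, T, t, n0)    # truncated interpolation
exact = math.cos(2 * math.pi * f0 * t)     # value of the original signal
```

At a sample instant t = nT, every term except the n-th vanishes, so the sum returns the sample itself. Between instants, the truncation error shrinks slowly as more samples are included.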

Practical considerations

A few consequences can be drawn from the theorem:

- If the highest frequency B in the original signal is known, the theorem gives the lower bound on the sampling frequency for which perfect reconstruction can be assured. This lower bound to the sampling frequency, 2B, is called the Nyquist rate.

- If instead the sampling frequency is known, the theorem gives us an upper bound for frequency components, B < fs/2, of the signal to allow for perfect reconstruction. This upper bound is the Nyquist frequency, denoted fN.

- Both of these cases imply that the signal to be sampled must be bandlimited; that is, any component of this signal which has a frequency above a certain bound should be zero, or at least sufficiently close to zero to allow us to neglect its influence on the resulting reconstruction. In the first case, the condition of bandlimitation of the sampled signal can be accomplished by assuming a model of the signal which can be analysed in terms of the frequency components it contains; for example, sounds that are made by a speaking human normally contain very small frequency components at or above 10 kHz and it is then sufficient to sample such an audio signal with a sampling frequency of at least 20 kHz. For the second case, we have to assure that the sampled signal is bandlimited such that frequency components at or above half of the sampling frequency can be neglected. This is usually accomplished by means of a suitable low-pass filter; for example, if it is desired to sample speech waveforms at 8 kHz, the signals should first be low-pass filtered to below 4 kHz.

- In practice, neither of the two statements of the sampling theorem described above can be completely satisfied, and neither can the reconstruction formula be precisely implemented. The reconstruction process that involves scaled and delayed sinc functions can be described as ideal. It cannot be realized in practice since it implies that each sample contributes to the reconstructed signal at almost all time points, requiring summing an infinite number of terms. Instead, some type of approximation of the sinc functions, finite in length, has to be used. The error that corresponds to the sinc-function approximation is referred to as interpolation error. Practical digital-to-analog converters produce neither scaled and delayed sinc functions nor ideal impulses (that if ideally low-pass filtered would yield the original signal), but a sequence of scaled and delayed rectangular pulses. This practical piecewise-constant output can be modeled as a zero-order hold filter driven by the sequence of scaled and delayed Dirac impulses referred to in the mathematical basis section below. A shaping filter is sometimes used after the DAC with zero-order hold to make a better overall approximation.

- Furthermore, in practice, a signal can never be perfectly bandlimited, since ideal "brick-wall" filters cannot be realized. All practical filters can only attenuate frequencies outside a certain range, not remove them entirely. In addition to this, a "time-limited" signal can never be bandlimited. This means that even if an ideal reconstruction could be made, the reconstructed signal would not be exactly the original signal. The error that corresponds to the failure of bandlimitation is referred to as aliasing.

- The sampling theorem does not say what happens when the conditions and procedures are not exactly met, but its proof suggests an analytical framework in which the non-ideality can be studied. A designer of a system that deals with sampling and reconstruction processes needs a thorough understanding of the signal to be sampled, in particular its frequency content, the sampling frequency, how the signal is reconstructed in terms of interpolation, and the requirement for the total reconstruction error, including aliasing, sampling, interpolation and other errors. These properties and parameters may need to be carefully tuned in order to obtain a useful system.

Aliasing

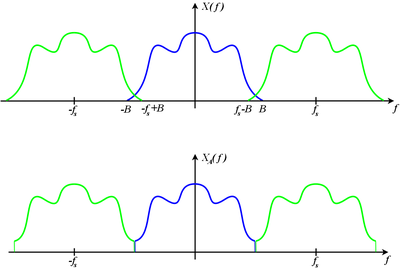

The Poisson summation formula shows that the samples, x[n] = x(nT), of function x(t) are sufficient to create a periodic summation of function X(f). The result is:

$$X_s(f) \ \triangleq \sum_{k=-\infty}^{\infty} X(f - k f_s) = \sum_{n=-\infty}^{\infty} T \cdot x(nT)\, e^{-i 2\pi n T f}$$

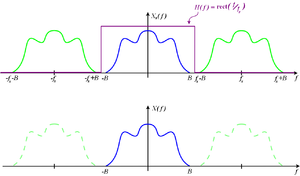

As depicted in Figures 3, 4, and 8, copies of X(f) are shifted by multiples of fs and combined by addition.

If the sampling condition is not satisfied, adjacent copies overlap, and it is not possible in general to discern an unambiguous X(f). Any frequency component above fs/2 is indistinguishable from a lower-frequency component, called an alias, associated with one of the copies. The reconstruction technique described below produces the alias, rather than the original component, in such cases.

To prevent or reduce aliasing, two things can be done:

- Increase the sampling rate, to above twice some or all of the frequencies that are aliasing.

- Introduce an anti-aliasing filter or make the anti-aliasing filter more stringent.

The purpose of the anti-aliasing filter is to restrict the bandwidth of the signal so that it satisfies the condition for proper sampling. Such a restriction works in theory, but is not precisely satisfiable in reality, because realizable filters will always allow some leakage of high frequencies. However, the leakage energy can be made small enough that the aliasing effects are negligible.
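The indistinguishability of a component above fs/2 from its alias can be checked numerically. The sketch below is illustrative only: `alias_frequency` is a hypothetical helper that folds a nonnegative frequency into the range [0, fs/2], and the 7 Hz and 10 Hz values are arbitrary.

```python
import math

def alias_frequency(f, fs):
    """Frequency in [0, fs/2] that a real sinusoid at f >= 0 appears as when sampled at fs."""
    r = f % fs
    return min(r, fs - r)

fs = 10.0                                 # sampling rate, so fs/2 = 5 Hz
f_high = 7.0                              # component above fs/2
f_alias = alias_frequency(f_high, fs)     # folds down to 3 Hz

T = 1.0 / fs
high = [math.cos(2 * math.pi * f_high * n * T) for n in range(20)]
low = [math.cos(2 * math.pi * f_alias * n * T) for n in range(20)]

# The two sample sequences agree up to floating-point rounding.
max_diff = max(abs(a - b) for a, b in zip(high, low))
```

Since the samples of the 7 Hz and 3 Hz cosines coincide, no processing applied after sampling can tell them apart, which is why the filtering has to happen before the sampler.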

Application to multivariable signals and images

In a digital image, the samples are pixels (picture elements) located at the intersections of row and column sample locations. As a result, images require two independent variables, or indices, to specify each pixel uniquely: one for the row, and one for the column.

Color images typically consist of a composite of three separate grayscale images, one to represent each of the three primary colors: red, green, and blue, or RGB for short. Other colorspaces using 3-vectors for colors include HSV, LAB, XYZ, etc. Some colorspaces such as cyan, magenta, yellow, and black (CMYK) may represent color by four dimensions. All of these are treated as vector-valued functions over a two-dimensional sampled domain.

Similar to one-dimensional discrete-time signals, images can also suffer from aliasing if the sampling resolution, or pixel density, is inadequate. For example, a digital photograph of a striped shirt with high spatial frequencies (in other words, the distance between the stripes is small) can cause aliasing of the shirt when it is sampled by the camera's image sensor. The aliasing appears as a moiré pattern. In this case, the remedy is either to sample more finely in the spatial domain or to remove the fine detail beforehand: move closer to the shirt, use a higher-resolution sensor, or optically blur the image before acquiring it with the sensor.

Another example is shown to the left in the brick patterns. The top image shows the effects when the sampling theorem's condition is not satisfied. When software rescales an image (the same process that creates the thumbnail shown in the lower image) it, in effect, runs the image through a low-pass filter first and then downsamples the image to result in a smaller image that does not exhibit the moiré pattern. The top image is what happens when the image is downsampled without low-pass filtering: aliasing results.

The application of the sampling theorem to images should be made with care. For example, the sampling process in any standard image sensor (CCD or CMOS camera) is relatively far from the ideal sampling which would measure the image intensity at a single point. Instead these devices have a relatively large sensor area at each sample point in order to obtain a sufficient amount of light. In other words, any detector has a finite-width point spread function. The analog optical image intensity function which is sampled by the sensor device is not in general bandlimited, and the non-ideal sampling is itself a useful type of low-pass filter, though not always sufficient to remove enough high frequencies to sufficiently reduce aliasing. When the area of the sampling spot (the size of the pixel sensor) is not large enough to provide sufficient anti-aliasing, a separate anti-aliasing filter (optical low-pass filter) is typically included in a camera system to further blur the optical image. Despite images having these problems in relation to the sampling theorem, the theorem can be used to describe the basics of down and up sampling of images.

Downsampling

When a signal is downsampled, the sampling theorem can be invoked via the artifice of resampling a hypothetical continuous-time reconstruction. The Nyquist criterion must still be satisfied with respect to the new lower sampling frequency in order to avoid aliasing. To meet the requirements of the theorem, the signal must usually pass through a low-pass filter of appropriate cutoff frequency as part of the downsampling operation. This low-pass filter, which prevents aliasing, is called an anti-aliasing filter.
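A minimal sketch of filter-then-discard downsampling follows. The design choices here are assumptions made for illustration, not a prescribed method: a Hamming-windowed sinc FIR filter, 101 taps, and a cutoff placed at 80% of the new Nyquist frequency. The function names are hypothetical.

```python
import math

def lowpass_taps(cutoff, num_taps):
    """Hamming-windowed sinc FIR taps; cutoff is in cycles per sample (0 < cutoff < 0.5)."""
    mid = (num_taps - 1) / 2.0
    taps = []
    for k in range(num_taps):
        u = k - mid
        # Ideal low-pass impulse response, truncated to num_taps points.
        h = 2 * cutoff if u == 0 else math.sin(2 * math.pi * cutoff * u) / (math.pi * u)
        # Hamming window to reduce ripple caused by the truncation.
        w = 0.54 - 0.46 * math.cos(2 * math.pi * k / (num_taps - 1))
        taps.append(h * w)
    gain = sum(taps)
    return [h / gain for h in taps]  # normalize to unit gain at zero frequency

def fir_filter(x, taps):
    """Direct-form FIR convolution with zero initial state; output has len(x) samples."""
    return [sum(taps[j] * x[n - j] for j in range(len(taps)) if n - j >= 0)
            for n in range(len(x))]

def downsample(x, M, num_taps=101):
    """Low-pass filter below the new Nyquist frequency 0.5/M, then keep every M-th sample."""
    taps = lowpass_taps(0.8 * 0.5 / M, num_taps)  # 0.8 is an arbitrary safety margin
    return fir_filter(x, taps)[::M]

# Hypothetical input: 0.4 cycles/sample, above the new Nyquist frequency for M = 4.
M = 4
x = [math.cos(2 * math.pi * 0.4 * n) for n in range(400)]
naive = x[::M]               # no filtering: the component aliases at full amplitude
filtered = downsample(x, M)  # filtering first: the component is strongly attenuated
```

Comparing `naive` and `filtered` after the filter's start-up transient shows the trade-off: the filtered output has lost the out-of-band component, whereas the unfiltered output retains it as a spurious lower-frequency tone.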

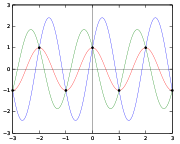

Critical frequency

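To illustrate the necessity of fs > 2B, consider the sinusoid:

$$x(t) = \cos(2\pi B t + \theta) = \cos(2\pi B t)\cos(\theta) - \sin(2\pi B t)\sin(\theta)$$

With fs = 2B or equivalently T = 1/(2B), the samples are given by:

$$x(nT) = \cos(\pi n)\cos(\theta) - \underbrace{\sin(\pi n)}_{0}\sin(\theta) = \cos(\pi n)\cos(\theta)$$

Those samples cannot be distinguished from the samples of:

$$x_A(t) = \cos(2\pi B t)\cos(\theta)$$

But for any θ such that sin(θ) ≠ 0, x(t) and xA(t) have different amplitudes and different phase. This and other ambiguities are the reason for the strict inequality of the sampling theorem's condition.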
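The ambiguity at the critical frequency can be reproduced numerically for a sinusoid of the form cos(2πBt + θ). In this sketch the values B = 1 Hz and θ = π/3 are arbitrary, and `x` and `xA` are illustrative names for the two candidate signals.

```python
import math

B = 1.0              # hypothetical bandlimit in Hz
fs = 2.0 * B         # sampling at exactly the Nyquist rate
T = 1.0 / fs
theta = math.pi / 3  # any phase with sin(theta) != 0

x = lambda t: math.cos(2 * math.pi * B * t + theta)
xA = lambda t: math.cos(theta) * math.cos(2 * math.pi * B * t)

# Samples at t = nT coincide (up to rounding), because sin(pi*n) = 0.
samples_x = [x(n * T) for n in range(8)]
samples_xA = [xA(n * T) for n in range(8)]

# Between sample instants the two signals differ.
mid_x, mid_xA = x(T / 2), xA(T / 2)
```

The sample lists match even though the two continuous signals do not, so the samples alone cannot identify which signal was sampled.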

Mathematical reasoning for the theorem

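When the copies of X(f) that make up the periodic summation Xs(f) do not overlap, the k = 0 term can be recovered from Xs(f) by the product:

$$X(f) = H(f) \cdot X_s(f)$$

where:

$$H(f) = \begin{cases} 1 & |f| < B \\ 0 & |f| > f_s - B \end{cases}$$

H(f) need not be precisely defined in the region [B, fs − B] because Xs(f) is zero in that region. However, the worst case is when B = fs/2, the Nyquist frequency. A function that is sufficient for that and all less severe cases is:

$$H(f) = \mathrm{rect}\left(\frac{f}{f_s}\right) = \begin{cases} 1 & |f| < f_s/2 \\ 0 & |f| > f_s/2 \end{cases}$$

where rect(u) is the rectangular function.

Therefore:

$$\begin{aligned}
X(f) &= \mathrm{rect}\left(\frac{f}{f_s}\right) \cdot X_s(f) \\
&= \mathrm{rect}(Tf) \cdot \sum_{n=-\infty}^{\infty} T \cdot x(nT)\, e^{-i 2\pi n T f} \quad \text{(from the Poisson summation result, above)} \\
&= \sum_{n=-\infty}^{\infty} x(nT) \cdot \underbrace{T \cdot \mathrm{rect}(Tf) \cdot e^{-i 2\pi n T f}}_{\mathcal{F}\left\{\mathrm{sinc}\left(\frac{t - nT}{T}\right)\right\}}
\end{aligned}$$

The original function that was sampled can be recovered by an inverse Fourier transform:

$$x(t) = \sum_{n=-\infty}^{\infty} x(nT) \cdot \mathrm{sinc}\left(\frac{t - nT}{T}\right)$$

where sinc(u) = sin(πu)/(πu) is the normalized sinc function. This is the Whittaker–Shannon interpolation formula. It shows explicitly how the samples, x(nT), can be combined to reconstruct x(t).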

- From Figure 8, it is clear that larger-than-necessary values of fs (smaller values of T), called oversampling, have no effect on the outcome of the reconstruction and have the benefit of leaving room for a transition band in which H(f) is free to take intermediate values. Undersampling, which causes aliasing, is not in general a reversible operation.

- Theoretically, the interpolation formula can be implemented as a low-pass filter, whose impulse response is sinc(t/T) and whose input is

$$\sum_{n=-\infty}^{\infty} x(nT) \cdot \delta(t - nT)$$

which is a Dirac comb function modulated by the signal samples. Practical digital-to-analog converters (DAC) implement an approximation like the zero-order hold. In that case, oversampling can reduce the approximation error.
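The closing remark about the zero-order hold can be illustrated with a small experiment. The sketch below is a simplification: it models the hold alone, with no shaping filter after it, and measures the worst-case error on a hypothetical 1 Hz sine at two sampling rates. The helper names are illustrative.

```python
import math

def zoh(samples, T, t):
    """Zero-order hold: output the most recent sample value, held for one interval T."""
    n = min(int(math.floor(t / T)), len(samples) - 1)
    return samples[n]

def max_zoh_error(f0, fs, duration=1.0, grid=2000):
    """Largest |held value - true value| for sin(2*pi*f0*t) over a fine time grid."""
    T = 1.0 / fs
    n_samples = int(round(duration * fs)) + 1
    samples = [math.sin(2 * math.pi * f0 * n * T) for n in range(n_samples)]
    err = 0.0
    for k in range(grid):
        t = duration * k / grid
        err = max(err, abs(zoh(samples, T, t) - math.sin(2 * math.pi * f0 * t)))
    return err

err_low_rate = max_zoh_error(1.0, 10.0)    # 5x the Nyquist rate of a 1 Hz sine
err_high_rate = max_zoh_error(1.0, 80.0)   # 40x the Nyquist rate
```

Both rates satisfy the sampling condition, yet the held output tracks the sine much more closely at the higher rate, because each held value has less time to drift away from the signal.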

Shannon's original proof

The original proof presented by Shannon is elegant and quite brief, but it offers less intuitive insight into the subtleties of aliasing, both unintentional and intentional. Quoting Shannon's original paper, which uses f for the function, F for the spectrum, and W for the bandwidth limit:

- Let F(ω) be the spectrum of f(t). Then

$$\begin{aligned}
f(t) &= \frac{1}{2\pi} \int_{-\infty}^{\infty} F(\omega)\, e^{i\omega t}\, d\omega \\
&= \frac{1}{2\pi} \int_{-2\pi W}^{2\pi W} F(\omega)\, e^{i\omega t}\, d\omega
\end{aligned}$$

- since F(ω) is assumed to be zero outside the band W. If we let

$$t = \frac{n}{2W}$$

- where n is any positive or negative integer, we obtain

$$f\left(\frac{n}{2W}\right) = \frac{1}{2\pi} \int_{-2\pi W}^{2\pi W} F(\omega)\, e^{i\omega \frac{n}{2W}}\, d\omega$$

- On the left are values of f(t) at the sampling points. The integral on the right will be recognized as essentially the nth coefficient in a Fourier-series expansion of the function F(ω), taking the interval –W to W as a fundamental period. This means that the values of the samples f(n/2W) determine the Fourier coefficients in the series expansion of F(ω). Thus they determine F(ω), since F(ω) is zero for frequencies greater than W, and for lower frequencies F(ω) is determined if its Fourier coefficients are determined. But F(ω) determines the original function f(t) completely, since a function is determined if its spectrum is known. Therefore the original samples determine the function f(t) completely.

Shannon's proof of the theorem is complete at that point, but he goes on to discuss reconstruction via sinc functions, what we now call the Whittaker–Shannon interpolation formula as discussed above. He does not derive or prove the properties of the sinc function, but these would have been familiar to engineers reading his works at the time, since the Fourier pair relationship between rect (the rectangular function) and sinc was well known. Quoting Shannon:

- Let x_n be the nth sample. Then the function f(t) is represented by:

$$f(t) = \sum_{n=-\infty}^{\infty} x_n\, \frac{\sin \pi(2Wt - n)}{\pi(2Wt - n)}$$

As in the other proof, the existence of the Fourier transform of the original signal is assumed, so the proof does not say whether the sampling theorem extends to bandlimited stationary random processes.

Sampling of non-baseband signals

As discussed by Shannon:

- A similar result is true if the band does not start at zero frequency but at some higher value, and can be proved by a linear translation (corresponding physically to single-sideband modulation) of the zero-frequency case. In this case the elementary pulse is obtained from sin(x)/x by single-side-band modulation.

That is, a sufficient no-loss condition for sampling signals that do not have baseband components exists that involves the width of the non-zero frequency interval as opposed to its highest frequency component. See Sampling (signal processing) for more details and examples.

A bandpass condition is that X(f) = 0 for all nonnegative f outside the open band of frequencies

$$\left(\frac{N}{2} f_s,\ \frac{N+1}{2} f_s\right)$$

for some nonnegative integer N, where f_s denotes the sampling rate. This formulation includes the normal baseband condition as the case N = 0.

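The corresponding interpolation function is the impulse response of an ideal brick-wall bandpass filter (as opposed to the ideal brick-wall lowpass filter used above) with cutoffs at the upper and lower edges of the specified band, which is the difference between a pair of lowpass impulse responses:

$$(N+1)\,\operatorname{sinc}\!\left(\frac{(N+1)t}{T}\right) - N\,\operatorname{sinc}\!\left(\frac{Nt}{T}\right),$$

where T is the sampling interval and sinc is the normalized sinc function.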

Other generalizations, for example to signals occupying multiple non-contiguous bands, are possible as well. Even the most generalized form of the sampling theorem does not have a provably true converse. That is, one cannot conclude that information is necessarily lost just because the conditions of the sampling theorem are not satisfied; from an engineering perspective, however, it is generally safe to assume that if the sampling theorem is not satisfied then information will most likely be lost.
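The bandpass condition can be turned into a small calculation. Requiring that a signal occupying frequencies from f_lo to f_hi fit inside the band from N·f_s/2 to (N+1)·f_s/2 gives 2·f_hi/(N+1) ≤ f_s ≤ 2·f_lo/N for N ≥ 1, and f_s ≥ 2·f_hi for N = 0. The Python sketch below lists these ranges; the function name, the parameter names and the 20 Hz to 25 Hz example band are hypothetical, and the treatment of the band edges is a simplifying assumption.

```python
import math


def bandpass_rate_ranges(f_lo, f_hi):
    """List (N, fs_min, fs_max) for each N whose band can contain [f_lo, f_hi].

    Assumption: rates at the exact limits are included, which is only valid
    if the signal has no content precisely at the band edges.
    """
    if not 0 <= f_lo < f_hi:
        raise ValueError("need 0 <= f_lo < f_hi")
    ranges = []
    # Largest N for which 2*f_hi/(N+1) <= 2*f_lo/N still holds.
    n_max = math.floor(f_lo / (f_hi - f_lo))
    for N in range(n_max + 1):
        fs_min = 2.0 * f_hi / (N + 1)
        # N = 0 is the ordinary baseband case, with no upper limit on the rate.
        fs_max = 2.0 * f_lo / N if N > 0 else math.inf
        ranges.append((N, fs_min, fs_max))
    return ranges


# Illustrative band: 20 Hz to 25 Hz, i.e. 5 Hz of occupied bandwidth.
ranges = bandpass_rate_ranges(20.0, 25.0)
lowest_rate = min(fs_min for _, fs_min, _ in ranges)
```

For this example band the lowest admissible rate is 10 Hz, twice the 5 Hz width of the band rather than twice its 25 Hz upper edge. That minimum is reached only because 20 Hz happens to be an integer multiple of the bandwidth; for other band positions the lowest rate is somewhat higher.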

Nonuniform sampling

The sampling theory of Shannon can be generalized to the case of nonuniform samples, that is, samples not taken equally spaced in time. The Shannon sampling theory for nonuniform sampling states that a band-limited signal can be perfectly reconstructed from its samples if the average sampling rate satisfies the Nyquist condition. Therefore, although uniformly spaced samples may result in easier reconstruction algorithms, uniform spacing is not a necessary condition for perfect reconstruction.

The general theory for non-baseband and nonuniform samples was developed in 1967 by Landau. He proved that, to paraphrase roughly, the average sampling rate (uniform or otherwise) must be at least twice the occupied bandwidth of the signal, assuming it is known a priori what portion of the spectrum is occupied.

In the late 1990s, this work was partially extended to cover signals for which the amount of occupied bandwidth is known but the actual occupied portion of the spectrum is unknown. In the 2000s, a complete theory was developed (see the section Beyond Nyquist below) using compressed sensing. In particular, the theory, using signal processing language, is described in a 2009 paper. Its authors show, among other things, that if the frequency locations are unknown, then it is necessary to sample at a rate at least twice that given by the Nyquist criterion; in other words, one must pay at least a factor of 2 for not knowing the location of the spectrum. Note that minimum sampling requirements do not necessarily guarantee stability.

Beyond Nyquist

The Nyquist–Shannon sampling theorem provides a sufficient condition for the sampling and reconstruction of a band-limited signal. When reconstruction is done via the Whittaker–Shannon interpolation formula, the Nyquist criterion is also a necessary condition to avoid aliasing, in the sense that if samples are taken at a slower rate than twice the band limit, then there are some signals that will not be correctly reconstructed. However, if further restrictions are imposed on the signal, then the Nyquist criterion may no longer be a necessary condition.
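The necessity claim can be illustrated with a pair of tones that become indistinguishable once sampled too slowly. In the Python sketch below, a 3 Hz cosine and a 7 Hz cosine are both sampled at 10 Hz. The 7 Hz tone lies above half the sampling rate, and its samples coincide with those of the 3 Hz tone, so no reconstruction method working from the samples alone can tell the two apart. The helper name and the specific frequencies are assumptions chosen for illustration.

```python
import math


def sample_cosine(freq_hz, fs_hz, count):
    """Return `count` samples of cos(2*pi*freq_hz*t) taken at t = n / fs_hz."""
    return [math.cos(2 * math.pi * freq_hz * n / fs_hz) for n in range(count)]


fs = 10.0                            # sampling rate in hertz
low = sample_cosine(3.0, fs, 50)     # 3 Hz is below fs/2
high = sample_cosine(7.0, fs, 50)    # 7 Hz is above fs/2 and aliases onto 3 Hz

# Largest sample-by-sample difference; it is zero apart from rounding error.
max_diff = max(abs(a - b) for a, b in zip(low, high))
```

The coincidence follows from cos(2π·7n/10) = cos(2πn − 2π·3n/10) = cos(2π·3n/10) for every integer n.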

A non-trivial example of exploiting extra assumptions about the signal is given by the recent field of compressed sensing, which allows for full reconstruction with a sub-Nyquist sampling rate. Specifically, this applies to signals that are sparse (or compressible) in some domain. As an example, compressed sensing deals with signals that may have a low overall bandwidth (say, the effective bandwidth EB), but whose frequency locations are unknown, rather than all together in a single band, so that the passband technique does not apply. In other words, the frequency spectrum is sparse. Traditionally, the necessary sampling rate is thus 2B. Using compressed sensing techniques, the signal could be perfectly reconstructed if it is sampled at a rate slightly greater than 2EB. The downside of this approach is that reconstruction is no longer given by a formula, but instead by the solution to a convex optimization program, which requires well-studied but nonlinear methods.

Historical background

The sampling theorem was implied by the work of Harry Nyquist in 1928 ("Certain topics in telegraph transmission theory"), in which he showed that up to 2B independent pulse samples could be sent through a system of bandwidth B; but he did not explicitly consider the problem of sampling and reconstruction of continuous signals. About the same time, Karl Küpfmüller showed a similar result, and discussed the sinc-function impulse response of a band-limiting filter, via its integral, the step response Integralsinus; this bandlimiting and reconstruction filter that is so central to the sampling theorem is sometimes referred to as a Küpfmüller filter (but seldom so in English).

The sampling theorem, essentially a dual of Nyquist's result, was proved by Claude E. Shannon in 1949 ("Communication in the presence of noise").

V. A. Kotelnikov published similar results in 1933 ("On the transmission capacity of the 'ether' and of cables in electrical communications", translation from the Russian), as did the mathematician E. T. Whittaker in 1915 ("Expansions of the Interpolation-Theory", "Theorie der Kardinalfunktionen"), J. M. Whittaker in 1935 ("Interpolatory function theory"), and Gabor in 1946 ("Theory of communication").

Other discoverers

Others who have independently discovered or played roles in the development of the sampling theorem have been discussed in several historical articles, for example by Jerri and by Lüke. For example, Lüke points out that H. Raabe, an assistant to Küpfmüller, proved the theorem in his 1939 Ph.D. dissertation; the term Raabe condition came to be associated with the criterion for unambiguous representation (sampling rate greater than twice the bandwidth).

Meijering mentions several other discoverers and names in a paragraph and pair of footnotes:

As pointed out by Higgins [135], the sampling theorem should really be considered in two parts, as done above: the first stating the fact that a bandlimited function is completely determined by its samples, the second describing how to reconstruct the function using its samples. Both parts of the sampling theorem were given in a somewhat different form by J. M. Whittaker [350, 351, 353] and before him also by Ogura [241, 242]. They were probably not aware of the fact that the first part of the theorem had been stated as early as 1897 by Borel [25].27 As we have seen, Borel also used around that time what became known as the cardinal series. However, he appears not to have made the link [135]. In later years it became known that the sampling theorem had been presented before Shannon to the Russian communication community by Kotel'nikov [173]. In more implicit, verbal form, it had also been described in the German literature by Raabe [257]. Several authors [33, 205] have mentioned that Someya [296] introduced the theorem in the Japanese literature parallel to Shannon. In the English literature, Weston [347] introduced it independently of Shannon around the same time.28

27 Several authors, following Black [16], have claimed that this first part of the sampling theorem was stated even earlier by Cauchy, in a paper [41] published in 1841. However, the paper of Cauchy does not contain such a statement, as has been pointed out by Higgins [135].

28 As a consequence of the discovery of the several independent introductions of the sampling theorem, people started to refer to the theorem by including the names of the aforementioned authors, resulting in such catchphrases as "the Whittaker-Kotel'nikov-Shannon (WKS) sampling theorem" [155] or even "the Whittaker-Kotel'nikov-Raabe-Shannon-Someya sampling theorem" [33]. To avoid confusion, perhaps the best thing to do is to refer to it as the sampling theorem, "rather than trying to find a title that does justice to all claimants" [136].

Why Nyquist?

Exactly how, when, or why Harry Nyquist had his name attached to the sampling theorem remains obscure. The term Nyquist Sampling Theorem (capitalized thus) appeared as early as 1959 in a book from his former employer, Bell Labs, and appeared again in 1963, and then without capitals in 1965. It had been called the Shannon Sampling Theorem as early as 1954, but also just the sampling theorem by several other books in the early 1950s.

In 1958, Blackman and Tukey cited Nyquist's 1928 paper as a reference for the sampling theorem of information theory, even though that paper does not treat sampling and reconstruction of continuous signals as others did. Their glossary of terms includes these entries:

- Sampling theorem (of information theory)

- Nyquist's result that equi-spaced data, with two or more points per cycle of highest frequency, allows reconstruction of band-limited functions. (See Cardinal theorem.)

- Cardinal theorem (of interpolation theory)

- A precise statement of the conditions under which values given at a doubly infinite set of equally spaced points can be interpolated to yield a continuous band-limited function with the aid of the function sin(x − x_i) / (π(x − x_i)).

Exactly what "Nyquist's result" they are referring to remains mysterious.

When Shannon stated and proved the sampling theorem in his 1949 paper, according to Meijering "he referred to the critical sampling interval T = 1/(2W) as the Nyquist interval corresponding to the band W, in recognition of Nyquist’s discovery of the fundamental importance of this interval in connection with telegraphy." This explains Nyquist's name on the critical interval, but not on the theorem.

Similarly, Nyquist's name was attached to Nyquist rate in 1953 by Harold S. Black:

- "If the essential frequency range is limited to B cycles per second, 2B was given by Nyquist as the maximum number of code elements per second that could be unambiguously resolved, assuming the peak interference is less than half a quantum step. This rate is generally referred to as signaling at the Nyquist rate and 1/(2B) has been termed a Nyquist interval." (bold added for emphasis; italics as in the original)

According to the OED, this may be the origin of the term Nyquist rate. In Black's usage, it is not a sampling rate, but a signaling rate.

See also

- Hartley's law

- Reconstruction from zero crossings

- Zero-order hold

- The Cheung–Marks theorem specifies conditions where restoration of a signal by the sampling theorem can become ill-posed.

- Balian–Low theorem, a similar theoretical lower-bound on sampling rates, but which applies to time–frequency transforms.

External links

- Learning by Simulations Interactive simulation of the effects of inadequate sampling

- Undersampling and an application of it

- Sampling Theory For Digital Audio

- Journal devoted to Sampling Theory

- "The Origins of the Sampling Theorem" by Hans Dieter Lüke published in "IEEE Communications Magazine" April 1999