History of entropy


The concept of entropy

developed in response to the observation that a certain amount of functional energy released from combustion reactions is always lost to dissipation or friction and is thus not transformed into useful work

. Early heat-powered engines such as Thomas Savery

's (1698), the Newcomen engine (1712) and the Cugnot steam tricycle

(1769) were inefficient, converting less than two percent of the input energy into useful work output

; a great deal of useful energy was dissipated or lost into what seemed like a state of immeasurable randomness. Over the next two centuries, physicists investigated this puzzle of lost energy; the result was the concept of entropy

.

In the early 1850s, Rudolf Clausius

set forth the concept of the thermodynamic system

and posited the argument that in any irreversible process a small amount of heat energy δQ is incrementally dissipated across the system boundary. Clausius continued to develop his ideas of lost energy, and coined the term entropy.

Since the mid-20th century the concept of entropy has found application in the analogous field of data loss in information transmission systems.

published a work entitled Fundamental Principles of Equilibrium and Movement. This work includes a discussion on the efficiency of fundamental machines, i.e. pulleys and inclined planes. Lazare Carnot saw through all the details of the mechanisms to develop a general discussion on the conservation of mechanical energy. Over the next three decades, Lazare Carnot’s theorem was taken as a statement that in any machine the accelerations and shocks of the moving parts all represent losses of moment of activity, i.e. the useful work

done. From this Lazare drew the inference that perpetual motion was impossible. This loss of moment of activity was the first-ever rudimentary statement of the second law of thermodynamics

and the concept of 'transformation-energy' or entropy, i.e. energy lost to dissipation and friction.

Lazare Carnot died in exile in 1823. During the following year Lazare’s son Sadi Carnot

, having graduated from the École Polytechnique

training school for engineers, but now living on half-pay with his brother Hippolyte in a small apartment in Paris, wrote the Reflections on the Motive Power of Fire. In this paper, Sadi visualized an ideal engine in which the heat of caloric converted into work could be reinstated by reversing the motion of the cycle, a concept subsequently known as thermodynamic reversibility. Building on his father's work, Sadi postulated the concept that “some caloric is always lost”, not being converted to mechanical work. Hence any real heat engine could not realize the Carnot cycle's reversibility and was condemned to be less efficient. This lost caloric was a precursory form of entropy loss as we now know it. Though formulated in terms of caloric, rather than entropy, this was an early insight into the second law of thermodynamics

.

In his 1854 memoir, Clausius first develops the concepts of interior work, i.e. that "which the atoms of the body exert upon each other", and exterior work, i.e. that "which arise from foreign influences [to] which the body may be exposed", which may act on a working body of fluid or gas, typically functioning to work a piston. He then discusses the three categories into which heat Q may be divided:

In his 1854 memoir, Clausius first develops the concepts of interior work, i.e. that "which the atoms of the body exert upon each other", and exterior work, i.e. that "which arise from foreign influences [to] which the body may be exposed", which may act on a working body of fluid or gas, typically functioning to work a piston. He then discusses the three categories into which heat Q may be divided:

Building on this logic, and following a mathematical presentation of the first fundamental theorem, Clausius then presented the first-ever mathematical formulation of entropy, although at this point in the development of his theories he called it "equivalence-value", perhaps referring to the concept of the mechanical equivalent of heat

which was developing at the time rather than entropy, a term which was to come into use later. He stated:

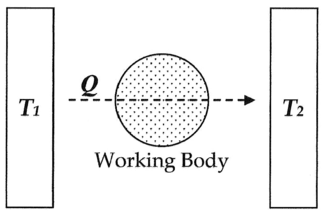

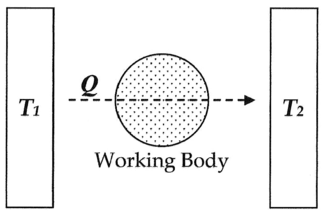

In modern terminology, we think of this equivalence-value as "entropy", symbolized by S. Thus, using the above description, we can calculate the entropy change ΔS for the passage of the quantity of heat

Q from the temperature

T1, through the "working body" of fluid (see heat engine

), which was typically a body of steam, to the temperature T2 as shown below:

If we make the assignment:

If we make the assignment:

Then, the entropy change or "equivalence-value" for this transformation is:

which equals:

and by factoring out Q, we have the following form, as was derived by Clausius:

where N is the "equivalence-value" of all uncompensated transformations involved in a cyclical process. This equivalence-value was a precursory formulation of entropy.

, as such:

Quantitatively, Clausius states the mathematical expression for this theorem is as follows. Let δQ be an element of the heat given up by the body to any reservoir of heat during its own changes, heat which it may absorb from a reservoir being here reckoned as negative, and T the absolute temperature of the body at the moment of giving up this heat, then the equation:

must be true for every reversible cyclical process, and the relation:

must hold good for every cyclical process which is in any way possible. This was an early formulation of the second law and one of the original forms of the concept of entropy.

Although Clausius did not specify why he chose the symbol "S" to represent entropy, it is arguable that Clausius chose "S" in honor of Sadi Carnot

, to whose 1824 article Clausius devoted over 15 years of work and research. On the first page of his original 1850 article "On the Motive Power of Heat, and on the Laws which can be Deduced from it for the Theory of Heat", Clausius calls Carnot the most important of the researchers in the theory of heat

.

and others, proposed that the measurement of "available energy" ΔG in a thermodynamic system could be mathematically accounted for by subtracting the "energy loss" TΔS from total energy change of the system ΔH. These concepts were further developed by James Clerk Maxwell

[1871] and Max Planck

[1903].

formulated the alternative definition of entropy S defined as:

where

Boltzmann saw entropy as a measure of statistical "mixedupness" or disorder. This concept was soon refined by J. Willard Gibbs, and is now regarded as one of the cornerstones of the theory of statistical mechanics

.

Laboratories electrical engineer Claude Shannon

set out to mathematically quantify the statistical nature of “lost information” in phone-line signals. To do this, Shannon developed the very general concept of information entropy

, a fundamental cornerstone of information theory

. Although the story varies, initially it seems that Shannon was not particularly aware of the close similarity between his new quantity and earlier work in thermodynamics. In 1949, however, when Shannon had been working on his equations for some time, he happened to visit the mathematician John von Neumann

. During their discussions, regarding what Shannon should call the “measure of uncertainty” or attenuation in phone-line signals with reference to his new information theory, according to one source:

According to another source, when von Neumann asked him how he was getting on with his information theory, Shannon replied:

In 1948 Shannon published his famous paper A Mathematical Theory of Communication, in which he devoted a section to what he calls Choice, Uncertainty, and Entropy. In this section, Shannon introduces an H function of the following form:

where K is a positive constant. Shannon then states that “any quantity of this form, where K merely amounts to a choice of a unit of measurement, plays a central role in information theory as measures of information, choice, and uncertainty.” Then, as an example of how this expression applies in a number of different fields, he references R.C. Tolman’s 1938 Principles of Statistical Mechanics, stating that “the form of H will be recognized as that of entropy as defined in certain formulations of statistical mechanics where pi is the probability of a system being in cell i of its phase space… H is then, for example, the H in Boltzmann’s famous H theorem

.” As such, over the last fifty years, ever since this statement was made, people have been overlapping the two concepts or even stating that they are exactly the same.

Shannon's information entropy is a much more general concept than statistical thermodynamic entropy. Information entropy is present whenever there are unknown quantities that can be described only by a probability distribution. In a series of papers by E. T. Jaynes starting in 1957, the statistical thermodynamic entropy can be seen as just a particular application of Shannon's information entropy to the probabilities of particular microstates of a system occurring in order to produce a particular macrostate.

The concept of entropy developed in response to the observation that a certain amount of functional energy released from combustion reactions is always lost to dissipation or friction and is thus not transformed into useful work. Early heat-powered engines such as Thomas Savery's engine (1698), the Newcomen engine (1712) and the Cugnot steam tricycle (1769) were inefficient, converting less than two percent of the input energy into useful work output; a great deal of useful energy was dissipated or lost into what seemed like a state of immeasurable randomness. Over the next two centuries, physicists investigated this puzzle of lost energy; the result was the concept of entropy.

In the early 1850s, Rudolf Clausius set forth the concept of the thermodynamic system and argued that in any irreversible process a small amount of heat energy δQ is incrementally dissipated across the system boundary. Clausius continued to develop his ideas of lost energy, and coined the term entropy.

Since the mid-20th century the concept of entropy has found application in the analogous field of data loss in information transmission systems.

Classical thermodynamic views

In 1803, mathematician Lazare Carnot published a work entitled Fundamental Principles of Equilibrium and Movement. This work includes a discussion on the efficiency of fundamental machines, i.e. pulleys and inclined planes. Lazare Carnot looked past the details of the individual mechanisms to develop a general discussion on the conservation of mechanical energy. Over the next three decades, Lazare Carnot's theorem was taken as a statement that in any machine the accelerations and shocks of the moving parts all represent losses of moment of activity, i.e. of the useful work done. From this Lazare drew the inference that perpetual motion was impossible. This loss of moment of activity was the first-ever rudimentary statement of the second law of thermodynamics and of the concept of 'transformation-energy' or entropy, i.e. energy lost to dissipation and friction.

Lazare Carnot died in exile in 1823. During the following year Lazare's son Sadi Carnot, having graduated from the École Polytechnique training school for engineers but now living on half-pay with his brother Hippolyte in a small apartment in Paris, wrote the Reflections on the Motive Power of Fire. In this paper, Sadi visualized an ideal engine in which any caloric converted into work could be restored by reversing the motion of the cycle, a concept subsequently known as thermodynamic reversibility. Building on his father's work, Sadi postulated that "some caloric is always lost", not being converted to mechanical work. Hence any real heat engine could not realize the Carnot cycle's reversibility and was condemned to be less efficient. This lost caloric was a precursory form of entropy loss as we now know it. Though formulated in terms of caloric rather than entropy, this was an early insight into the second law of thermodynamics.

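1854 definition

In his 1854 memoir, Clausius first develops the concepts of interior work, i.e. that "which the atoms of the body exert upon each other", and exterior work, i.e. that "which arise from foreign influences [to] which the body may be exposed", which may act on a working body of fluid or gas, typically functioning to work a piston. He then discusses the three categories into which heat Q may be divided: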

- Heat employed in increasing the heat actually existing in the body.

- Heat employed in producing the interior work.

- Heat employed in producing the exterior work.

Building on this logic, and following a mathematical presentation of the first fundamental theorem, Clausius then presented the first-ever mathematical formulation of entropy, although at this point in the development of his theories he called it "equivalence-value" rather than entropy, a term which was to come into use later; the name perhaps refers to the concept of the mechanical equivalent of heat, which was developing at the time. He stated:

The second fundamental theorem in the mechanical theory of heat may thus be enunciated:

If two transformations which, without necessitating any other permanent change, can mutually replace one another, be called equivalent, then the generation of the quantity of heat Q from work at the temperature T has the equivalence-value:

$$\frac{Q}{T}$$

and the passage of the quantity of heat Q from the temperature T1 to the temperature T2 has the equivalence-value:

$$Q\left(\frac{1}{T_2} - \frac{1}{T_1}\right)$$

wherein T is a function of the temperature, independent of the nature of the process by which the transformation is effected.

In modern terminology, we think of this equivalence-value as "entropy", symbolized by S. Thus, using the above description, we can calculate the entropy change ΔS for the passage of the quantity of heat Q from the temperature T1, through the "working body" of fluid (see heat engine), which was typically a body of steam, to the temperature T2 as follows.

If we make the assignment:

$$S = \frac{Q}{T}$$

then the entropy change or "equivalence-value" for this transformation is:

$$\Delta S = S_{\text{final}} - S_{\text{initial}}$$

which equals:

$$\Delta S = \frac{Q}{T_2} - \frac{Q}{T_1}$$

and by factoring out Q, we have the following form, as was derived by Clausius:

$$\Delta S = Q\left(\frac{1}{T_2} - \frac{1}{T_1}\right)$$
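As a rough numerical illustration, Clausius's equivalence-value ΔS = Q(1/T2 − 1/T1) for heat passing between two temperatures can be evaluated directly. This is a minimal sketch assuming modern SI units (joules and kelvins, with T taken as absolute temperature), which Clausius himself did not use; the function name `equivalence_value` is hypothetical.

```python
def equivalence_value(q: float, t1: float, t2: float) -> float:
    """Equivalence-value (entropy change) for the passage of heat q
    from absolute temperature t1 to absolute temperature t2.

    Hypothetical helper: assumes q in joules and t1, t2 in kelvins,
    and returns Q * (1/T2 - 1/T1) in J/K.
    """
    if t1 <= 0 or t2 <= 0:
        raise ValueError("absolute temperatures must be positive")
    return q * (1.0 / t2 - 1.0 / t1)


if __name__ == "__main__":
    # 1000 J of heat passing from a body at 500 K to a body at 250 K
    print(equivalence_value(1000.0, 500.0, 250.0))
```

For heat flowing from hotter to colder (T1 > T2) the value is positive, about 2 J/K in the example; it is zero when T1 = T2 and negative for the reverse passage from colder to hotter.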

1856 definition

In 1856, Clausius stated what he called the "second fundamental theorem in the mechanical theory of heat" in the following form:

$$\oint \frac{\delta Q}{T} = -N$$

where N is the "equivalence-value" of all uncompensated transformations involved in a cyclical process. This equivalence-value was a precursory formulation of entropy.

1862 definition

In 1862, Clausius stated what he calls the “theorem respecting the equivalence-values of the transformations”, or what is now known as the second law of thermodynamics, as such:

- The algebraic sum of all the transformations occurring in a cyclical process can only be positive, or, as an extreme case, equal to nothing.

Quantitatively, Clausius gives the mathematical expression for this theorem as follows. Let δQ be an element of the heat given up by the body to any reservoir of heat during its own changes, heat which it may absorb from a reservoir being here reckoned as negative, and T the absolute temperature of the body at the moment of giving up this heat; then the equation:

$$\oint \frac{\delta Q}{T} = 0$$

must be true for every reversible cyclical process, and the relation:

$$\oint \frac{\delta Q}{T} \ge 0$$

must hold good for every cyclical process which is in any way possible. This was an early formulation of the second law and one of the original forms of the concept of entropy.
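Under the 1862 sign convention (heat given up by the body counted as positive, heat absorbed as negative), the cyclic sum of δQ/T can be checked for a simple engine. This sketch assumes a hypothetical engine that absorbs heat at a single hot temperature and gives up the remainder at a single cold one, and it uses the modern result that a reversible engine between two such reservoirs has efficiency 1 − T_cold/T_hot; the helper names are illustrative only.

```python
def clausius_sum(exchanges):
    """Cyclic sum of dQ/T under Clausius's 1862 sign convention:
    heat given up by the working body counts as positive, heat it
    absorbs counts as negative. `exchanges` holds (heat, temperature)
    pairs, one per reservoir the body exchanges heat with."""
    return sum(q / t for q, t in exchanges)


def two_reservoir_cycle(q_hot, t_hot, t_cold, efficiency):
    """Hypothetical engine: absorbs q_hot at t_hot, turns the fraction
    `efficiency` of it into work, and gives up the rest at t_cold."""
    q_cold = q_hot * (1.0 - efficiency)
    # absorbed heat is reckoned negative, heat given up is positive
    return [(-q_hot, t_hot), (q_cold, t_cold)]


if __name__ == "__main__":
    t_hot, t_cold = 500.0, 300.0
    reversible = 1.0 - t_cold / t_hot  # modern result for a reversible engine
    for eff in (reversible, 0.2, 0.5):
        total = clausius_sum(two_reservoir_cycle(1000.0, t_hot, t_cold, eff))
        print(f"efficiency {eff:.2f}: sum of dQ/T = {total:+.4f} J/K")
```

For the reversible engine the sum is zero up to rounding, matching the equation; the less efficient engine gives a positive sum, matching the relation; an engine claiming more than the reversible efficiency (0.5 here) gives a negative sum, which the relation rules out.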

1865 definition

In 1865, Clausius gave irreversible heat loss, or what he had previously been calling "equivalence-value", a name: entropy.

Although Clausius did not specify why he chose the symbol "S" to represent entropy, it is arguable that he chose "S" in honor of Sadi Carnot, to whose 1824 article Clausius devoted over 15 years of work and research. On the first page of his original 1850 article "On the Motive Power of Heat, and on the Laws which can be Deduced from it for the Theory of Heat", Clausius calls Carnot the most important of the researchers in the theory of heat.

Later developments

In 1876, physicist J. Willard Gibbs, building on the work of Clausius, Hermann von Helmholtz and others, proposed that the measurement of "available energy" ΔG in a thermodynamic system could be mathematically accounted for by subtracting the "energy loss" TΔS from the total energy change of the system ΔH. These concepts were further developed by James Clerk Maxwell [1871] and Max Planck [1903].
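Gibbs's bookkeeping can be written as ΔG = ΔH − TΔS. The numbers in this minimal sketch are made up purely for illustration (they are not measurements of any real process), consistent units are assumed (kJ, K, kJ/K), and the function name is hypothetical.

```python
def available_energy(delta_h: float, temperature: float, delta_s: float) -> float:
    """Gibbs's "available energy": Delta G = Delta H - T * Delta S.

    Assumes consistent units, e.g. delta_h in kJ, temperature in K and
    delta_s in kJ/K, so that the result is in kJ.
    """
    energy_loss = temperature * delta_s  # the "energy loss" term T * Delta S
    return delta_h - energy_loss


if __name__ == "__main__":
    # Hypothetical process: Delta H = -100 kJ, Delta S = -0.2 kJ/K at 298 K
    print(available_energy(-100.0, 298.0, -0.2))
```

In this example TΔS is about −59.6 kJ, so only about −40.4 kJ of the −100 kJ total energy change counts as available energy.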

Statistical thermodynamic views

In 1877, Ludwig Boltzmann formulated the alternative definition of entropy S defined as:

$$S = k_B \ln \Omega$$

where

- kB is Boltzmann's constant and

- Ω is the number of microstates consistent with the given macrostate.

Boltzmann saw entropy as a measure of statistical "mixedupness" or disorder. This concept was soon refined by J. Willard Gibbs, and is now regarded as one of the cornerstones of the theory of statistical mechanics.
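A minimal sketch of Boltzmann's formula S = k_B ln Ω, using the exact SI value k_B = 1.380649 × 10⁻²³ J/K. The toy macrostate (100 two-state particles with exactly half in the "up" state) is a hypothetical example, not one from this article. It also shows why the logarithm is convenient: the microstate counts of independent systems multiply, so their entropies add.

```python
import math

# Boltzmann constant in J/K (exact value in the 2019 SI)
K_B = 1.380649e-23


def boltzmann_entropy(omega: int) -> float:
    """S = k_B ln(Omega) for a macrostate with `omega` microstates."""
    if omega < 1:
        raise ValueError("a macrostate needs at least one microstate")
    return K_B * math.log(omega)


if __name__ == "__main__":
    # Hypothetical toy system: 100 two-state particles, 50 of them "up".
    omega = math.comb(100, 50)  # number of microstates for this macrostate
    print(boltzmann_entropy(omega))
    # Two independent copies: microstate counts multiply, entropies add.
    s_pair = boltzmann_entropy(omega * omega)
    print(math.isclose(s_pair, 2 * boltzmann_entropy(omega)))
```

A macrostate with only one microstate has zero entropy, and `math.log` accepts Python's arbitrary-size integers, so very large microstate counts can be passed in directly.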

Information theory

An analog to thermodynamic entropy is information entropy. In 1948, while working at Bell Telephone Laboratories, electrical engineer Claude Shannon set out to mathematically quantify the statistical nature of “lost information” in phone-line signals. To do this, Shannon developed the very general concept of information entropy, a fundamental cornerstone of information theory. Although the story varies, initially it seems that Shannon was not particularly aware of the close similarity between his new quantity and earlier work in thermodynamics. In 1949, however, when Shannon had been working on his equations for some time, he happened to visit the mathematician John von Neumann. During their discussions, regarding what Shannon should call the “measure of uncertainty” or attenuation in phone-line signals with reference to his new information theory, according to one source:

According to another source, when von Neumann asked him how he was getting on with his information theory, Shannon replied:

In 1948 Shannon published his famous paper A Mathematical Theory of Communication, in which he devoted a section to what he calls Choice, Uncertainty, and Entropy. In this section, Shannon introduces an H function of the following form:

$$H = -K \sum_{i=1}^{n} p_i \log p_i$$

where K is a positive constant. Shannon then states that “any quantity of this form, where K merely amounts to a choice of a unit of measurement, plays a central role in information theory as measures of information, choice, and uncertainty.” Then, as an example of how this expression applies in a number of different fields, he references R.C. Tolman’s 1938 Principles of Statistical Mechanics, stating that “the form of H will be recognized as that of entropy as defined in certain formulations of statistical mechanics where p_i is the probability of a system being in cell i of its phase space… H is then, for example, the H in Boltzmann’s famous H theorem.” Ever since this statement was made, people have been conflating the two concepts or even stating that they are exactly the same.
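Shannon's H can be computed directly. In this minimal sketch the choice of logarithm base stands in for the constant K (base 2 gives bits, base e gives nats), outcomes with p_i = 0 are assumed to contribute nothing, and the function name is illustrative rather than taken from Shannon's paper.

```python
import math


def shannon_entropy(probs, base=2.0):
    """H = -K * sum(p_i log p_i), written as sum(p_i log(1/p_i)).

    `probs` is a list of probabilities summing to 1. The logarithm base
    plays the role of Shannon's constant K: base 2 gives bits, base e
    gives nats. Terms with p_i = 0 are skipped (0 log 0 taken as 0).
    """
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * math.log(1.0 / p, base) for p in probs if p > 0)


if __name__ == "__main__":
    print(shannon_entropy([0.5, 0.5]))          # fair coin
    print(shannon_entropy([0.5, 0.25, 0.25]))   # three unequal outcomes
    print(shannon_entropy([1.0]))               # a certain outcome
    print(shannon_entropy([0.5, 0.5], base=math.e))  # fair coin, in nats
```

A fair coin carries one bit, the three-outcome case 1.5 bits, and a certain outcome none. Switching the base only rescales H by a constant factor, which is the sense in which K "merely amounts to a choice of a unit of measurement".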

Shannon's information entropy is a much more general concept than statistical thermodynamic entropy. Information entropy is present whenever there are unknown quantities that can be described only by a probability distribution. In a series of papers starting in 1957, E. T. Jaynes argued that the statistical thermodynamic entropy can be seen as just a particular application of Shannon's information entropy to the probabilities of the particular microstates of a system that can produce a given macrostate.
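The connection Jaynes drew can be checked numerically: applying Shannon's H with K set to Boltzmann's constant and natural logarithms to a probability distribution over microstates, and making all Ω microstates equally likely, recovers Boltzmann's S = k_B ln Ω. The count of 1000 microstates below is hypothetical, and calling the function `gibbs_entropy` follows common modern usage rather than anything stated in this article.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def gibbs_entropy(probs):
    """S = -k_B * sum(p_i ln p_i): Shannon's H with K = k_B and natural
    logarithms, applied to the probabilities of a system's microstates."""
    return K_B * sum(p * math.log(1.0 / p) for p in probs if p > 0)


if __name__ == "__main__":
    omega = 1000  # hypothetical number of microstates
    uniform = [1.0 / omega] * omega
    # With every microstate equally likely, S reduces to k_B ln(Omega).
    print(math.isclose(gibbs_entropy(uniform), K_B * math.log(omega)))
    # A non-uniform distribution over the same microstates has lower entropy.
    skewed = [0.5] + [0.5 / (omega - 1)] * (omega - 1)
    print(gibbs_entropy(skewed) < gibbs_entropy(uniform))
```

The uniform case is the one in which the two definitions coincide; any other distribution over the same microstates yields a smaller value.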