Conditional independence


In probability theory, two events R and B are conditionally independent given a third event Y precisely if the occurrence or non-occurrence of R and the occurrence or non-occurrence of B are independent events in their conditional probability distribution given Y. In other words, R and B are conditionally independent given Y if and only if, given knowledge of whether Y occurs, knowledge of whether R occurs provides no information on the likelihood of B occurring, and knowledge of whether B occurs provides no information on the likelihood of R occurring.

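In the standard notation of probability theory, R and B are conditionally independent given Y if and only if

$$\Pr(R \cap B \mid Y) = \Pr(R \mid Y)\,\Pr(B \mid Y),$$

or equivalently (when $\Pr(B \cap Y) > 0$),

$$\Pr(R \mid B \cap Y) = \Pr(R \mid Y).$$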
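The product form can be checked directly on a small finite sample space. The sketch below assumes a single roll of a fair six-sided die with all outcomes equally likely; the events R, B, Y and the helper names `prob` and `cond_independent` are illustrative choices, not part of the definition. It also shows that two events can be conditionally independent given Y while being dependent unconditionally.

```python
from fractions import Fraction

# Illustrative assumption: one roll of a fair six-sided die (uniform measure).
OMEGA = frozenset({1, 2, 3, 4, 5, 6})


def prob(event, given=OMEGA):
    """Pr(event | given) under the uniform measure; `given` must be non-empty."""
    return Fraction(len(event & given), len(given))


def cond_independent(r, b, y):
    """Product form: Pr(R ∩ B | Y) == Pr(R | Y) * Pr(B | Y)."""
    return prob(r & b, y) == prob(r, y) * prob(b, y)


R = frozenset({1, 2})
B = frozenset({1, 3})
Y = frozenset({1, 2, 3, 4})

print(cond_independent(R, B, Y))      # True: 1/4 == 1/2 * 1/2
print(cond_independent(R, B, OMEGA))  # False: 1/6 != 1/3 * 1/3
print(prob(R, B & Y) == prob(R, Y))   # True: equivalent form Pr(R | B ∩ Y) == Pr(R | Y)
```

Exact `Fraction` arithmetic is used so that equality tests are not disturbed by floating-point rounding.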

Two random variables X and Y are conditionally independent given a third random variable Z if and only if they are independent in their conditional probability distribution given Z. That is, X and Y are conditionally independent given Z if and only if, given any value of Z, the probability distribution of X is the same for all values of Y and the probability distribution of Y is the same for all values of X.
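For discrete random variables the definition can be tested on a joint probability table. The sketch below assumes the joint pmf is a dictionary mapping (x, y, z) triples to exact probabilities; the function names and the numerical tables are hypothetical. It uses the division-free criterion p(x, y, z) p(z) = p(x, z) p(y, z), which is equivalent to p(x, y | z) = p(x | z) p(y | z) for every z with p(z) > 0 and holds trivially when p(z) = 0.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product


def marginal(joint, keep):
    """Marginal pmf of the coordinates listed in `keep`; missing keys read as 0."""
    out = defaultdict(Fraction)
    for outcome, p in joint.items():
        out[tuple(outcome[i] for i in keep)] += p
    return out


def is_cond_independent(joint):
    """Check X ⊥ Y | Z for a pmf given as {(x, y, z): probability}.

    Uses p(x, y, z) p(z) == p(x, z) p(y, z), which matches
    p(x, y | z) == p(x | z) p(y | z) whenever p(z) > 0.
    """
    p_xz, p_yz, p_z = marginal(joint, (0, 2)), marginal(joint, (1, 2)), marginal(joint, (2,))
    xs = {x for x, _, _ in joint}
    ys = {y for _, y, _ in joint}
    zs = {z for _, _, z in joint}
    return all(
        joint.get((x, y, z), Fraction(0)) * p_z[(z,)] == p_xz[(x, z)] * p_yz[(y, z)]
        for x, y, z in product(xs, ys, zs)
    )


def conditionally_independent_joint(p_z, q_x, q_y):
    """Hypothetical constructor: given Z = z, draw X ~ Bernoulli(q_x[z]) and
    Y ~ Bernoulli(q_y[z]) independently, so X ⊥ Y | Z holds by construction."""

    def bern(v, q):
        return q if v == 1 else 1 - q

    return {
        (x, y, z): pz * bern(x, q_x[z]) * bern(y, q_y[z])
        for z, pz in p_z.items()
        for x, y in product((0, 1), repeat=2)
    }


half = Fraction(1, 2)
joint_ci = conditionally_independent_joint(
    {0: half, 1: half},                      # p(z)
    {0: Fraction(1, 5), 1: Fraction(3, 4)},  # Pr(X = 1 | Z = z)
    {0: Fraction(1, 3), 1: half},            # Pr(Y = 1 | Z = z)
)
# Z is constant while X = Y is a fair coin, so X and Y stay dependent given Z.
joint_dep = {(0, 0, 0): half, (1, 1, 0): half}

print(is_cond_independent(joint_ci))   # True
print(is_cond_independent(joint_dep))  # False
```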

Two events R and B are conditionally independent given a σ-algebra Σ if

$$\Pr(R \cap B \mid \Sigma) = \Pr(R \mid \Sigma)\,\Pr(B \mid \Sigma) \quad \text{a.s.},$$

where $\Pr(A \mid \Sigma)$ denotes the conditional expectation of the indicator function of the event $A$, $\chi_A$, given the σ-algebra $\Sigma$. That is,

$$\Pr(A \mid \Sigma) := \operatorname{E}[\chi_A \mid \Sigma].$$

Two random variables X and Y are conditionally independent given a σ-algebra Σ if the above equation holds for all R in σ(X) and B in σ(Y).
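When Σ is generated by a finite partition of the sample space whose cells all have positive probability, E[χ_A | Σ] is constant on each cell P and equals Pr(A ∩ P) / Pr(P), so Pr(A | Σ) is itself a random variable. The sketch below assumes a fair die with the uniform measure and a hypothetical two-cell partition; the helper names are illustrative. Because every outcome has positive probability here, "almost surely" reduces to "at every outcome".

```python
from fractions import Fraction

# Illustrative assumption: one roll of a fair die with the uniform measure.
OMEGA = frozenset(range(1, 7))


def pr(event):
    return Fraction(len(event), len(OMEGA))


def cond_prob(event, partition):
    """Pr(event | Σ) as a map ω -> value, for Σ generated by `partition`.

    On each cell P the conditional expectation of the indicator of `event`
    is constant and equals Pr(event ∩ P) / Pr(P).
    """
    return {w: pr(event & cell) / pr(cell) for cell in partition for w in cell}


def cond_independent(r, b, partition):
    """Pr(R ∩ B | Σ) == Pr(R | Σ) Pr(B | Σ) at every outcome ω."""
    p_rb, p_r, p_b = (cond_prob(e, partition) for e in (r & b, r, b))
    return all(p_rb[w] == p_r[w] * p_b[w] for w in OMEGA)


R, B = frozenset({1, 2}), frozenset({1, 3})
SIGMA = [frozenset({1, 2, 3, 4}), frozenset({5, 6})]  # hypothetical partition
TRIVIAL = [OMEGA]  # generates {∅, Ω}, i.e. no information

print(cond_independent(R, B, SIGMA))    # True
print(cond_independent(R, B, TRIVIAL))  # False
```

With the trivial σ-algebra the condition reduces to ordinary (unconditional) independence, which fails here.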

Two random variables X and Y are conditionally independent given a random variable W if they are independent given σ(W): the σ-algebra generated by W. This is commonly written:

$$X \perp\!\!\!\perp Y \mid W \quad \text{or} \quad X \perp Y \mid W.$$

This is read "X is independent of Y, given W"; the conditioning applies to the whole statement: "(X is independent of Y) given W".

If W takes values in a countable set, this is equivalent to X and Y being conditionally independent given each event of the form [W = w] that has positive probability.

Conditional independence of more than two events, or of more than two random variables, is defined analogously.

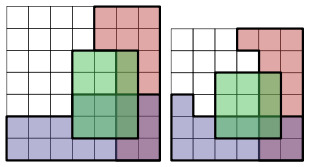

The following two examples show that $X \perp\!\!\!\perp Y$ neither implies nor is implied by $X \perp\!\!\!\perp Y \mid W$.

First, suppose W is 0 with probability 0.5 and 1 otherwise. When W = 0, take X and Y to be independent, each having the value 0 with probability 0.99 and the value 1 otherwise. When W = 1, X and Y are again independent, but this time they take the value 1 with probability 0.99. Then $X \perp\!\!\!\perp Y \mid W$. But X and Y are dependent, because Pr(X = 0) < Pr(X = 0 | Y = 0). This is because Pr(X = 0) = 0.5, but if Y = 0 then it is very likely that W = 0 and thus that X = 0 as well, so Pr(X = 0 | Y = 0) > 0.5.

For the second example, suppose $X \perp\!\!\!\perp Y$, each taking the values 0 and 1 with probability 0.5. Let W be the product XY. Then Pr(X = 0 | W = 0) = 2/3, but Pr(X = 0 | Y = 0, W = 0) = 1/2, so $X \perp\!\!\!\perp Y \mid W$ is false.

The second example is also an instance of "explaining away". See Kevin Murphy's tutorial, where X and Y take the values "brainy" and "sporty".
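Both examples can be verified with exact arithmetic by enumerating the eight outcomes (x, y, w). The sketch below encodes each example as a joint pmf dictionary; the helper names `pr`, `independent` and `cond_independent` are illustrative, and conditional independence is checked in the division-free form Pr(X=a, Y=b, W=c) Pr(W=c) = Pr(X=a, W=c) Pr(Y=b, W=c).

```python
from fractions import Fraction
from itertools import product


def pr(joint, pred):
    """Total probability of the outcomes (x, y, w) for which pred(x, y, w) holds."""
    return sum((p for (x, y, w), p in joint.items() if pred(x, y, w)), Fraction(0))


def independent(joint):
    """X ⊥ Y: Pr(X=a, Y=b) == Pr(X=a) Pr(Y=b) for all a, b."""
    return all(
        pr(joint, lambda x, y, w: (x, y) == (a, b))
        == pr(joint, lambda x, y, w: x == a) * pr(joint, lambda x, y, w: y == b)
        for a, b in product((0, 1), repeat=2)
    )


def cond_independent(joint):
    """X ⊥ Y | W, checked as Pr(X=a, Y=b, W=c) Pr(W=c) == Pr(X=a, W=c) Pr(Y=b, W=c)."""
    ws = {w for (_, _, w) in joint}
    return all(
        pr(joint, lambda x, y, w: (x, y, w) == (a, b, c)) * pr(joint, lambda x, y, w: w == c)
        == pr(joint, lambda x, y, w: (x, w) == (a, c)) * pr(joint, lambda x, y, w: (y, w) == (b, c))
        for a, b, c in product((0, 1), (0, 1), ws)
    )


def bern(v, q):
    """Pr(V = v) for V ~ Bernoulli(q)."""
    return q if v == 1 else 1 - q


# First example: W is a fair coin; given W, X and Y are independent with
# Pr(X = 1 | W = w) = Pr(Y = 1 | W = w) = q[w].
q = {0: Fraction(1, 100), 1: Fraction(99, 100)}
example1 = {
    (x, y, w): Fraction(1, 2) * bern(x, q[w]) * bern(y, q[w])
    for x, y, w in product((0, 1), repeat=3)
}

# Second example: X, Y independent fair coins and W = XY.
example2 = {(x, y, x * y): Fraction(1, 4) for x, y in product((0, 1), repeat=2)}

print(cond_independent(example1), independent(example1))  # True False
print(pr(example1, lambda x, y, w: x == 0))  # 1/2
print(pr(example1, lambda x, y, w: (x, y) == (0, 0)) / pr(example1, lambda x, y, w: y == 0))  # 4901/5000

print(independent(example2), cond_independent(example2))  # True False
print(pr(example2, lambda x, y, w: (x, w) == (0, 0)) / pr(example2, lambda x, y, w: w == 0))  # 2/3
print(pr(example2, lambda x, y, w: (x, y, w) == (0, 0, 0)) / pr(example2, lambda x, y, w: (y, w) == (0, 0)))  # 1/2
```

In the first example Pr(X = 0 | Y = 0) comes out at 0.9802, well above Pr(X = 0) = 0.5.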

Uses in Bayesian statistics

Let p be the proportion of voters who will vote "yes" in an upcoming referendum. In taking an opinion poll, one chooses n voters randomly from the population. For i = 1, ..., n, let Xi = 1 or 0 according as the ith chosen voter will or will not vote "yes".

In a frequentist approach to statistical inference, one would not attribute any probability distribution to p (unless the probabilities could be somehow interpreted as relative frequencies of occurrence of some event or as proportions of some population) and one would say that X1, ..., Xn are independent random variables.

By contrast, in a Bayesian approach to statistical inference, one would assign a probability distribution to p regardless of the non-existence of any such "frequency" interpretation, and one would construe the probabilities as degrees of belief that p is in any interval to which a probability is assigned. In that model, the random variables X1, ..., Xn are not independent, but they are conditionally independent given the value of p. In particular, if a large number of the Xs are observed to be equal to 1, that would imply a high conditional probability, given that observation, that p is near 1, and thus a high conditional probability, given that observation, that the next X to be observed will be equal to 1.
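The Bayesian argument can be made concrete under an extra assumption that the text does not fix: a uniform prior on p. Given p, the Xi are independent Bernoulli(p) draws, so a particular sequence with k ones among n has conditional probability p^k (1 - p)^(n - k); averaging over the uniform prior gives the Beta integral k! (n - k)! / (n + 1)!. The sketch below, with hypothetical function names, shows that the Xi are dependent once p is integrated out, and that observing many 1s raises the predictive probability that the next X equals 1.

```python
from fractions import Fraction
from math import factorial

# Assumption (not specified in the text): uniform prior on p over [0, 1].


def sequence_prob(xs):
    """Pr(X1 = xs[0], ..., Xn = xs[n-1]) with p integrated out under the uniform prior.

    Given p the Xi are i.i.d. Bernoulli(p), so the sequence has conditional
    probability p**k * (1 - p)**(n - k); integrating that over [0, 1]
    gives k! (n - k)! / (n + 1)!.
    """
    n, k = len(xs), sum(xs)
    return Fraction(factorial(k) * factorial(n - k), factorial(n + 1))


def predictive_yes(xs):
    """Pr(next X = 1 | observed xs), as a ratio of sequence probabilities."""
    return sequence_prob(list(xs) + [1]) / sequence_prob(xs)


# Marginally the Xi are dependent: Pr(X1 = 1, X2 = 1) = 1/3, not (1/2) * (1/2).
print(sequence_prob([1]), sequence_prob([1, 1]))  # 1/2 1/3
# Many observed 1s raise the predictive probability that the next X is 1.
print(predictive_yes([]), predictive_yes([1] * 9 + [0]))  # 1/2 5/6
```

Under this prior the predictive probability is (k + 1) / (n + 2), Laplace's rule of succession; other priors on p change the numbers but not the qualitative conclusion.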

Rules of conditional independence

A set of rules governing statements of conditional independence has been derived from the basic definition.

Note: since these implications hold for any probability space, they still hold if one considers a sub-universe by conditioning everything on another variable, say K. For example, $X \perp\!\!\!\perp Y \Rightarrow Y \perp\!\!\!\perp X$ would also mean that $X \perp\!\!\!\perp Y \mid K \Rightarrow Y \perp\!\!\!\perp X \mid K$.

Note: below, the comma can be read as "AND".

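Decomposition

$$X \perp\!\!\!\perp A, B \;\Rightarrow\; X \perp\!\!\!\perp A \text{ and } X \perp\!\!\!\perp B$$

Proof:

- $p_{X,A,B}(x,a,b) = p_X(x)\, p_{A,B}(a,b)$ (meaning of $X \perp\!\!\!\perp A, B$)

- $\int p_{X,A,B}(x,a,b)\, db = \int p_X(x)\, p_{A,B}(a,b)\, db$ (ignore variable B by integrating it out)

- $p_{X,A}(x,a) = p_X(x)\, p_A(a)$

Repeating the proof with A integrated out shows the independence of X and B.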
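For discrete variables the integrals in the proof become sums. The sketch below follows the three proof steps on hypothetical probability tables for X and for the pair (A, B); any valid pmfs would do.

```python
from fractions import Fraction
from itertools import product

# Hypothetical tables; any valid pmfs for X and for the pair (A, B) would do.
p_x = {0: Fraction(1, 3), 1: Fraction(2, 3)}
p_ab = {(0, 0): Fraction(1, 2), (0, 1): Fraction(1, 8),
        (1, 0): Fraction(1, 8), (1, 1): Fraction(1, 4)}
bits = (0, 1)

# Meaning of X ⊥ A, B: the joint pmf factorizes as p_X(x) p_{A,B}(a, b).
p_xab = {(x, a, b): p_x[x] * p_ab[a, b] for x, (a, b) in product(p_x, p_ab)}

# Ignore B by summing it out (the discrete counterpart of integrating it out).
p_xa = {(x, a): sum(p_xab[x, a, b] for b in bits) for x, a in product(bits, bits)}
p_a = {a: sum(p_ab[a, b] for b in bits) for a in bits}

# The result factorizes as p_X(x) p_A(a), i.e. X ⊥ A.
x_indep_a = all(p_xa[x, a] == p_x[x] * p_a[a] for x, a in product(bits, bits))

# Repeating the argument with A summed out gives X ⊥ B.
p_xb = {(x, b): sum(p_xab[x, a, b] for a in bits) for x, b in product(bits, bits)}
p_b = {b: sum(p_ab[a, b] for a in bits) for b in bits}
x_indep_b = all(p_xb[x, b] == p_x[x] * p_b[b] for x, b in product(bits, bits))

print(x_indep_a, x_indep_b)  # True True
```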

Weak union

$$X \perp\!\!\!\perp A, B \;\Rightarrow\; X \perp\!\!\!\perp A \mid B$$

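Contraction

$$X \perp\!\!\!\perp A \mid B \text{ and } X \perp\!\!\!\perp B \;\Rightarrow\; X \perp\!\!\!\perp A, B$$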

Contraction-weak-union-decomposition

Putting the above three together, we have:

- $X \perp\!\!\!\perp A \mid B$ and $X \perp\!\!\!\perp B$ $\iff$ $X \perp\!\!\!\perp A, B$
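The combined rule can be spot-checked numerically on discrete distributions. The sketch below defines a hypothetical helper `ci(joint, i, j, k)` that tests whether the coordinate groups i and j of a joint pmf are conditionally independent given the group k. It is not a proof, only a check that both sides of the equivalence agree on two example distributions: one where X ⊥ A, B holds by construction and one where X copies A.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product


def marginal(joint, idx):
    """Marginal pmf of the coordinates in `idx`; missing keys read as 0."""
    out = defaultdict(Fraction)
    for outcome, p in joint.items():
        out[tuple(outcome[c] for c in idx)] += p
    return out


def ci(joint, i, j, k=()):
    """True if coordinates i ⊥ coordinates j given coordinates k, tested as
    p(i, j, k) p(k) == p(i, k) p(j, k) for every combination of values."""
    p_ijk, p_k = marginal(joint, i + j + k), marginal(joint, k)
    p_ik, p_jk = marginal(joint, i + k), marginal(joint, j + k)
    n = len(next(iter(joint)))
    support = [sorted({o[c] for o in joint}) for c in range(n)]
    for v in product(*support):
        vi, vj, vk = (tuple(v[c] for c in s) for s in (i, j, k))
        if p_ijk[vi + vj + vk] * p_k[vk] != p_ik[vi + vk] * p_jk[vj + vk]:
            return False
    return True


X, A, B = (0,), (1,), (2,)  # coordinate positions in each outcome (x, a, b)
p_x = {0: Fraction(1, 3), 1: Fraction(2, 3)}
p_ab = {(0, 0): Fraction(1, 2), (0, 1): Fraction(1, 8),
        (1, 0): Fraction(1, 8), (1, 1): Fraction(1, 4)}

# X ⊥ A, B holds by construction: p(x, a, b) = p_X(x) p_{A,B}(a, b).
indep = {(x, a, b): p_x[x] * p_ab[a, b] for x, (a, b) in product(p_x, p_ab)}
# X copies A, so X ⊥ A, B fails.
x_copies_a = {(a, a, b): p for (a, b), p in p_ab.items()}

for joint in (indep, x_copies_a):
    left = ci(joint, X, A, B) and ci(joint, X, B)  # X ⊥ A | B and X ⊥ B
    right = ci(joint, X, A + B)                     # X ⊥ A, B
    print(left, right)  # True True, then False False
```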

Intersection

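If every combination of values of X, A, B has positive probability (a strictly positive joint distribution), then the following also holds:

$$X \perp\!\!\!\perp A \mid B \text{ and } X \perp\!\!\!\perp B \mid A \;\Rightarrow\; X \perp\!\!\!\perp A, B$$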
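The positivity assumption cannot simply be dropped. In the sketch below, X, A and B are all copies of a single fair coin, so most value combinations have probability 0. Both conditional independences hold (given either copy, everything is determined), yet X is not independent of (A, B). The helper `ci` is a hypothetical checker using the division-free form p(i, j, k) p(k) = p(i, k) p(j, k).

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product


def marginal(joint, idx):
    """Marginal pmf of the coordinates in `idx`; missing keys read as 0."""
    out = defaultdict(Fraction)
    for outcome, p in joint.items():
        out[tuple(outcome[c] for c in idx)] += p
    return out


def ci(joint, i, j, k=()):
    """True if coordinates i ⊥ coordinates j given coordinates k."""
    p_ijk, p_k = marginal(joint, i + j + k), marginal(joint, k)
    p_ik, p_jk = marginal(joint, i + k), marginal(joint, j + k)
    n = len(next(iter(joint)))
    support = [sorted({o[c] for o in joint}) for c in range(n)]
    for v in product(*support):
        vi, vj, vk = (tuple(v[c] for c in s) for s in (i, j, k))
        if p_ijk[vi + vj + vk] * p_k[vk] != p_ik[vi + vk] * p_jk[vj + vk]:
            return False
    return True


X, A, B = (0,), (1,), (2,)  # coordinate positions in each outcome (x, a, b)
half = Fraction(1, 2)

# X = A = B: one fair coin copied three times. Outcomes such as (0, 1, 0)
# have probability 0, so the distribution is not strictly positive.
copies = {(0, 0, 0): half, (1, 1, 1): half}

print(ci(copies, X, A, B), ci(copies, X, B, A))  # True True
print(ci(copies, X, A + B))                       # False
```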

See also

- de Finetti's theorem

- conditional expectation