Point location

The point location problem is a fundamental topic of computational geometry. It finds applications in areas that deal with processing geometrical data: computer graphics, geographic information systems (GIS), motion planning, and computer-aided design (CAD).

In its most general form, the problem is: given a partition of the space into disjoint regions, determine the region in which a query point lies. For example, each time you click a mouse to follow a link in a web browser, this problem must be solved to determine which area of the computer screen is under the mouse pointer. A simple special case is the point-in-polygon problem, in which we need to determine whether the point is inside, outside, or on the boundary of a single polygon.

In many applications, we need to determine the location of several different points with respect to the same partition of the space. To solve this problem efficiently, it is useful to build a data structure that, given a query point, quickly determines which region contains it.

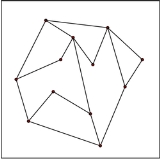

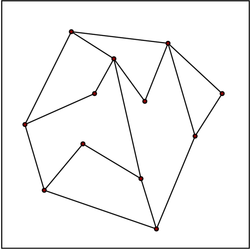

Planar case

In the planar case, we are given a planar subdivision S, formed by multiple polygons called faces, and need to determine which face contains a query point. A brute-force search of each face using the point-in-polygon algorithm is possible, but usually not feasible for subdivisions of high complexity. Several different approaches lead to optimal data structures, with O(n) storage space and O(log n) query time, where n is the total number of vertices in S. For simplicity, we assume that the planar subdivision is contained inside a square bounding box.
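As a baseline, the brute-force search can be sketched as follows. This is only an illustration: the names `point_in_polygon` and `locate_brute_force` are hypothetical, faces are assumed to be given as lists of (x, y) vertices, and the even-odd ray-casting test used here does not treat points exactly on a boundary specially.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


def point_in_polygon(q: Point, poly: Sequence[Point]) -> bool:
    """Even-odd ray casting: count edges crossed by a ray going right from q."""
    x, y = q
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        # Only edges that straddle the horizontal line through q can be crossed.
        if (y1 > y) != (y2 > y):
            # x-coordinate at which the edge meets that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x_cross > x:
                inside = not inside
    return inside


def locate_brute_force(q: Point, faces: Sequence[Sequence[Point]]) -> Optional[int]:
    """Return the index of the first face containing q, or None if there is none."""
    for index, face in enumerate(faces):
        if point_in_polygon(q, face):
            return index
    return None


# Two unit squares sharing an edge (assumed example data).
faces = [
    [(0, 0), (1, 0), (1, 1), (0, 1)],
    [(1, 0), (2, 0), (2, 1), (1, 1)],
]
```

Each query touches every edge of every face, so it takes time linear in the size of the subdivision, which is what the data structures below avoid.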

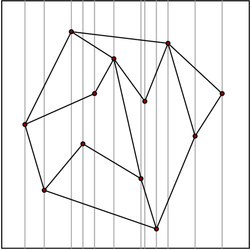

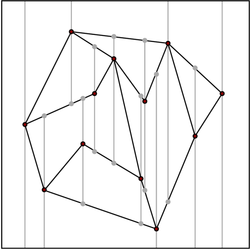

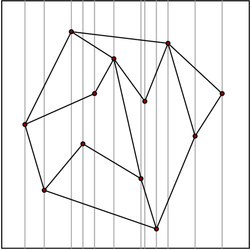

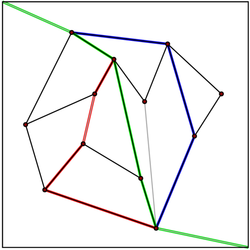

Slab decomposition

The simplest and earliest data structure to achieve O(log n) time was discovered by Dobkin and Lipton in 1976. It is based on subdividing S using vertical lines that pass through each vertex in S. The region between two consecutive vertical lines is called a slab. Notice that each slab is divided by non-intersecting line segments that completely cross the slab from left to right. The region between two consecutive segments inside a slab corresponds to a unique face of S. Therefore, we reduce our point location problem to two simpler problems:

- Given a subdivision of the plane into vertical slabs, determine which slab contains a given point.

- Given a slab subdivided into regions by non-intersecting segments that completely cross the slab from left to right, determine which region contains a given point.

The first problem can be solved in O(log n) time by binary search on the x-coordinates of the vertical lines. The second problem can also be solved in O(log n) time by binary search: because the segments do not intersect and completely cross the slab, they can be sorted vertically inside each slab.
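The two binary searches can be sketched as below. This is a minimal illustration under stated assumptions, not a reference implementation: the class name `SlabIndex` and its method `segment_below` are hypothetical, the input is assumed to be a list of non-crossing segments, and the query reports the segment directly below the point (which identifies the region inside the slab) rather than a face label.

```python
from bisect import bisect_right
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]


def y_at(seg: Segment, x: float) -> float:
    """Height of a non-vertical segment at abscissa x."""
    (x1, y1), (x2, y2) = seg
    return y1 + (y2 - y1) * (x - x1) / (x2 - x1)


class SlabIndex:
    def __init__(self, segments: List[Segment]) -> None:
        # One vertical line through every vertex.
        self.xs = sorted({p[0] for s in segments for p in s})
        # Vertical segments lie on slab boundaries and never cross a slab.
        usable = [s for s in segments if s[0][0] != s[1][0]]
        self.slabs: List[List[Segment]] = []
        for left, right in zip(self.xs, self.xs[1:]):
            mid = (left + right) / 2
            crossing = [s for s in usable
                        if min(s[0][0], s[1][0]) <= left
                        and max(s[0][0], s[1][0]) >= right]
            # Non-crossing segments have a fixed vertical order inside a slab.
            crossing.sort(key=lambda s: y_at(s, mid))
            self.slabs.append(crossing)

    def segment_below(self, q: Point) -> Optional[Segment]:
        qx, qy = q
        # First binary search: which slab contains qx?
        slab = bisect_right(self.xs, qx) - 1
        if slab < 0 or slab >= len(self.slabs):
            return None  # q is outside the x-range covered by the slabs
        order = self.slabs[slab]
        # Second binary search: count the segments at or below q.
        lo, hi = 0, len(order)
        while lo < hi:
            mid = (lo + hi) // 2
            if y_at(order[mid], qx) <= qy:
                lo = mid + 1
            else:
                hi = mid
        return order[lo - 1] if lo > 0 else None


bottom = ((0, 0), (4, 0))
top = ((0, 4), (4, 4))
diagonal = ((1, 1), (3, 3))
index = SlabIndex([bottom, top, diagonal])
```

Each slab stores its own sorted copy of the segments that cross it, which keeps the query simple but is exactly what drives up the storage.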

While this algorithm allows point location in logarithmic time and is easy to implement, the space required to build the slabs and the regions contained within the slabs can be as high as O(n²), since each slab can cross a significant fraction of the segments.

Several authors noticed that the segments that cross two adjacent slabs are mostly the same. Therefore, the size of the data structure could potentially be reduced by applying some kind of compression, where only the difference between two adjacent slabs is stored. Sarnak and Tarjan managed to use this idea to reduce the storage space to O(n), while maintaining the O(log n) query time. Unfortunately, the data structure becomes highly complex.

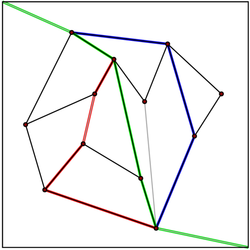

Monotone subdivisions

A (vertical) monotone chain is a path such that the y-coordinate never increases along the path. A simple polygon is (vertical) monotone if it is formed by two monotone chains, with the first and last vertices in common. It is possible to add some edges to a planar subdivision in order to make all faces monotone, obtaining what is called a monotone subdivision. This process does not add any vertices to the subdivision (therefore, the size remains O(n)), and can be performed in O(n log n) time by plane sweep (it can also be performed in linear time, using polygon triangulation). Therefore, there is no loss of generality if we restrict our data structure to the case of monotone subdivisions, as we do in this section.

The weakness of the slab decomposition is that the vertical lines create additional segments in the decomposition, making it difficult to achieve O(n) storage space. Edelsbrunner, Guibas, and Stolfi discovered an optimal data structure that only uses the edges in a monotone subdivision. The idea is to use vertical monotone chains, instead of vertical lines, to partition the subdivision.

Converting this general idea to an actual efficient data structure is not a simple task. First, we need to be able to compute a monotone chain that divides the subdivision into two halves of similar sizes. Second, since some edges may be contained in several monotone chains, we need to be careful to guarantee that the storage space is O(n). Third, testing whether a point is on the left or the right side of a monotone chain takes O(n) time if performed naively.

Details on how to solve the first two issues are beyond the scope of this article. We briefly mention how to address the third issue. Using binary search, we can test whether a point is to the left or right of a monotone chain in O(log n) time. As we need to perform another nested binary search through O(log n) chains to actually determine the point location, the query time is O(log² n). To achieve O(log n) query time, we need to use fractional cascading, keeping pointers between the edges of different monotone chains.
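The O(log n) side test against a single chain can be sketched as follows. The class name `MonotoneChain` and its `side` method are hypothetical, and the sketch makes simplifying assumptions: the chain is listed from top to bottom with strictly decreasing y-coordinates (no horizontal edges), and the query point's y-coordinate lies within the chain's vertical range. Fractional cascading is not shown.

```python
from bisect import bisect_left
from typing import List, Tuple

Point = Tuple[float, float]


class MonotoneChain:
    def __init__(self, vertices: List[Point]) -> None:
        # Vertices from top to bottom, with strictly decreasing y (assumption).
        self.vertices = vertices
        # Negated y-coordinates are increasing, as bisect requires.
        self.keys = [-y for _, y in vertices]

    def side(self, q: Point) -> int:
        """Return -1 if q is left of the chain, +1 if right, 0 if on it."""
        qx, qy = q
        # Binary search for the edge whose y-range contains qy.
        i = bisect_left(self.keys, -qy)
        i = min(max(i, 1), len(self.vertices) - 1)
        (x1, y1), (x2, y2) = self.vertices[i - 1], self.vertices[i]
        # Orientation test against the downward-pointing edge:
        # a positive cross product means q has the larger x-coordinate.
        cross = (x2 - x1) * (qy - y1) - (y2 - y1) * (qx - x1)
        return (cross > 0) - (cross < 0)


chain = MonotoneChain([(0, 4), (1, 3), (0, 2), (2, 0)])
```

Repeating this test over a balanced tree of O(log n) chains gives the O(log² n) query time mentioned above.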

Triangulation refinement

A polygon with m vertices can be partitioned into m-2 triangles. There are numerous algorithms to triangulate a polygon efficiently, the fastest having O(n) worst-case time. Therefore, we can decompose each polygon of our subdivision into triangles, and restrict our data structure to the case of subdivisions formed exclusively by triangles. Kirkpatrick gives a data structure for point location in triangulated subdivisions with O(n) storage space and O(log n) query time.

The general idea is to build a hierarchy of triangles. To perform a query, we start by finding the top-level triangle that contains the query point. Since the number of top-level triangles is bounded by a constant, this operation can be performed in O(1) time. Each triangle has pointers to the triangles it intersects in the next level of the hierarchy, and the number of pointers is also bounded by a constant. We proceed with the query by finding which triangle contains the query point level by level.

The data structure is built in the opposite order, that is, bottom-up. We start with the triangulated subdivision, and choose an independent set of vertices to be removed. After removing the vertices, we retriangulate the subdivision. Because the subdivision is formed by triangles, a greedy algorithm can find an independent set that contains a constant fraction of the vertices. Therefore, the number of removal steps is O(log n).
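One possible greedy selection step is sketched below. It is an illustration only: the function name `greedy_independent_set` is hypothetical, the triangulation is assumed to be given as an adjacency map, the `protected` set (for example, the vertices of the outer boundary) and the degree bound `max_degree` are assumptions of this sketch rather than details stated in the text. Restricting the choice to low-degree vertices keeps each retriangulated hole of constant size.

```python
from typing import Dict, FrozenSet, Hashable, Set


def greedy_independent_set(
    adj: Dict[Hashable, Set[Hashable]],
    protected: FrozenSet[Hashable] = frozenset(),
    max_degree: int = 8,  # assumed constant; any small bound works for the sketch
) -> Set[Hashable]:
    """Greedily pick pairwise non-adjacent vertices of degree <= max_degree."""
    chosen: Set[Hashable] = set()
    blocked: Set[Hashable] = set(protected)
    for v in adj:
        if v in blocked or len(adj[v]) > max_degree:
            continue
        chosen.add(v)
        # Neighbours of a chosen vertex can no longer be chosen.
        blocked.add(v)
        blocked.update(adj[v])
    return chosen


# Triangle A, B, C with interior vertex p, and q inside triangle p, B, C.
adj = {
    "A": {"B", "C", "p"},
    "B": {"A", "C", "p", "q"},
    "C": {"A", "B", "p", "q"},
    "p": {"A", "B", "C", "q"},
    "q": {"B", "C", "p"},
}
removable = greedy_independent_set(adj, protected=frozenset({"A", "B", "C"}))
```

Removing the chosen vertices and retriangulating the resulting holes yields the next, coarser level of the hierarchy.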

A randomized

A randomized

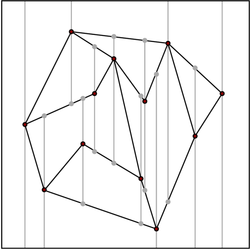

approach to this problem, and probably the most practical one, is based on trapezoidal decomposition, or trapezoidal map. A trapezoidal decomposition is obtained by shooting vertical bullets going both up and down from each vertex in the original subdivision. The bullets stop when they hit an edge, and form a new edge in the subdivision. This way, we obtain a subset of the slab decomposition, with only O(n) edges and vertices, since we only add two edges and two vertices for each vertex in the original subdivision.

It is not easy to see how to use a trapezoidal decomposition for point location, since a binary search similar to the one used in the slab decomposition can no longer be performed. Instead, we need to answer a query in the same fashion as the triangulation refinement approach, but the data structure is constructed top-down. Initially, we build a trapezoidal decomposition containing only the bounding box, and no internal vertex. Then, we add the segments from the subdivision, one by one, in random order, refining the trapezoidal decomposition. Using backwards analysis, we can show that the expected number of trapezoids created for each insertion is bounded by a constant.

We build a directed acyclic graph, where the vertices are the trapezoids that existed at some point in the refinement, and the directed edges connect the trapezoids obtained by subdivision. The expected depth of a search in this digraph, starting from the vertex corresponding to the bounding box, is O(log n).
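The search in such a graph can be sketched as follows. This is a simplified illustration, not the randomized construction itself: the `Trapezoid` class and `locate` function are hypothetical names, the graph is built by hand for a bounding box refined by a single segment, and the query point is assumed to lie inside the bounding box.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]


def y_at(seg: Segment, x: float) -> float:
    """Height of a non-vertical segment at abscissa x."""
    (x1, y1), (x2, y2) = seg
    return y1 + (y2 - y1) * (x - x1) / (x2 - x1)


@dataclass
class Trapezoid:
    name: str
    left: float       # x-coordinate of the left vertical side
    right: float      # x-coordinate of the right vertical side
    bottom: Segment   # segment bounding the trapezoid from below
    top: Segment      # segment bounding the trapezoid from above
    children: List["Trapezoid"] = field(default_factory=list)

    def contains(self, q: Point) -> bool:
        x, y = q
        return (self.left <= x <= self.right
                and y_at(self.bottom, x) <= y <= y_at(self.top, x))


def locate(root: Trapezoid, q: Point) -> Trapezoid:
    """Follow edges from the bounding box down to a current trapezoid."""
    node = root
    while node.children:
        node = next(c for c in node.children if c.contains(q))
    return node


floor = ((0, 0), (4, 0))
ceiling = ((0, 4), (4, 4))
s = ((1, 2), (3, 2))  # the single inserted segment (assumed example data)

box = Trapezoid("box", 0, 4, floor, ceiling)
box.children = [
    Trapezoid("A", 0, 1, floor, ceiling),  # left of s
    Trapezoid("C", 1, 3, s, ceiling),      # above s
    Trapezoid("D", 1, 3, floor, s),        # below s
    Trapezoid("B", 3, 4, floor, ceiling),  # right of s
]
```

In a full implementation, each later insertion would attach the newly created trapezoids as children of the trapezoids they replace, and the same descent would continue through those levels.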

Higher dimensions

There are no known general point location data structures with linear space and logarithmic query time for dimensions greater than 2. Therefore, we need to sacrifice either query time or storage space, or restrict ourselves to some less general type of subdivision.

In three-dimensional space, it is possible to answer point location queries in O(log² n) time using O(n log n) space. The general idea is to maintain several planar point location data structures, corresponding to the intersection of the subdivision with n parallel planes that contain each subdivision vertex. A naive use of this idea would increase the storage space to O(n²). In the same fashion as in the slab decomposition, the similarity between consecutive data structures can be exploited in order to reduce the storage space to O(n log n), but the query time increases to O(log² n).

In d-dimensional space, point location can be solved by recursively projecting the faces into a (d-1)-dimensional space. While the query time is O(log n), the storage space can be very high. The high complexity of the d-dimensional data structures led to the study of special types of subdivision.

One important example is the case of arrangements of hyperplanes. An arrangement of n hyperplanes defines O(n^d) cells, but point location can be performed in O(log n) time with O(n^d) space by using Chazelle's hierarchical cuttings.

Another special type of subdivision is called rectilinear (or orthogonal) subdivision. In a rectilinear subdivision, all edges are parallel to one of the d orthogonal axes. In this case, point location can be answered in O(log^(d-1) n) time with O(n) space.

External links

- Point-Location Source Repository at Stony Brook University

- Point-Location Queries in CGAL, the Computational Geometry Algorithms Library