LZW


Lempel–Ziv–Welch (LZW) is a universal lossless data compression algorithm created by Abraham Lempel, Jacob Ziv, and Terry Welch. It was published by Welch in 1984 as an improved implementation of the LZ78 algorithm published by Lempel and Ziv in 1978. The algorithm is simple to implement and has the potential for very high throughput in hardware implementations.


Patents have been issued in the United States and other countries for LZW and similar algorithms. LZ78 was covered by a U.S. patent by Lempel, Ziv, Cohn, and Eastman, assigned to Sperry Corporation (later Unisys Corporation) and filed on August 10, 1981. Two U.S. patents were issued for the LZW algorithm: one by Victor S. Miller and Mark N. Wegman, assigned to IBM and originally filed on June 1, 1983, and one by Welch, assigned to Sperry Corporation (later Unisys Corporation) and filed on June 20, 1983. The Welch patent expired on June 20, 2003 (http://www.unisys.com/about__unisys/lzw). All patents on the LZW algorithm worldwide have also expired (see Graphics Interchange Format#Unisys and LZW patent enforcement).

US Patent 4,558,302 received a lot of negative press after LZW compression was used in the GIF image format.


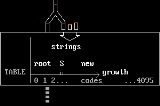

Algorithm

The scenario described in Welch's 1984 paper encodes sequences of 8-bit data as fixed-length 12-bit codes. The codes from 0 to 255 represent 1-character sequences consisting of the corresponding 8-bit character, and the codes 256 through 4095 are created in a dictionary for sequences encountered in the data as it is encoded. At each stage in compression, input bytes are gathered into a sequence until the next character would make a sequence for which there is no code yet in the dictionary. The code for the sequence (without that character) is emitted, and a new code (for the sequence with that character) is added to the dictionary.

The idea was quickly adapted to other situations. In an image based on a color table, for example, the natural character alphabet is the set of color table indexes, and in the 1980s, many images had small color tables (on the order of 16 colors). For such a reduced alphabet, the full 12-bit codes yielded poor compression unless the image was large, so the idea of a variable-width code was introduced: codes typically start one bit wider than the symbols being encoded, and as each code size is used up, the code width increases by 1 bit, up to some prescribed maximum (typically 12 bits).

Further refinements include reserving a code to indicate that the code table should be cleared (a "clear code", typically the first value immediately after the values for the individual alphabet characters), and a code to indicate the end of data (a "stop code", typically one greater than the clear code). The clear code allows the table to be reinitialized after it fills up, which lets the encoding adapt to changing patterns in the input data. Smart encoders can monitor the compression efficiency and clear the table whenever the existing table no longer matches the input well.

Since the codes are added in a manner determined by the data, the decoder mimics building the table as it sees the resulting codes. It is critical that the encoder and decoder agree on which variety of LZW is being used: the size of the alphabet, the maximum code width, whether variable-width encoding is being used, the initial code size, whether to use the clear and stop codes (and what values they have). Most formats that employ LZW build this information into the format specification or provide explicit fields for them in a compression header for the data.

Encoding

A high-level view of the encoding algorithm is shown here:

1. Initialize the dictionary to contain all strings of length one.

2. Find the longest string W in the dictionary that matches the current input.

3. Emit the dictionary index for W to output and remove W from the input.

4. Add W followed by the next symbol in the input to the dictionary.

5. Go to Step 2.

A dictionary is initialized to contain the single-character strings corresponding to all the possible input characters (and nothing else except the clear and stop codes if they're being used). The algorithm works by scanning through the input string for successively longer substrings until it finds one that is not in the dictionary. When such a string is found, the index for the string less the last character (i.e., the longest substring that is in the dictionary) is retrieved from the dictionary and sent to output, and the new string (including the last character) is added to the dictionary with the next available code. The last input character is then used as the next starting point to scan for substrings.

In this way, successively longer strings are registered in the dictionary and made available for subsequent encoding as single output values. The algorithm works best on data with repeated patterns, so the initial parts of a message will see little compression. As the message grows, however, the compression ratio tends asymptotically to the maximum.
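The encoding steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `lzw_encode` is hypothetical, the alphabet is assumed to be 8-bit bytes, codes are returned as plain integers (no bit packing), and the table is allowed to grow without the 12-bit limit mentioned earlier.

```python
def lzw_encode(data: bytes) -> list[int]:
    """Return the LZW codes for data (assumed 8-bit alphabet, unbounded table)."""
    # Codes 0..255 stand for the single-byte strings.
    dictionary = {bytes([i]): i for i in range(256)}
    next_code = 256
    codes = []
    w = b""  # longest dictionary match found so far
    for byte in data:
        candidate = w + bytes([byte])
        if candidate in dictionary:
            w = candidate                      # keep extending the match
        else:
            codes.append(dictionary[w])        # emit code for the longest match
            dictionary[candidate] = next_code  # register match + next symbol
            next_code += 1
            w = bytes([byte])                  # restart from the unmatched symbol
    if w:
        codes.append(dictionary[w])            # flush the final match
    return codes


print(lzw_encode(b"ABABABA"))  # [65, 66, 256, 258]
```

Seven input bytes become four codes here because the repeated "AB" and "ABA" patterns are already in the dictionary by the time they recur.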

Decoding

The decoding algorithm works by reading a value from the encoded input and outputting the corresponding string from the initialized dictionary. At the same time it obtains the next value from the input, and adds to the dictionary the concatenation of the string just output and the first character of the string obtained by decoding the next input value. The decoder then proceeds to the next input value (which was already read in as the "next value" in the previous pass) and repeats the process until there is no more input, at which point the final input value is decoded without any more additions to the dictionary.

In this way the decoder builds up a dictionary which is identical to that used by the encoder, and uses it to decode subsequent input values. Thus the full dictionary does not need to be sent with the encoded data; just the initial dictionary containing the single-character strings is sufficient (and is typically defined beforehand within the encoder and decoder rather than being explicitly sent with the encoded data).
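A matching decoder might look like the following sketch. The name `lzw_decode` is hypothetical, and the same assumptions apply as for an unpacked 8-bit encoder: integer codes, codes 0 to 255 preassigned to single bytes, and no table size limit. The branch for a code that is not yet in the table corresponds to the special case discussed in the decoding part of the Example section.

```python
def lzw_decode(codes: list[int]) -> bytes:
    """Rebuild the original bytes from LZW codes (assumed 8-bit alphabet)."""
    if not codes:
        return b""
    dictionary = {i: bytes([i]) for i in range(256)}
    next_code = 256
    previous = dictionary[codes[0]]   # the first code is always a single byte
    output = [previous]
    for code in codes[1:]:
        if code in dictionary:
            entry = dictionary[code]
        elif code == next_code:
            # Code the encoder created on its previous step: it must be the
            # previous string plus that string's own first byte.
            entry = previous + previous[:1]
        else:
            raise ValueError(f"invalid LZW code: {code}")
        output.append(entry)
        # Add the entry the encoder added one step earlier:
        # previous string + first byte of the string just decoded.
        dictionary[next_code] = previous + entry[:1]
        next_code += 1
        previous = entry
    return b"".join(output)


print(lzw_decode([65, 66, 256, 258]))  # b'ABABABA'
```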

Variable-width codes

If variable-width codes are being used, the encoder and decoder must be careful to change the width at the same points in the encoded data, or they will disagree about where the boundaries between individual codes fall in the stream. In the standard version, the encoder increases the width from p to p + 1 when a sequence ω + s is encountered that is not in the table (so that a code must be added for it) but the next available code in the table is 2^p (the first code requiring p + 1 bits). The encoder emits the code for ω at width p (since that code does not require p + 1 bits), and then increases the code width so that the next code emitted will be p + 1 bits wide.

The decoder is always one code behind the encoder in building the table, so when it sees the code for ω, it will generate an entry for code 2^p − 1. Since this is the point where the encoder will increase the code width, the decoder must increase the width here as well: at the point where it generates the largest code that will fit in p bits.

Unfortunately, some early implementations of the encoding algorithm increase the code width and then emit ω at the new width instead of the old width, so that to the decoder it looks like the width changes one code too early. This is called "Early Change"; it caused so much confusion that Adobe now allows both versions in PDF files, but includes an explicit flag in the header of each LZW-compressed stream to indicate whether Early Change is being used. Most graphic file formats do not use Early Change.

When the table is cleared in response to a clear code, both encoder and decoder change the code width after the clear code back to the initial code width, starting with the code immediately following the clear code.
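The width rule for the standard (non Early Change) encoder can be sketched as follows. The function `code_widths` and its parameters are hypothetical names chosen for illustration, and the sketch assumes every emitted code is followed by one new table entry and that no clear code is sent.

```python
def code_widths(num_codes: int, initial_table_size: int,
                initial_width: int, max_width: int = 12) -> list[int]:
    """Width in bits at which each of num_codes codes is emitted."""
    widths = []
    next_code = initial_table_size  # next code the encoder will assign
    width = initial_width
    for _ in range(num_codes):
        widths.append(width)        # the code for ω goes out at the current width
        # The encoder now adds an entry numbered next_code. If that number is
        # 2**width, it is the first code that needs one more bit, so every
        # later code is emitted one bit wider.
        if next_code == (1 << width) and width < max_width:
            width += 1
        next_code += 1
    return widths


# 27 initial entries and 5-bit codes, as in the worked example in this article.
print(code_widths(17, 27, 5))
```

With 27 initial entries and 5-bit codes, the first six codes go out at 5 bits and the rest at 6 bits, because the sixth emission creates entry 32.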

Packing order

Since the codes emitted typically do not fall on byte boundaries, the encoder and decoder must agree on how codes are packed into bytes. The two common methods are LSB-First ("Least Significant Bit First") and MSB-First ("Most Significant Bit First"). In LSB-First packing, the first code is aligned so that the least significant bit of the code falls in the least significant bit of the first stream byte, and if the code has more than 8 bits, the high order bits left over are aligned with the least significant bits of the next byte; further codes are packed with LSB going into the least significant bit not yet used in the current stream byte, proceeding into further bytes as necessary. MSB-first packing aligns the first code so that its most significant bit falls in the MSB of the first stream byte, with overflow aligned with the MSB of the next byte; further codes are written with MSB going into the most significant bit not yet used in the current stream byte.

GIF files use LSB-First packing order. TIFF files and PDF files use MSB-First packing order.
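The two packing orders can be compared with a small sketch. The function names `pack_lsb_first` and `pack_msb_first` are hypothetical, input is assumed to be a list of (code, width) pairs, and unused bits in the final byte are padded with zeros (an assumption; a given file format may specify otherwise).

```python
def pack_lsb_first(codes_and_widths):
    """Pack (code, width) pairs so each code starts at the lowest unused bit."""
    buffer, nbits = 0, 0          # pending bits; bit 0 is the oldest
    out = bytearray()
    for code, width in codes_and_widths:
        buffer |= code << nbits   # place the code above the pending bits
        nbits += width
        while nbits >= 8:
            out.append(buffer & 0xFF)
            buffer >>= 8
            nbits -= 8
    if nbits:
        out.append(buffer & 0xFF)  # zero-padded high bits
    return bytes(out)


def pack_msb_first(codes_and_widths):
    """Pack (code, width) pairs so each code starts at the highest unused bit."""
    buffer, nbits = 0, 0          # pending bits; the top bit is the oldest
    out = bytearray()
    for code, width in codes_and_widths:
        buffer = (buffer << width) | code
        nbits += width
        while nbits >= 8:
            out.append((buffer >> (nbits - 8)) & 0xFF)
            nbits -= 8
            buffer &= (1 << nbits) - 1
    if nbits:
        out.append((buffer << (8 - nbits)) & 0xFF)  # zero-padded low bits
    return bytes(out)


pairs = [(0b10100, 5), (0b01111, 5)]
print(pack_lsb_first(pairs).hex())  # f401
print(pack_msb_first(pairs).hex())  # a3c0
```

The same two 5-bit codes produce different byte streams under the two conventions, which is why a decoder must know which one the format uses.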

Example

The following example illustrates the LZW algorithm in action, showing the status of the output and the dictionary at every stage, both in encoding and decoding the data. This example has been constructed to give reasonable compression on a very short message. In real text data, repetition is generally less pronounced, so longer input streams are typically necessary before the compression builds up efficiency.

The plaintext to be encoded (from an alphabet using only the capital letters) is:

TOBEORNOTTOBEORTOBEORNOT#

The # is a marker used to show that the end of the message has been reached. There are thus 26 symbols in the plaintext alphabet (the 26 capital letters A through Z), plus the stop code #. We arbitrarily assign these the values 1 through 26 for the letters, and 0 for '#'. (Most flavors of LZW would put the stop code after the data alphabet, but nothing in the basic algorithm requires that. The encoder and decoder only have to agree what value it has.)

A computer will render these as strings of bits. Five-bit codes are needed to give sufficient combinations to encompass this set of 27 values. The dictionary is initialized with these 27 values. As the dictionary grows, the codes will need to grow in width to accommodate the additional entries. A 5-bit code gives 2^5 = 32 possible combinations of bits, so when the 33rd dictionary word is created, the algorithm will have to switch at that point from 5-bit strings to 6-bit strings (for all code values, including those which were previously output with only five bits). Note that since the all-zero code 00000 is used, and is labeled "0", the 33rd dictionary entry will be labeled 32. (Previously generated output is not affected by the code-width change, but once a 6-bit value is generated in the dictionary, it could conceivably be the next code emitted, so the width for subsequent output shifts to 6 bits to accommodate that.)

The initial dictionary, then, will consist of the following entries:

| Symbol | Binary | Decimal |
|---|---|---|
| # | 00000 | 0 |
| A | 00001 | 1 |
| B | 00010 | 2 |
| C | 00011 | 3 |
| D | 00100 | 4 |
| E | 00101 | 5 |
| F | 00110 | 6 |
| G | 00111 | 7 |
| H | 01000 | 8 |
| I | 01001 | 9 |
| J | 01010 | 10 |
| K | 01011 | 11 |
| L | 01100 | 12 |
| M | 01101 | 13 |
| N | 01110 | 14 |
| O | 01111 | 15 |
| P | 10000 | 16 |
| Q | 10001 | 17 |
| R | 10010 | 18 |
| S | 10011 | 19 |
| T | 10100 | 20 |
| U | 10101 | 21 |
| V | 10110 | 22 |
| W | 10111 | 23 |
| X | 11000 | 24 |
| Y | 11001 | 25 |
| Z | 11010 | 26 |

Encoding

Buffer input characters in a sequence ω until ω + next character is not in the dictionary. Emit the code for ω, and add ω + next character to the dictionary. Start buffering again with the next character.

| Current Sequence | Next Char | Output Code | Output Bits | Extended Dictionary | Comments |
|---|---|---|---|---|---|
| NULL | T | | | | |
| T | O | 20 | 10100 | 27: TO | 27 = first available code after 0 through 26 |
| O | B | 15 | 01111 | 28: OB | |
| B | E | 2 | 00010 | 29: BE | |
| E | O | 5 | 00101 | 30: EO | |
| O | R | 15 | 01111 | 31: OR | |
| R | N | 18 | 10010 | 32: RN | 32 requires 6 bits, so for next output use 6 bits |
| N | O | 14 | 001110 | 33: NO | |
| O | T | 15 | 001111 | 34: OT | |
| T | T | 20 | 010100 | 35: TT | |
| TO | B | 27 | 011011 | 36: TOB | |
| BE | O | 29 | 011101 | 37: BEO | |
| OR | T | 31 | 011111 | 38: ORT | |
| TOB | E | 36 | 100100 | 39: TOBE | |
| EO | R | 30 | 011110 | 40: EOR | |
| RN | O | 32 | 100000 | 41: RNO | |
| OT | # | 34 | 100010 | | # stops the algorithm; send the current sequence |
| | | 0 | 000000 | | and the stop code |

Unencoded length = 25 symbols × 5 bits/symbol = 125 bits

Encoded length = (6 codes × 5 bits/code) + (11 codes × 6 bits/code) = 96 bits.

Using LZW has saved 29 bits out of 125, reducing the message by about 23%. If the message were longer, then the dictionary words would begin to represent longer and longer sections of text, allowing repeated words to be sent very compactly.
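The encoding walk-through and the bit counts above can be reproduced with a short sketch. The function `encode_example` and the constant `ALPHABET` are hypothetical names; the code hard-wires this example's conventions (`#` as stop code 0, letters A through Z as 1 through 26, initial width of 5 bits) and assumes a non-empty message terminated by `#`.

```python
import string

ALPHABET = "#" + string.ascii_uppercase  # '#' -> 0, 'A'..'Z' -> 1..26


def encode_example(message: str) -> list[tuple[int, int]]:
    """Return (code, width) pairs for a non-empty message ending in '#'."""
    dictionary = {symbol: code for code, symbol in enumerate(ALPHABET)}
    next_code = len(ALPHABET)  # 27, the first free code
    width = 5
    output = []
    w = ""
    for ch in message:
        if ch == "#":
            break                               # end-of-message marker
        if w + ch in dictionary:
            w += ch
            continue
        output.append((dictionary[w], width))   # emit at the current width
        dictionary[w + ch] = next_code
        if next_code == (1 << width):
            width += 1                          # new entry needs one more bit
        next_code += 1
        w = ch
    output.append((dictionary[w], width))       # final buffered sequence
    output.append((dictionary["#"], width))     # stop code
    return output


pairs = encode_example("TOBEORNOTTOBEORTOBEORNOT#")
print([code for code, _ in pairs])
encoded_bits = sum(width for _, width in pairs)
print(encoded_bits, 125 - encoded_bits)  # 96 29
```

The 29 bits saved out of 125 correspond to 29 / 125 = 0.232, or roughly 23%.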

Decoding

To decode an LZW-compressed archive, one needs to know in advance the initial dictionary used, but additional entries can be reconstructed as they are always simply concatenations of previous entries.

| Input Bits | Input Code | Output Sequence | New Dictionary Entry (Full) | New Dictionary Entry (Conjecture) | Comments |
|---|---|---|---|---|---|
| 10100 | 20 | T | | 27: T? | |
| 01111 | 15 | O | 27: TO | 28: O? | |
| 00010 | 2 | B | 28: OB | 29: B? | |
| 00101 | 5 | E | 29: BE | 30: E? | |
| 01111 | 15 | O | 30: EO | 31: O? | |
| 10010 | 18 | R | 31: OR | 32: R? | created code 31 (last to fit in 5 bits), so start using 6 bits |
| 001110 | 14 | N | 32: RN | 33: N? | |
| 001111 | 15 | O | 33: NO | 34: O? | |
| 010100 | 20 | T | 34: OT | 35: T? | |
| 011011 | 27 | TO | 35: TT | 36: TO? | |
| 011101 | 29 | BE | 36: TOB | 37: BE? | 36 = TO + 1st symbol (B) of next coded sequence received (BE) |
| 011111 | 31 | OR | 37: BEO | 38: OR? | |
| 100100 | 36 | TOB | 38: ORT | 39: TOB? | |
| 011110 | 30 | EO | 39: TOBE | 40: EO? | |
| 100000 | 32 | RN | 40: EOR | 41: RN? | |
| 100010 | 34 | OT | 41: RNO | 42: OT? | |
| 000000 | 0 | # | | | |

At each stage, the decoder receives a code X; it looks X up in the table and outputs the sequence χ it codes, and it conjectures χ + ? as the entry the encoder just added – because the encoder emitted X for χ precisely because χ + ? was not in the table, and the encoder goes ahead and adds it. But what is the missing letter? It is the first letter in the sequence coded by the next code Z that the decoder receives. So the decoder looks up Z, decodes it into the sequence ω and takes the first letter z and tacks it onto the end of χ as the next dictionary entry.

This works as long as the codes received are in the decoder's dictionary, so that they can be decoded into sequences. What happens if the decoder receives a code Z that is not yet in its dictionary? Since the decoder is always just one code behind the encoder, Z can be in the encoder's dictionary only if the encoder just generated it, when emitting the previous code X for χ. Thus Z codes some ω that is χ + ?, and the decoder can determine the unknown character as follows:

- The decoder sees X and then Z.

- It knows X codes the sequence χ and Z codes some unknown sequence ω.

- It knows the encoder just added Z to code χ + some unknown character,

- and it knows that the unknown character is the first letter z of ω.

- But the first letter of ω (= χ + ?) must then also be the first letter of χ.

- So ω must be χ + x, where x is the first letter of χ.

- So the decoder figures out what Z codes even though it's not in the table,

- and upon receiving Z, the decoder decodes it as χ + x, and adds χ + x to the table as the value of Z.

This situation occurs whenever the encoder encounters input of the form cScSc, where c is a single character, S is a string and cS is already in the dictionary, but cSc is not. The encoder emits the code for cS, putting a new code for cSc into the dictionary. Next it sees cSc in the input (starting at the second c of cScSc) and emits the new code it just inserted. The argument above shows that whenever the decoder receives a code not in its dictionary, the situation must look like this.

Although input of form cScSc might seem unlikely, this pattern is fairly common when the input stream is characterized by significant repetition. In particular, long strings of a single character (which are common in the kinds of images LZW is often used to encode) repeatedly generate patterns of this sort.
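The cScSc case is easy to trigger with a run of one repeated character. The sketch below is illustrative only: `encode` and `decode` are hypothetical names, the alphabet is assumed to be the 256 single-character strings, codes are plain integers, and `decode` additionally counts how often it receives a code that is not yet in its table.

```python
def encode(data: str) -> list[int]:
    """Plain LZW encoder over single characters with codes 0..255 preassigned."""
    table = {chr(i): i for i in range(256)}
    codes, w = [], ""
    for ch in data:
        if w + ch in table:
            w += ch
        else:
            codes.append(table[w])
            table[w + ch] = len(table)   # next free code
            w = ch
    if w:
        codes.append(table[w])
    return codes


def decode(codes: list[int]) -> tuple[str, int]:
    """Return (text, number of codes that were not yet in the table)."""
    table = {i: chr(i) for i in range(256)}
    previous = table[codes[0]]
    out, unknown = [previous], 0
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == len(table):
            # Z is not in the table yet: it must be χ + first letter of χ.
            entry = previous + previous[0]
            unknown += 1
        else:
            raise ValueError(f"invalid code {code}")
        out.append(entry)
        table[len(table)] = previous + entry[0]
        previous = entry
    return "".join(out), unknown


codes = encode("AAAAAA")
print(codes)          # [65, 256, 257]
print(decode(codes))  # ('AAAAAA', 2)
```

Here both 256 ("AA") and 257 ("AAA") arrive before the decoder has created them, and each is reconstructed as the previous string plus its own first character.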

Further coding

The simple scheme described above focuses on the LZW algorithm itself. Many applications apply further encoding to the sequence of output symbols. Some package the coded stream as printable characters using some form of binary-to-text encoding; this increases the encoded length and decreases the compression ratio. Conversely, increased compression can often be achieved with an adaptive entropy encoder. Such a coder estimates the probability distribution for the value of the next symbol, based on the observed frequencies of values so far. A standard entropy encoding such as Huffman coding or arithmetic coding then uses shorter codes for values with higher probabilities.

Uses

LZW compression became the first widely used universal data compression method on computers. A large English text file can typically be compressed via LZW to about half its original size.

LZW was used in the public-domain program compress, which became a more or less standard utility in Unix systems circa 1986. It has since disappeared from many distributions, both because it infringed the LZW patent and because gzip produced better compression ratios using the LZ77-based DEFLATE algorithm, but as of 2008 at least FreeBSD includes both compress and uncompress as a part of the distribution. Several other popular compression utilities also used LZW or closely related methods.

LZW became very widely used when it became part of the GIF image format in 1987. It may also (optionally) be used in TIFF and PDF files. (Although LZW is available in Adobe Acrobat software, Acrobat by default uses DEFLATE for most text and color-table-based image data in PDF files.)

Patents

Various patents have been issued in the United States and other countries for LZW and similar algorithms. LZ78 was covered by a US patent by Lempel, Ziv, Cohn, and Eastman, assigned to Sperry Corporation, later Unisys Corporation, filed on August 10, 1981. Two US patents were issued for the LZW algorithm: one by Victor S. Miller and Mark N. Wegman, assigned to IBM and originally filed on June 1, 1983, and one by Welch, assigned to Sperry Corporation, later Unisys Corporation, filed on June 20, 1983. The Welch patent expired on June 20, 2003 (http://www.unisys.com/about__unisys/lzw). All patents on the LZW algorithm worldwide have also expired (see Graphics Interchange Format#Unisys and LZW patent enforcement).

US Patent 4,558,302 received a lot of negative press after LZW compression was used in the GIF image format.

Variants

- LZMW (1985, by V. Miller, M. Wegman) – Searches input for the longest string already in the dictionary (the "current" match); adds the concatenation of the previous match with the current match to the dictionary. (Dictionary entries thus grow more rapidly; but this scheme is much more complicated to implement.) Miller and Wegman also suggest deleting low frequency entries from the dictionary when the dictionary fills up.

- LZAP (1988, by James Storer) – modification of LZMW: instead of adding just the concatenation of the previous match with the current match to the dictionary, add the concatenations of the previous match with each initial substring of the current match. ("AP" stands for "all prefixes".) For example, if the previous match is "wiki" and current match is "pedia", then the LZAP encoder adds 5 new sequences to the dictionary: "wikip", "wikipe", "wikiped", "wikipedi", and "wikipedia", where the LZMW encoder adds only the one sequence "wikipedia". This eliminates some of the complexity of LZMW, at the price of adding more dictionary entries.

- LZWL is a syllable-based variant of LZW.
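The difference between the dictionary updates of LZMW and LZAP described above can be sketched in a few lines of Python. This shows only the update step, not a full encoder, and the function names `lzmw_additions` and `lzap_additions` are hypothetical, chosen for illustration.

```python
def lzmw_additions(previous_match, current_match):
    """LZMW adds one entry: the previous match joined with the current match."""
    return [previous_match + current_match]


def lzap_additions(previous_match, current_match):
    """LZAP adds the previous match joined with every non-empty prefix
    ("all prefixes") of the current match."""
    return [
        previous_match + current_match[:length]
        for length in range(1, len(current_match) + 1)
    ]


if __name__ == "__main__":
    print(lzmw_additions("wiki", "pedia"))
    print(lzap_additions("wiki", "pedia"))
```

For the previous match "wiki" and the current match "pedia", the first call yields the single entry "wikipedia", while the second yields the five entries "wikip", "wikipe", "wikiped", "wikipedi", and "wikipedia".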

See also

- LZ77 and LZ78

- LZMA

- Lempel-Ziv-Storer-Szymanski

- LZJB

- Context tree weighting

External links

- Welch, T.A., A Technique for High-Performance Data Compression, Computer, vol. 17, no. 6, pp. 8–19, June 1984.

- Terry A. Welch, High speed data compression and decompression apparatus and method

- SharpLZW, a C# open source implementation