Core dump

Encyclopedia

Computing

Computing is usually defined as the activity of using and improving computer hardware and software. It is the computer-specific part of information technology...

, a core dump (more properly a memory dump or storage dump) consists of the recorded state of the working memory

Computer storage

Computer data storage, often called storage or memory, refers to computer components and recording media that retain digital data. Data storage is one of the core functions and fundamental components of computers....

of a computer program

Computer program

A computer program is a sequence of instructions written to perform a specified task with a computer. A computer requires programs to function, typically executing the program's instructions in a central processor. The program has an executable form that the computer can use directly to execute...

at a specific time, generally when the program has terminated abnormally (crash

Crash (computing)

A crash in computing is a condition where a computer or a program, either an application or part of the operating system, ceases to function properly, often exiting after encountering errors. Often the offending program may appear to freeze or hang until a crash reporting service documents...

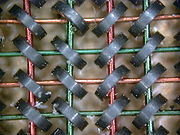

ed). The name comes from the days when magnetic core memory

Magnetic core memory

Magnetic-core memory was the predominant form of random-access computer memory for 20 years . It uses tiny magnetic toroids , the cores, through which wires are threaded to write and read information. Each core represents one bit of information...

was used, before the introduction of semiconductor memory

Semiconductor memory

Semiconductor memory is an electronic data storage device, often used as computer memory, implemented on a semiconductor-based integrated circuit. Examples of semiconductor memory include non-volatile memory such as Read-only memory , magnetoresistive random access memory , and flash memory...

; the name has remained established in systems inspired by UNIX

Unix

Unix is a multitasking, multi-user computer operating system originally developed in 1969 by a group of AT&T employees at Bell Labs, including Ken Thompson, Dennis Ritchie, Brian Kernighan, Douglas McIlroy, and Joe Ossanna...

long after the technology had faded from use. In practice, other key pieces of program state

Context switch

A context switch is the computing process of storing and restoring the state of a CPU so that execution can be resumed from the same point at a later time. This enables multiple processes to share a single CPU. The context switch is an essential feature of a multitasking operating system...

are usually dumped at the same time, including the processor register

Processor register

In computer architecture, a processor register is a small amount of storage available as part of a CPU or other digital processor. Such registers are addressed by mechanisms other than main memory and can be accessed more quickly...

s, which may include the program counter and stack pointer, memory management information, and other processor and operating system flags and information. Core dumps are often used to assist in diagnosing and debugging

Debugging

Debugging is a methodical process of finding and reducing the number of bugs, or defects, in a computer program or a piece of electronic hardware, thus making it behave as expected. Debugging tends to be harder when various subsystems are tightly coupled, as changes in one may cause bugs to emerge...

errors in computer programs.

On many operating systems, a fatal error in a program automatically triggers a core dump; by extension the phrase "to dump core" has come to mean, in many cases, any fatal error, regardless of whether a record of the program memory results.
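Whether a dump file is actually written is usually configurable. On Unix-like systems, one common control is a per-process limit on core file size. The following is a minimal sketch, assuming a Unix-like system where Python's `resource` module is available; the helper name `allow_core_dumps` is hypothetical, and other system settings may still prevent a dump from being written.

```python
import resource


def allow_core_dumps():
    """Raise the soft core-file size limit to the hard limit for this process."""
    _soft, hard = resource.getrlimit(resource.RLIMIT_CORE)
    # A process may always raise its soft limit up to (but not beyond) the hard limit.
    resource.setrlimit(resource.RLIMIT_CORE, (hard, hard))
    return resource.getrlimit(resource.RLIMIT_CORE)


print(allow_core_dumps())
```

If the hard limit is zero, the soft limit stays at zero and no core file will be produced for the process.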

The terms "core dump", "memory dump", and just "dump" have also become jargon for any storing of a large amount of raw data for further examination.

Background

Before the advent of disk operating systems and the ability to record large data files, core dumps were paper printouts of the contents of memory, typically arranged in columns of octal or hexadecimal numbers (a "hex dump"), sometimes accompanied by their interpretations as machine language instructions, text strings, or decimal or floating-point numbers (cf. disassembler). In more recent operating systems, a "core dump" is a file containing the memory image of a particular process, or the memory images of parts of the address space of that process, along with other information such as the values of processor registers. These files can be printed or viewed as text, or analysed with specialised tools such as objdump.
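The columnar layout described above can be illustrated with a short sketch. The function name `hex_dump`, its parameters, and the sample bytes are assumptions made for this example; the layout (address, hexadecimal bytes, printable text) is one common convention rather than a fixed standard.

```python
def hex_dump(data: bytes, base_address: int = 0, width: int = 16) -> str:
    """Format raw bytes as rows of address, hexadecimal values, and printable text."""
    hex_width = width * 3 - 1  # two digits per byte plus separating spaces
    rows = []
    for offset in range(0, len(data), width):
        chunk = data[offset:offset + width]
        hex_part = " ".join(f"{byte:02x}" for byte in chunk)
        # Show printable ASCII as-is and every other byte as a dot.
        text_part = "".join(chr(b) if 32 <= b < 127 else "." for b in chunk)
        rows.append(f"{base_address + offset:08x}  {hex_part:<{hex_width}}  {text_part}")
    return "\n".join(rows)


print(hex_dump(b"core dump\x00\x01\x02 example bytes", base_address=0x1000))
```

Each row covers `width` bytes, so the address column advances by that amount from one row to the next.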

Uses of core dumps

Core dumps can serve as useful debugging aids in several situations. On early standalone or batch-processing systems, core dumps allowed a user to debug a program without monopolizing the (very expensive) computing facility for debugging; a printout could also be more convenient than debugging using switches and lights. On shared computers, whether time-sharing, batch processing, or server systems, core dumps allow off-line debugging of the operating system, so that the system can go back into operation immediately. Core dumps allow a user to save a crash for later or off-site analysis, or comparison with other crashes. For embedded computers, it may be impractical to support debugging on the computer itself, so analysis of a dump may take place on a different computer. Some operating systems such as early versions of Unix did not support attaching debuggers to running processes, so core dumps were necessary to run a debugger on a process's memory contents. Core dumps can be used to capture data freed during dynamic memory allocation and may thus be used to retrieve information from a program that is no longer running. In the absence of an interactive debugger, the core dump may be used by an assiduous programmer to determine the error from direct examination.

A core dump represents the complete contents of the dumped regions of the address space of the dumped process. Depending on the operating system, the dump may contain few or no data structures to aid interpretation of the memory regions. In these systems, successful interpretation requires that the program or user trying to interpret the dump understands the structure of the program's memory use.

A debugger can use a symbol table, if one exists, to help the programmer interpret dumps, identifying variables symbolically and displaying source code; if the symbol table is not available, less interpretation of the dump is possible, but enough may still be recoverable to determine the cause of the problem. There are also special-purpose tools called dump analyzers to analyze dumps. One popular tool, available on many operating systems, is the GNU binutils' objdump.

On modern Unix-like operating systems, administrators and programmers can read core dump files using the GNU Binutils Binary File Descriptor library (BFD), and the GNU Debugger (gdb) and objdump that use this library. This library will supply the raw data for a given address in a memory region from a core dump; it does not know anything about variables or data structures in that memory region, so the application using the library to read the core dump will have to determine the addresses of variables and determine the layout of data structures itself, for example by using the symbol table for the program undergoing debugging.
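This division of labour can be sketched as follows. The example does not use BFD or gdb; the region list, the symbol table, and the function names `read_raw` and `read_variable` are all hypothetical, chosen only to show a lower layer that returns raw bytes for an address and an upper layer that uses symbol information to interpret them.

```python
import struct
from bisect import bisect_right

# Hypothetical dump contents: (start address, raw bytes) per dumped region,
# sorted by start address. Addresses between regions were not dumped.
REGIONS = [
    (0x1000, struct.pack("<iI", -7, 42)),
    (0x8000, b"hello\x00"),
]

# Hypothetical symbol table: variable name -> (address, struct format).
SYMBOLS = {
    "error_code": (0x1000, "<i"),
    "retry_count": (0x1004, "<I"),
}


def read_raw(address: int, size: int) -> bytes:
    """Return `size` raw bytes at `address`, with no knowledge of their meaning."""
    starts = [start for start, _ in REGIONS]
    index = bisect_right(starts, address) - 1
    if index >= 0:
        start, data = REGIONS[index]
        offset = address - start
        if offset + size <= len(data):
            return data[offset:offset + size]
    raise ValueError(f"address {address:#x} is not in a dumped region")


def read_variable(name: str):
    """Interpret raw bytes using the symbol table, as a debugger would."""
    address, fmt = SYMBOLS[name]
    (value,) = struct.unpack(fmt, read_raw(address, struct.calcsize(fmt)))
    return value


print(read_variable("error_code"), read_variable("retry_count"))
```

Without the `SYMBOLS` table, only `read_raw` remains usable, which mirrors the situation in which a dump is examined without a symbol table.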

Analysts of crash dumps from Linux systems can use kdump or the Linux Kernel Crash Dump (LKCD).

Core dumps can save the context (state) of a process at a given point in time for returning to it later. Systems can be made highly available by transferring core between processors, sometimes via core dump files themselves.

Core can also be dumped onto a remote host over a network.

Format

In older and simpler operating systems, each process had a contiguous address-space, so a core dump file was simply a binary file with the sequence of bytes or words. In modern operating systems, a process address space may have gaps, and share pages with other processes or files, so more elaborate representations are used; they may also include other information about the state of the program at the time of the dump.

In Unix-like systems, core dumps generally use the standard executable image-format:

- a.out in older versions of Unix,
- ELF in modern Linux, System V, Solaris, and BSD systems,
- Mach-O in Mac OS X, etc.
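Because an ELF core dump shares the executable format, it can be told apart from an ordinary executable by the file-type field in the ELF header. The following is a minimal sketch, assuming only the first 18 bytes of the file are available; the function name `is_elf_core` and the hand-built sample header are illustrative and not taken from any particular tool.

```python
import struct

ELF_MAGIC = b"\x7fELF"
ET_CORE = 4  # e_type value that marks an ELF core file


def is_elf_core(header: bytes) -> bool:
    """Return True if `header` starts an ELF file whose type is ET_CORE."""
    if len(header) < 18 or header[:4] != ELF_MAGIC:
        return False
    # e_ident[5] (EI_DATA) gives the byte order: 1 = little-endian, 2 = big-endian.
    byte_order = {1: "<", 2: ">"}.get(header[5])
    if byte_order is None:
        return False
    # e_type is a 16-bit field directly after the 16-byte e_ident array.
    (e_type,) = struct.unpack_from(byte_order + "H", header, 16)
    return e_type == ET_CORE


# A hand-built 64-bit little-endian header fragment, for illustration only.
sample = ELF_MAGIC + bytes([2, 1, 1]) + bytes(9) + struct.pack("<H", ET_CORE)
print(is_elf_core(sample))
```

In practice the header would be read from the start of the dump file, for example with `open(path, "rb").read(18)`.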

Naming

- Dumps of user-processes traditionally get created as "core".
- System-wide dumps on modern Unix-like systems often appear as "vmcore" or as "vmcore.incomplete".
- Systems such as Microsoft Windows which use filename extensions may use the extension ".dmp", e.g., "memory.dmp" or "\Minidump\Mini051509-01.dmp".

External links

Descriptions for the file format:

- Minidump Files

- Enabling core dumps in embedded systems - An article from the Real-Time embedded blog.