Deep content inspection

Deep Content Inspection (DCI) is a form of network filtering that examines an entire file or MIME object as it passes an inspection point, searching for viruses, spam, data loss, keywords or other content-level criteria. DCI is considered the evolution of Deep Packet Inspection (DPI), with the ability to look at what the actual content contains instead of focusing on individual or multiple packets. It allows services to keep track of content across multiple packets, so that the signatures they are searching for are still found when they cross packet boundaries. It is an exhaustive form of network traffic inspection in which Internet traffic is examined across all seven layers of the OSI model and, most importantly, at the application layer.

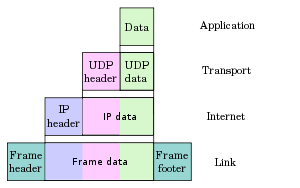

*The IP header provides address information (the sender and destination addresses), while the TCP/UDP header provides other pertinent information such as the port number.*

Background

Traditional inspection technologies are unable to keep up with the recent outbreaks of widespread attacks. Unlike shallow inspection methods such as Deep Packet Inspection (DPI), where only the data part (and possibly also the header) of a packet is inspected, DCI-based systems are exhaustive: network traffic packets are reassembled into their constituent objects, decoded and/or decompressed as required, and finally presented for inspection for malware, right-of-use, compliance, and understanding of the traffic’s intent. If this reconstruction and comprehension can be done in real time, then real-time policies can be applied to traffic, preventing the propagation of malware and spam and the loss of valuable data. Further, with DCI, the correlation and comprehension of the digital objects transmitted in many communication sessions leads to new ways of network performance optimization and intelligence, regardless of protocol or blended communication sessions.
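The reassemble, decode, decompress and inspect sequence described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the signature table, the `(sequence_number, data)` segment format, and the function names are all hypothetical, and the encodings are assumed to be declared alongside the object (as MIME and HTTP headers typically do) rather than detected.

```python
import base64
import gzip

# Hypothetical signature database, for illustration only.
SIGNATURES = {b"EVIL-PAYLOAD": "demo-malware"}


def reassemble(segments):
    """Join (sequence_number, data) segments into one byte stream, in order."""
    return b"".join(data for _, data in sorted(segments))


def decode_object(raw, encodings):
    """Undo the declared encodings, outermost first."""
    for encoding in encodings:
        if encoding == "base64":
            raw = base64.b64decode(raw)
        elif encoding == "gzip":
            raw = gzip.decompress(raw)
        else:
            raise ValueError(f"unsupported encoding: {encoding}")
    return raw


def scan(content):
    """Return the names of all signatures found in the content."""
    return [name for sig, name in SIGNATURES.items() if sig in content]


def inspect(segments, encodings):
    """Full pipeline: reassemble, decode/decompress, then scan the object."""
    return scan(decode_object(reassemble(segments), encodings))


# Usage: a gzip-compressed, base64-encoded object split across
# out-of-order segments.
wire = base64.b64encode(gzip.compress(b"header EVIL-PAYLOAD trailer"))
segments = [(i, wire[i:i + 16]) for i in range(0, len(wire), 16)][::-1]

per_packet_hits = [hit for _, data in segments for hit in scan(data)]
print(per_packet_hits)                         # matching each packet alone
print(inspect(segments, ["base64", "gzip"]))   # matching the rebuilt object
```

Scanning each segment on its own finds nothing, because the signature only exists once the object has been rebuilt and unwrapped; the full pipeline reports it.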

Historically, DPI was developed to detect and prevent intrusion. It was then used to provide Quality of Service, where the flow of network traffic can be prioritized so that latency-sensitive traffic types (e.g., Voice over IP) are given higher flow priority.

A new generation of network content security devices, such as Unified Threat Management appliances or Next Generation Firewalls (Gartner RAS Core Research Note G00174908), uses DPI to prevent attacks from a small percentage of viruses and worms; the signatures of this malware fit within the payload of a DPI’s inspection scope. However, the detection and prevention of a new generation of malware such as Conficker and Stuxnet is only possible through the exhaustive analysis provided by DCI.

The Evolution of DPI Systems

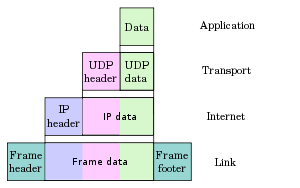

When computer networks send information from one point to another, the data (sometimes referred to as the payload) is ‘encapsulated’ within an IP packet (see the accompanying figure).

As networks evolve, inspection techniques evolve, all attempting to understand the payload. Throughout the last decade there have been vast improvements, including:

Packet Filtering

Historically, inspection technology examined only the IP header and the TCP/UDP header. Dubbed ‘Packet Filtering’, these devices would drop sequence packets, or packets that are not allowed on a network. This scheme of network traffic inspection was first used by firewalls to protect against packet attacks.

Stateful Packet Inspection

Stateful packet inspection was developed to examine header information and the packet content to increase source and destination understanding. Instead of letting the packets through as a result of their addresses and ports, packets stayed on the network if the context was appropriate to the network’s current ‘state’. This scheme was first used by Check Point firewalls and eventually by Intrusion Prevention/Detection Systems.

Deep Packet Inspection: Implementations, Limitations and Typical Work Arounds

Deep Packet Inspection is currently the predominant inspection tool used to analyze data packets passing through the network, including the headers and the data protocol structures. These technologies scan packet streams and look for offending patterns.

To be effective, Deep Packet Inspection systems must ‘string’ match packet payloads to malware signatures and specification signatures (which dictate what the request/response should look like) at wire speeds. To do so, Field-Programmable Gate Arrays (FPGAs), network processors, or even Graphics Processing Units (GPUs) are programmed to be hardwired with these signatures so that traffic passing through such circuitry is quickly matched.
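A software analogue of this ‘string’ matching helps show why signatures that cross packet boundaries matter. The sketch below is illustrative only and is not how hardware matchers are built; the signature and function names are hypothetical. It contrasts matching each payload in isolation with matching a stream while carrying over the tail of the previous payload.

```python
SIGNATURE = b"malicious-token"  # hypothetical signature, for illustration


def match_per_packet(payloads, signature=SIGNATURE):
    """Match each payload on its own; misses signatures split across packets."""
    return any(signature in payload for payload in payloads)


def match_stream(payloads, signature=SIGNATURE):
    """Match across packets by keeping the last len(signature) - 1 bytes."""
    keep = len(signature) - 1
    tail = b""
    for payload in payloads:
        window = tail + payload
        if signature in window:
            return True
        # Only a suffix shorter than the signature can start a later match.
        tail = window[-keep:] if keep else b""
    return False


# Usage: the signature is split over three consecutive payloads.
payloads = [b"GET /x?q=malic", b"ious-to", b"ken HTTP/1.1"]
print(match_per_packet(payloads))
print(match_stream(payloads))
```

Keeping only `len(signature) - 1` bytes between packets bounds the state per flow, which is the kind of constraint a fixed-size matcher has to live with.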

While using hardware allows for quick, inline ‘matches’, DPI systems have the following limitations:

Hardware limitations: since DPI systems implement their pattern matching (or searches for ‘offending’ patterns) in hardware, these systems are typically limited by:

- The number of circuits a high-end DPI chip can have; as of 2011, a high-end DPI system can, optimally, process around 512 requests/responses per session.

- The memory available for pattern matches; as of 2011, high-end DPI systems are capable of matching up to 60,000 unique signatures.

Payload limitations: Web applications communicate content using binary-to-text encoding, compression (zipped, archived, etc.), obfuscation and even encryption. As such, payload structure is becoming more complex, and straight ‘string’ matching of the signatures is no longer sufficient. The common workaround is to have signatures be similarly ‘encoded’ or zipped which, given the above ‘search limitations’, cannot scale to support every application type, or nested zipped or archived files.
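To see why pre-encoding signatures scales poorly, consider base64 alone. Base64 maps each group of three bytes to four characters, so the encoded form of a signature depends on where the signature starts within the content. The sketch below is an illustration with a hypothetical signature and hypothetical function names; it compares searching for the pre-encoded signature with decoding first and searching afterwards.

```python
import base64

SIGNATURE = b"EVIL-PAYLOAD"  # hypothetical signature, for illustration
ENCODED_SIGNATURE = base64.b64encode(SIGNATURE)


def encoded_match(wire):
    """Workaround: search the encoded bytes for the pre-encoded signature."""
    return ENCODED_SIGNATURE in wire


def decoded_match(wire):
    """Decode the content first, then search for the raw signature."""
    return SIGNATURE in base64.b64decode(wire)


# Usage: the same signature at different byte offsets in the content.
for prefix in (b"", b"x", b"xy", b"abc"):
    wire = base64.b64encode(prefix + SIGNATURE)
    print(len(prefix), encoded_match(wire), decoded_match(wire))
```

The pre-encoded signature is only found when the signature happens to start on a three-byte boundary, so several encoded variants would be needed per signature for this one encoding. Each further layer (compression, archives, nesting) multiplies the variants again, whereas decoding first needs only the original signature.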

First Generation – Secure Web Gateway or Proxy-based Anti-Virus Solutions

Proxies have been deployed to provide Internet caching services, retrieving objects and then forwarding them. Consequently, all network traffic is intercepted, and potentially stored. These graduated to what are now known as secure web gateways, whose proxy-based inspection retrieves and scans objects, scripts, and images.

Deep Content Inspection, the process through which network content is exhaustively examined, can be traced back as early as 2006, when the open-source, cross-platform antivirus software ClamAV provided support for the caching proxies Squid and NetCache. Using the Internet Content Adaptation Protocol (ICAP), a proxy passes the downloaded content for scanning to an ICAP server running antivirus software. Since complete ‘objects’ are passed for scanning, proxy-based antivirus solutions are considered the first generation of DCI.
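As a rough illustration of what a proxy hands to the ICAP server, the sketch below assembles a response-modification (`RESPMOD`) message in the layout described by RFC 3507: an ICAP header whose `Encapsulated` field gives byte offsets to the embedded HTTP request headers, HTTP response headers, and chunk-encoded body. The host name, service name, and function names are hypothetical, and the sketch assumes a non-empty body sent as a single chunk; it builds the bytes only and does not talk to a server.

```python
CRLF = b"\r\n"


def chunked(body):
    """Encode a non-empty body as one HTTP chunk plus the terminating chunk."""
    if not body:
        raise ValueError("this sketch only handles non-empty bodies")
    size = format(len(body), "x").encode("ascii")
    return size + CRLF + body + CRLF + b"0" + CRLF + CRLF


def build_respmod(host, service, req_hdr, res_hdr, body):
    """Build an ICAP RESPMOD message carrying a full HTTP response.

    req_hdr and res_hdr are raw HTTP header blocks, each ending in a
    blank line. Offsets in Encapsulated count from the start of the
    encapsulated section, which begins right after the ICAP header.
    """
    res_hdr_offset = len(req_hdr)
    res_body_offset = len(req_hdr) + len(res_hdr)
    icap_header = (
        f"RESPMOD icap://{host}/{service} ICAP/1.0\r\n"
        f"Host: {host}\r\n"
        f"Encapsulated: req-hdr=0, res-hdr={res_hdr_offset}, "
        f"res-body={res_body_offset}\r\n"
        "\r\n"
    ).encode("ascii")
    return icap_header + req_hdr + res_hdr + chunked(body)


# Usage with a hypothetical ICAP service named "avscan".
request_headers = b"GET /file.bin HTTP/1.1\r\nHost: www.example.com\r\n\r\n"
response_headers = b"HTTP/1.1 200 OK\r\nContent-Length: 5\r\n\r\n"
message = build_respmod("icap.example.net", "avscan",
                        request_headers, response_headers, b"hello")
print(message.decode("ascii"))
```

Because the whole downloaded object travels in the message body, the scanner on the ICAP side sees complete content rather than individual packets.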

This approach was first pioneered by BlueCoat, WebWasher and Secure Computing Inc. (now McAfee), eventually becoming a standard network element in most enterprise networks.

Limitations:

While proxies (or secure web gateways) provide in-depth network traffic inspection, their use is limited as they:

- require network reconfiguration, accomplished either a) on end devices, by pointing their browsers to these proxies, or b) on the network routers, by routing traffic through these devices

- are limited to web (HTTP) and FTP protocols, and cannot scan other protocols such as e-mail

- are typically built around Squid, which cannot scale with concurrent sessions, limiting their deployment to enterprises.

Second Generation – Gateway/Firewall-based Deep Content Inspection

The second generation of Deep Content Inspection solutions was implemented in firewalls and/or UTMs. Given that network traffic is choked through these devices, full deep content inspection is possible. However, given the high cost of such an operation, this feature was applied in tandem with a DPI system and was activated only on a per-need basis or when content failed to be qualified through the DPI system. This approach was pioneered by NetScreen Technologies Inc. (acquired by Juniper Networks Inc.) and Fortinet Inc.
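The tandem arrangement can be pictured as a cheap first pass that escalates to the expensive pass only when it cannot qualify the content. The sketch below is a hypothetical illustration, not a description of any vendor's product: the signature list, the verdict strings, and the rule that a payload starting with a zlib header byte is ‘unqualified’ are all assumptions made for the example.

```python
import zlib

SIGNATURES = (b"EVIL-PAYLOAD",)  # hypothetical signatures, for illustration


def contains_signature(data):
    """Plain string matching against the signature list."""
    return any(sig in data for sig in SIGNATURES)


def dpi_check(payload):
    """Cheap first pass: returns 'block', 'allow', or 'unqualified'."""
    if contains_signature(payload):
        return "block"
    # Assumption: a leading 0x78 byte (zlib header) marks content that
    # string matching alone cannot qualify.
    if payload[:1] == b"\x78":
        return "unqualified"
    return "allow"


def dci_check(payload):
    """Expensive second pass: decompress the whole object, then match."""
    try:
        content = zlib.decompress(payload)
    except zlib.error:
        content = payload  # not compressed after all; scan it as-is
    return "block" if contains_signature(content) else "allow"


def inspect(payload):
    """Run DPI first and escalate to DCI only when DPI cannot decide."""
    verdict = dpi_check(payload)
    return dci_check(payload) if verdict == "unqualified" else verdict


# Usage: the compressed payload is only caught by the second pass.
print(inspect(b"plain harmless text"))
print(inspect(zlib.compress(b"wrapped EVIL-PAYLOAD inside")))
```

The design choice mirrors the cost trade-off in the text: most traffic takes the cheap path, and the full unpack-and-scan step runs only for content the first pass cannot read.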

Third Generation – Transparent Deep Content Inspection

The third, and current, generation of Deep Content Inspection solutions is implemented as fully transparent devices that perform full application-level inspection at wire speed in order to understand the communication session’s intent in its entirety, by scanning both the handshake and the payload. Once the digital objects (executables, images, JavaScript, PDFs, etc.) in a Data-In-Motion session can be comprehended, many new insights into the session can be gained, not only with simple pattern matching and reputation search, but also with unpacking and behaviour analysis. This approach was pioneered by Wedge Networks Inc.

Content

DCI takes a content-based focus instead of a packet or application viewpoint. Understanding content and its intent is the highest level of intelligence to be gained from network traffic. This is important as information flow is moving away from the packet, towards the application, and ultimately to content.

Example Inspection Levels

- Packet: Random Sample to get larger picture

- Application: Group or application profiling. Certain applications, or areas of applications, are allowed / not allowed or scanned further.

- Content: Look at everything. Scan everything. Understand the intent.

Inspection

Inspection means opening traffic and understanding it; services can then be applied to it.

Some Service/Inspection examples:

- Anti-Virus

- Data Loss

- Unknown Threats

- Analytics

- Code Attacks/Injection

- Content Manipulation

Applications of Deep Content Inspection

DCI is currently being adopted by enterprises, service providers and governments as a reaction to increasingly complex Internet traffic, with the benefits of understanding complete file types and their intent. Typically, these organizations have mission-critical applications with rigid requirements.

Network Throughput

This type of inspection deals with real-time protocols that continue to increase in complexity and size. One of the key barriers to providing this level of inspection, that is, looking at all content, is network throughput. Solutions must overcome this issue without introducing latency into the network environment. They must also be able to scale up effectively to meet tomorrow's demands and the demands envisioned by the growing cloud computing trend. One approach is to use selective scanning; however, to avoid compromising accuracy, the selection criteria should be based on recurrence. U.S. Patent 7,630,379 provides a scheme for carrying out Deep Content Inspection effectively using a recurrence selection scheme. The novelty introduced by this patent is that it addresses issues such as content (e.g., an MP3 file) that could have been renamed before transmission.
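One simple way to picture selection by recurrence is to key scan results on a digest of the content itself, so that an object seen before is not scanned again even if its file name has changed. The sketch below is a minimal illustration of that idea only; it is not the scheme of the cited patent, and the class name, method names, and scanner are hypothetical.

```python
import hashlib


class RecurrenceCache:
    """Remember scan verdicts by content digest to skip recurring objects."""

    def __init__(self, scanner):
        self._scanner = scanner   # callable: bytes -> verdict
        self._verdicts = {}       # hex digest -> cached verdict
        self.scans = 0            # number of full scans actually performed

    def inspect(self, name, content):
        """Return the verdict for content; the file name is deliberately ignored."""
        digest = hashlib.sha256(content).hexdigest()
        if digest not in self._verdicts:
            self.scans += 1
            self._verdicts[digest] = self._scanner(content)
        return self._verdicts[digest]


# Usage: the same bytes sent under two names are scanned only once.
cache = RecurrenceCache(lambda content: b"EVIL-PAYLOAD" in content)
data = b"ID3 ... EVIL-PAYLOAD ..."
print(cache.inspect("song.mp3", data))
print(cache.inspect("notes.txt", data))
print(cache.scans)
```

Because the key is derived from the bytes rather than the name or extension, renaming a file before transmission does not change the outcome.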

Accuracy of Services

Dealing with this amount of traffic and information and then applying services requires very high-speed lookups to be effective. Content must be compared against full services platforms; otherwise, having access to all traffic is not being utilized effectively. An example is often found in dealing with viruses and malicious content, where solutions compare content only against a small virus database instead of a full and complete one.