Bulk synchronous parallel

Encyclopedia

The Bulk Synchronous Parallel (BSP) abstract computer

is a bridging model

for designing parallel algorithm

s. A bridging model "is intended neither as a hardware nor a programming model but something in between" . It serves a purpose similar to the Parallel Random Access Machine

(PRAM) model. BSP differs from PRAM by not taking communication and synchronization for granted. An important part of analysing a BSP algorithm rests on quantifying the synchronisation and communication needed.

BSP was developed by Leslie Valiant

during the 1980s. The definitive article was published in 1990.

of computation.

A BSP computation proceeds in a series of global supersteps. A superstep consists of three ordered stages:

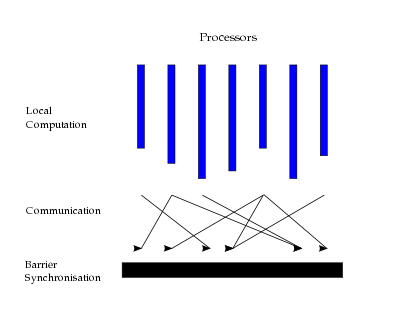

The figure below shows this in a diagrammatic form. The processes are not regarded as having a particular linear order (from left to right or otherwise), and may be mapped to processors in any way.

their interactions are typically complex. In particular, it is difficult to say much about the time any single communication action will take to complete.

The BSP model considers communication actions en masse. This has the effect that an upper bound on the time taken to communicate a set of data can be given. BSP considers all communication actions of a superstep as one unit, and assumes all messages have a fixed size.

The maximum number of incoming or outgoing messages for a superstep is denoted by . The ability of a communication network to deliver data is captured by a parameter

. The ability of a communication network to deliver data is captured by a parameter  , defined such that it takes time

, defined such that it takes time  for a processor to deliver

for a processor to deliver  messages of size 1.

messages of size 1.

A message of length obviously takes longer to send than a message of size 1. However, the BSP model does not make a distinction between a message length of

obviously takes longer to send than a message of size 1. However, the BSP model does not make a distinction between a message length of  or

or  messages of length 1. In either case the cost is said to be

messages of length 1. In either case the cost is said to be  .

.

The parameter is dependent on the following factors:

is dependent on the following factors:

A value for is, in practice, determined empirically for each parallel computer. Note that

is, in practice, determined empirically for each parallel computer. Note that  is not the normalised single-word delivery time, but the single-word delivery time under continuous traffic conditions.

is not the normalised single-word delivery time, but the single-word delivery time under continuous traffic conditions.

is often

expensive, so should be used sparingly. However, future architecture developments may make them much cheaper. The cost of barrier synchronization is influenced by a couple of issues:

The cost of a barrier synchronization is denoted by . In practice, a value of

. In practice, a value of  is determined empirically.

is determined empirically.

Barriers

are potentially costly, but have a number of attractions. They do not introduce the possibility of deadlock

or livelock

,

since barriers do not create circular data dependencies. Therefore tools to detect and deal with them are unnecessary. Barriers also permit novel forms of fault tolerance

.

for processors:

processors:

where is the cost for the local computation in process

is the cost for the local computation in process  , and

, and  is the number of messages sent or received by process

is the number of messages sent or received by process  . Note that homogeneous processors are assumed here. It is more common for the expression to be written as

. Note that homogeneous processors are assumed here. It is more common for the expression to be written as  where

where  and

and  are maxima. The cost of the algorithm then, is the sum of the costs of each superstep.

are maxima. The cost of the algorithm then, is the sum of the costs of each superstep.

where is the number of supersteps.

is the number of supersteps.

,

,  , and

, and  are usually modelled as functions, that vary with problem size. These three characteristics of a BSP algorithm are usually described in terms of asymptotic notation, e.g.

are usually modelled as functions, that vary with problem size. These three characteristics of a BSP algorithm are usually described in terms of asymptotic notation, e.g.  .

.

, MapReduce

, and Pregel.

Abstract machine

An abstract machine, also called an abstract computer, is a theoretical model of a computer hardware or software system used in automata theory...

is a bridging model

Bridging model

In computer science, a bridging model is an abstract model of a computer which provides a conceptual bridge between the physical implementation of the machine and the abstraction available to a programmer of that machine; in other words, it is intended to provide a common level of understanding...

for designing parallel algorithm

Parallel algorithm

In computer science, a parallel algorithm or concurrent algorithm, as opposed to a traditional sequential algorithm, is an algorithm which can be executed a piece at a time on many different processing devices, and then put back together again at the end to get the correct result.Some algorithms...

s. A bridging model "is intended neither as a hardware nor a programming model but something in between" . It serves a purpose similar to the Parallel Random Access Machine

Parallel Random Access Machine

In computer science, Parallel Random Access Machine is a shared memory abstract machine. As its name indicates, the PRAM was intended as the parallel computing analogy to the random access machine...

(PRAM) model. BSP differs from PRAM by not taking communication and synchronization for granted. An important part of analysing a BSP algorithm rests on quantifying the synchronisation and communication needed.

BSP was developed by Leslie Valiant

Leslie Valiant

Leslie Gabriel Valiant is a British computer scientist and computational theorist.He was educated at King's College, Cambridge, Imperial College London, and University of Warwick where he received his Ph.D. in computer science in 1974. He started teaching at Harvard University in 1982 and is...

during the 1980s. The definitive article was published in 1990.

The model

A BSP computer consists of processors connected by a communication network. Each processor has a fast local memory, and may follow different threadsThread (computer science)

In computer science, a thread of execution is the smallest unit of processing that can be scheduled by an operating system. The implementation of threads and processes differs from one operating system to another, but in most cases, a thread is contained inside a process...

of computation.

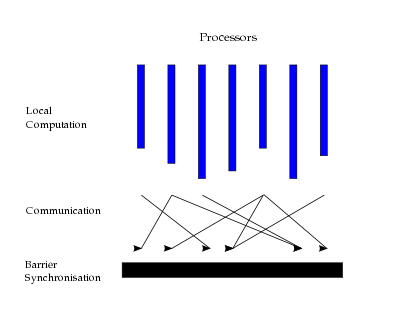

A BSP computation proceeds in a series of global supersteps. A superstep consists of three ordered stages:

- Concurrent computation: Several computations take place on every participating processor. Each process only uses values stored in the local memory of the processor. The computations are independent in the sense that they occur asynchronously of all the others.

- Communication: At this stage, the processes exchange data between themselves.

- Barrier synchronisation: When a process reaches this point (the barrier), it waits until all other processes have finished their communication actions.

The figure below shows this in a diagrammatic form. The processes are not regarded as having a particular linear order (from left to right or otherwise), and may be mapped to processors in any way.

Communication

In many parallel programming systems, communications are considered at the level of individual actions: sending and receiving a message, memory to memory transfer, etc. This is difficult to work with, since there are many simultaneous communication actions in a parallel program, andtheir interactions are typically complex. In particular, it is difficult to say much about the time any single communication action will take to complete.

The BSP model considers communication actions en masse. This has the effect that an upper bound on the time taken to communicate a set of data can be given. BSP considers all communication actions of a superstep as one unit, and assumes all messages have a fixed size.

The maximum number of incoming or outgoing messages for a superstep is denoted by

. The ability of a communication network to deliver data is captured by a parameter

. The ability of a communication network to deliver data is captured by a parameter  , defined such that it takes time

, defined such that it takes time  for a processor to deliver

for a processor to deliver  messages of size 1.

messages of size 1.A message of length

obviously takes longer to send than a message of size 1. However, the BSP model does not make a distinction between a message length of

obviously takes longer to send than a message of size 1. However, the BSP model does not make a distinction between a message length of  or

or  messages of length 1. In either case the cost is said to be

messages of length 1. In either case the cost is said to be  .

.The parameter

is dependent on the following factors:

is dependent on the following factors:

- The protocols used to interact within the communication network.

- Buffer management by both the processors and the communication network.

- The routing strategy used in the network.

- The BSP runtime system.

A value for

is, in practice, determined empirically for each parallel computer. Note that

is, in practice, determined empirically for each parallel computer. Note that  is not the normalised single-word delivery time, but the single-word delivery time under continuous traffic conditions.

is not the normalised single-word delivery time, but the single-word delivery time under continuous traffic conditions.Barriers

On most of today's architectures, barrier synchronizationBarrier (computer science)

- Threads synchronization primitive :In parallel computing, a barrier is a type of synchronization method. A barrier for a group of threads or processes in the source code means any thread/process must stop at this point and cannot proceed until all other threads/processes reach this barrier.Many...

is often

expensive, so should be used sparingly. However, future architecture developments may make them much cheaper. The cost of barrier synchronization is influenced by a couple of issues:

- The cost imposed by the variation in the completion time of the participating concurrent computations. Take the example where all but one of the processes have completed their work for this superstep, and are waiting for the last process, which still has a lot of work to complete. The best that an implementation can do is ensure that each process works on roughly the same problem size.

- The cost of reaching a globally consistent state in all of the processors. This depends on the communication network, but also on whether there is special-purpose hardware available for synchronizing, and on the way in which interrupts are handled by processors.

The cost of a barrier synchronization is denoted by

. In practice, a value of

. In practice, a value of  is determined empirically.

is determined empirically. Barriers

Barrier (computer science)

- Threads synchronization primitive :In parallel computing, a barrier is a type of synchronization method. A barrier for a group of threads or processes in the source code means any thread/process must stop at this point and cannot proceed until all other threads/processes reach this barrier.Many...

are potentially costly, but have a number of attractions. They do not introduce the possibility of deadlock

Deadlock

A deadlock is a situation where in two or more competing actions are each waiting for the other to finish, and thus neither ever does. It is often seen in a paradox like the "chicken or the egg"...

or livelock

Deadlock

A deadlock is a situation where in two or more competing actions are each waiting for the other to finish, and thus neither ever does. It is often seen in a paradox like the "chicken or the egg"...

,

since barriers do not create circular data dependencies. Therefore tools to detect and deal with them are unnecessary. Barriers also permit novel forms of fault tolerance

Fault-tolerant system

Fault-tolerance or graceful degradation is the property that enables a system to continue operating properly in the event of the failure of some of its components. A newer approach is progressive enhancement...

.

The Cost of a BSP algorithm

The cost of a superstep is determined as the sum of three terms; the cost of the longest running local computation, the cost of global communication between the processors, and the cost of the barrier synchronisation at the end of the superstep. The cost of one superstepfor

processors:

processors:

where

is the cost for the local computation in process

is the cost for the local computation in process  , and

, and  is the number of messages sent or received by process

is the number of messages sent or received by process  . Note that homogeneous processors are assumed here. It is more common for the expression to be written as

. Note that homogeneous processors are assumed here. It is more common for the expression to be written as  where

where  and

and  are maxima. The cost of the algorithm then, is the sum of the costs of each superstep.

are maxima. The cost of the algorithm then, is the sum of the costs of each superstep.

where

is the number of supersteps.

is the number of supersteps. ,

,  , and

, and  are usually modelled as functions, that vary with problem size. These three characteristics of a BSP algorithm are usually described in terms of asymptotic notation, e.g.

are usually modelled as functions, that vary with problem size. These three characteristics of a BSP algorithm are usually described in terms of asymptotic notation, e.g.  .

.Extensions and uses

BSP has been extended by many authors to address concerns about BSP's unsuitability for modelling specific architectures or computational paradigms. One example of this is the decomposable BSP model. The model has also been used in the creation of a number of new programming languages --- including BSML (Bulk Synchronous Parallel ML) --- and programming models --- including BSPLib, Apache HamaApache Hama

Apache Hama is a distributed computing framework based on Bulk Synchronous Parallel computing techniques for massive scientific computations e.g., matrix, graph and network algorithms, currently being incubated as one of the incubator projects by the Apache Software Foundation. It was created by...

, MapReduce

MapReduce

MapReduce is a software framework introduced by Google in 2004 to support distributed computing on large data sets on clusters of computers. Parts of the framework are patented in some countries....

, and Pregel.

See also

- Computer cluster

- Concurrent computingConcurrent computingConcurrent computing is a form of computing in which programs are designed as collections of interacting computational processes that may be executed in parallel...

- ConcurrencyConcurrency (computer science)In computer science, concurrency is a property of systems in which several computations are executing simultaneously, and potentially interacting with each other...

- Dataflow programming

- Grid computingGrid computingGrid computing is a term referring to the combination of computer resources from multiple administrative domains to reach a common goal. The grid can be thought of as a distributed system with non-interactive workloads that involve a large number of files...

- Parallel computingParallel computingParallel computing is a form of computation in which many calculations are carried out simultaneously, operating on the principle that large problems can often be divided into smaller ones, which are then solved concurrently . There are several different forms of parallel computing: bit-level,...

- ScientificPython

- LogP machineLogP machineThe LogP machine is a model for parallel computation.It aims at being more practical than the PRAM model while still allowing for easy analysis of computation....

External links

- D.B. Skillicorn, Jonathan Hill, W. F. McColl, [ftp://ftp.comlab.ox.ac.uk/pub/Documents/techpapers/Jonathan.Hill/SkillHillMcColl_QA.ps.gz Questions and answers about BSP] (1996)

- BSP Worldwide

- BSP related papers

- WWW Resources on BSP Computing Bulk Synchronous Parallel ML ( official website)