Alternating decision tree

Encyclopedia

An alternating decision tree (ADTree) is a machine learning

method for classification. It generalizes decision trees

and has connections to boosting

.

and Llew Mason. However, the algorithm as presented had several typographical errors. Clarifications and optimizations were later presented by Bernhard Pfahringer, Geoffrey Holmes and Richard Kirkby . Implementations are available in Weka

and JBoost.

algorithms typically used either decision stump

s

or decision trees as weak hypotheses. As an example, boosting decision stump

s creates

a set of weighted decision stumps (where

weighted decision stumps (where

is the number of boosting iterations), which then vote on the final classification according to their weights. Individual decision stumps are weighted according to their ability to classify the data.

Boosting a simple learner results in an unstructured set of hypotheses, making it difficult to infer correlation

hypotheses, making it difficult to infer correlation

s between attributes. Alternating decision trees introduce structure to the set of hypotheses by requiring that they build off a hypothesis that was produced in an earlier iteration. The resulting set of hypotheses can be visualized in a tree based on the relationship between a hypothesis and its "parent."

Another important feature of boosted algorithms is that the data is given a different distribution

at each iteration. Instances that are misclassified are given a larger weight while accurately classified instances are given reduced weight.

and regular email is coded as

and regular email is coded as  .

.

The following table contains part of the information for a single instance.

The following table contains part of the information for a single instance.

The instance is scored by summing all of the prediction nodes through which it passes. In the case of the instance above, the score is

calculate as

The final score of is positive, so the instance is classified as spam. The magnitude of the value is a measure of confidence in the prediction. The original authors list three potential levels of interpretation for the set of attributes identified by an ADTree:

is positive, so the instance is classified as spam. The magnitude of the value is a measure of confidence in the prediction. The original authors list three potential levels of interpretation for the set of attributes identified by an ADTree:

Care must be taken when interpreting individual nodes as the scores reflect a re weighting of the data in each iteration.

The fundamental element of the ADTree algorithm is the rule. A single

rule consists of a precondition, a condition, and two scores. A

condition is a predicate of the form "attribute value."

A precondition is simply a logical conjunction

of conditions.

Evaluation of a rule involves a pair of nested if statements:

1 if(precondition)

2 if(condition)

3 return score_one

4 else

5 return score_two

6 end if

7 else

8 return 0

9 end if

Several auxiliary functions are also required by the algorithm:

The algorithm is as follows:

1 function ad_tree

2 input Set of training instances

training instances

3

4 for all

for all

5

6 a rule with scores

a rule with scores  and

and  , precondition "true" and condition "true."

, precondition "true" and condition "true."

7

8 the set of all possible conditions

the set of all possible conditions

9 for

10 get values that minimize

get values that minimize

11

12

13

14 new rule with precondition

new rule with precondition  , condition

, condition  , and weights

, and weights  and

and

15

16 end for

17 return set of

The set grows by two preconditions in each iteration, and it is possible to derive the tree structure of a set of rules by making note of the precondition that is used in each successive rule.

grows by two preconditions in each iteration, and it is possible to derive the tree structure of a set of rules by making note of the precondition that is used in each successive rule.

ADTrees are typically as robust as boosted decision trees and boosted decision stump

s. Typically, equivalent accuracy can be achieved with a much simpler tree structure than recursive partitioning algorithms.

Machine learning

Machine learning, a branch of artificial intelligence, is a scientific discipline concerned with the design and development of algorithms that allow computers to evolve behaviors based on empirical data, such as from sensor data or databases...

method for classification. It generalizes decision trees

Decision tree learning

Decision tree learning, used in statistics, data mining and machine learning, uses a decision tree as a predictive model which maps observations about an item to conclusions about the item's target value. More descriptive names for such tree models are classification trees or regression trees...

and has connections to boosting

Boosting

Boosting is a machine learning meta-algorithm for performing supervised learning. Boosting is based on the question posed by Kearns: can a set of weak learners create a single strong learner? A weak learner is defined to be a classifier which is only slightly correlated with the true classification...

.

History

ADTrees were introduced by Yoav FreundYoav Freund

Yoav Freund is a researcher and professor at the University of California, San Diego who mainly works on machine learning, probability theory and related fields and applications.From his homepage:...

and Llew Mason. However, the algorithm as presented had several typographical errors. Clarifications and optimizations were later presented by Bernhard Pfahringer, Geoffrey Holmes and Richard Kirkby . Implementations are available in Weka

Weka (machine learning)

Weka is a popular suite of machine learning software written in Java, developed at the University of Waikato, New Zealand...

and JBoost.

Motivation

Original boostingBoosting

Boosting is a machine learning meta-algorithm for performing supervised learning. Boosting is based on the question posed by Kearns: can a set of weak learners create a single strong learner? A weak learner is defined to be a classifier which is only slightly correlated with the true classification...

algorithms typically used either decision stump

Decision stump

A decision stump is a machine learning model consisting of a one-level decision tree. That is, it is a decision tree with one internal node which is immediately connected to the terminal nodes. A decision stump makes a prediction based on the value of just a single input feature...

s

or decision trees as weak hypotheses. As an example, boosting decision stump

Decision stump

A decision stump is a machine learning model consisting of a one-level decision tree. That is, it is a decision tree with one internal node which is immediately connected to the terminal nodes. A decision stump makes a prediction based on the value of just a single input feature...

s creates

a set of

weighted decision stumps (where

weighted decision stumps (where

is the number of boosting iterations), which then vote on the final classification according to their weights. Individual decision stumps are weighted according to their ability to classify the data.

Boosting a simple learner results in an unstructured set of

hypotheses, making it difficult to infer correlation

hypotheses, making it difficult to infer correlationCorrelation

In statistics, dependence refers to any statistical relationship between two random variables or two sets of data. Correlation refers to any of a broad class of statistical relationships involving dependence....

s between attributes. Alternating decision trees introduce structure to the set of hypotheses by requiring that they build off a hypothesis that was produced in an earlier iteration. The resulting set of hypotheses can be visualized in a tree based on the relationship between a hypothesis and its "parent."

Another important feature of boosted algorithms is that the data is given a different distribution

Frequency distribution

In statistics, a frequency distribution is an arrangement of the values that one or more variables take in a sample. Each entry in the table contains the frequency or count of the occurrences of values within a particular group or interval, and in this way, the table summarizes the distribution of...

at each iteration. Instances that are misclassified are given a larger weight while accurately classified instances are given reduced weight.

Alternating decision tree structure

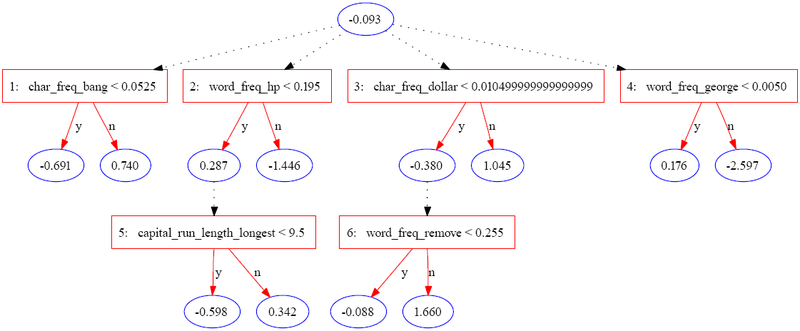

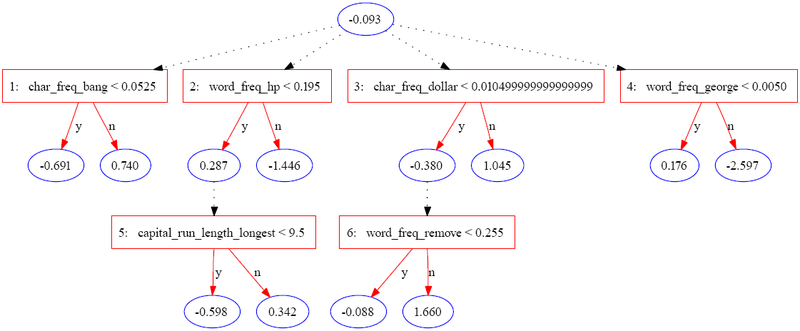

An alternating decision tree consists of decision nodes and prediction nodes. Decision nodes specify a predicate condition. Prediction nodes contain a single number. ADTrees always have prediction nodes as both root and leaves. An instance is classified by an ADTree by following all paths for which all decision nodes are true and summing any prediction nodes that are traversed. This is different from binary classification trees such as CART (Classification and regression tree) or C4.5 in which an instance follows only one path through the tree.Example

The following tree was constructed using JBoost on the spambase dataset (available from the UCI Machine Learning Repository). In this example, spam is coded as and regular email is coded as

and regular email is coded as  .

.

| Feature | Value |

|---|---|

| char_freq_bang | 0.08 |

| word_freq_hp | 0.4 |

| capital_run_length_longest | 4 |

| char_freq_dollar | 0 |

| word_freq_remove | 0.9 |

| word_freq_george | 0 |

| Other features | ... |

The instance is scored by summing all of the prediction nodes through which it passes. In the case of the instance above, the score is

calculate as

| Iteration | 0 | 1 | 2 | 3 | 4 | 5 | 6 |

|---|---|---|---|---|---|---|---|

| Instance values | N/A | .08 < .052 = f | .4 < .195 = f | 0 < .01 = t | 0 < 0.005 = t | N/A | .9 < .225 = f |

| Prediction | -0.093 | 0.74 | -1.446 | -0.38 | 0.176 | 0 | 1.66 |

The final score of

is positive, so the instance is classified as spam. The magnitude of the value is a measure of confidence in the prediction. The original authors list three potential levels of interpretation for the set of attributes identified by an ADTree:

is positive, so the instance is classified as spam. The magnitude of the value is a measure of confidence in the prediction. The original authors list three potential levels of interpretation for the set of attributes identified by an ADTree:

- Individual nodes can be evaluated for their own predictive ability.

- Sets of nodes on the same path may be interpreted as having a joint effect

- The tree can be interpreted as a whole.

Care must be taken when interpreting individual nodes as the scores reflect a re weighting of the data in each iteration.

Description of the algorithm

The inputs to the alternating decision tree algorithm are:- A set of inputs

where

where  is a vector of attributes and

is a vector of attributes and  is either -1 or 1. Inputs are also called instances.

is either -1 or 1. Inputs are also called instances. - A set of weights

corresponding to each instance.

corresponding to each instance.

The fundamental element of the ADTree algorithm is the rule. A single

rule consists of a precondition, a condition, and two scores. A

condition is a predicate of the form "attribute

A precondition is simply a logical conjunction

Logical conjunction

In logic and mathematics, a two-place logical operator and, also known as logical conjunction, results in true if both of its operands are true, otherwise the value of false....

of conditions.

Evaluation of a rule involves a pair of nested if statements:

1 if(precondition)

2 if(condition)

3 return score_one

4 else

5 return score_two

6 end if

7 else

8 return 0

9 end if

Several auxiliary functions are also required by the algorithm:

-

returns the sum of the weights of all positively labeled examples that satisfy predicate

returns the sum of the weights of all positively labeled examples that satisfy predicate

-

returns the sum of the weights of all negatively labeled examples that satisfy predicate

returns the sum of the weights of all negatively labeled examples that satisfy predicate

-

returns the sum of the weights of all examples that satisfy predicate

returns the sum of the weights of all examples that satisfy predicate

The algorithm is as follows:

1 function ad_tree

2 input Set of

training instances

training instances3

4

for all

for all

5

6

a rule with scores

a rule with scores  and

and  , precondition "true" and condition "true."

, precondition "true" and condition "true."7

8

the set of all possible conditions

the set of all possible conditions9 for

10

get values that minimize

get values that minimize

11

12

13

14

new rule with precondition

new rule with precondition  , condition

, condition  , and weights

, and weights  and

and

15

16 end for

17 return set of

The set

grows by two preconditions in each iteration, and it is possible to derive the tree structure of a set of rules by making note of the precondition that is used in each successive rule.

grows by two preconditions in each iteration, and it is possible to derive the tree structure of a set of rules by making note of the precondition that is used in each successive rule.Empirical results

Figure 6 in the original paper demonstrates thatADTrees are typically as robust as boosted decision trees and boosted decision stump

Decision stump

A decision stump is a machine learning model consisting of a one-level decision tree. That is, it is a decision tree with one internal node which is immediately connected to the terminal nodes. A decision stump makes a prediction based on the value of just a single input feature...

s. Typically, equivalent accuracy can be achieved with a much simpler tree structure than recursive partitioning algorithms.

External links

- An introduction to Boosting and ADTrees (Has many graphical examples of alternating decision trees in practice).

- JBoost software implementing ADTrees.